Cognitive computing cloud platform for media and entertainment

Human-like comprehension for media workflow automation. Brain-inspired AI video content analysis delivers the informed decisions that production automation depends on.

Intelligent, automated, scalable video processing

We are building and adapting AI and cognitive science to bring video content analysis to a human level of comprehension. Our unique technology framework meets the needs of the M&E industry, automating production workflows through the analysis of movies, sports, TV shows, and user-generated content.

Unseen contextual understanding

A potent cocktail of AI and cognitive science enables CognitiveMill™ to easily recognize the theme and context of any footage.

Proprietary technology application

The entire technology stack is built by the AIHunters team to make sure it applies to a wide variety of content types.

No third-party data required

CognitiveMill™ makes informed decisions based only on the data extracted from the footage. No need for timestamps or script extracts.

Brain-inspired computer vision

We strive to model our algorithms after the human brain, imitating the way we focus on things, analyze them, and make decisions.

Specifically designed for M&E

Our cloud platform caters to media industry professionals. It can automate the production of TV shows, movies, sports broadcasts, news coverage, social media content, and more.

Anticipating tomorrow’s demand

We optimize the efficiency of your content production pipelines with intelligent technology that brings meaningful insights.

Cognitive Computing Cloud Platform supercharges Media & Entertainment production

Media insights. At scale

The accuracy of our business solution approaches human-level comprehension while surpassing humans in speed and scale. What you get is concentrated knowledge retrieved from the visuals that helps you make informed decisions, supercharging your production efficiency.

Transforming how decisions get made

Cognitive Computing gathers and analyzes data in a manner previously associated only with humans. It includes individual technologies that replicate the cognitive functions of the human brain to match its decision-making effectiveness.

Benefit from Cognitive AI

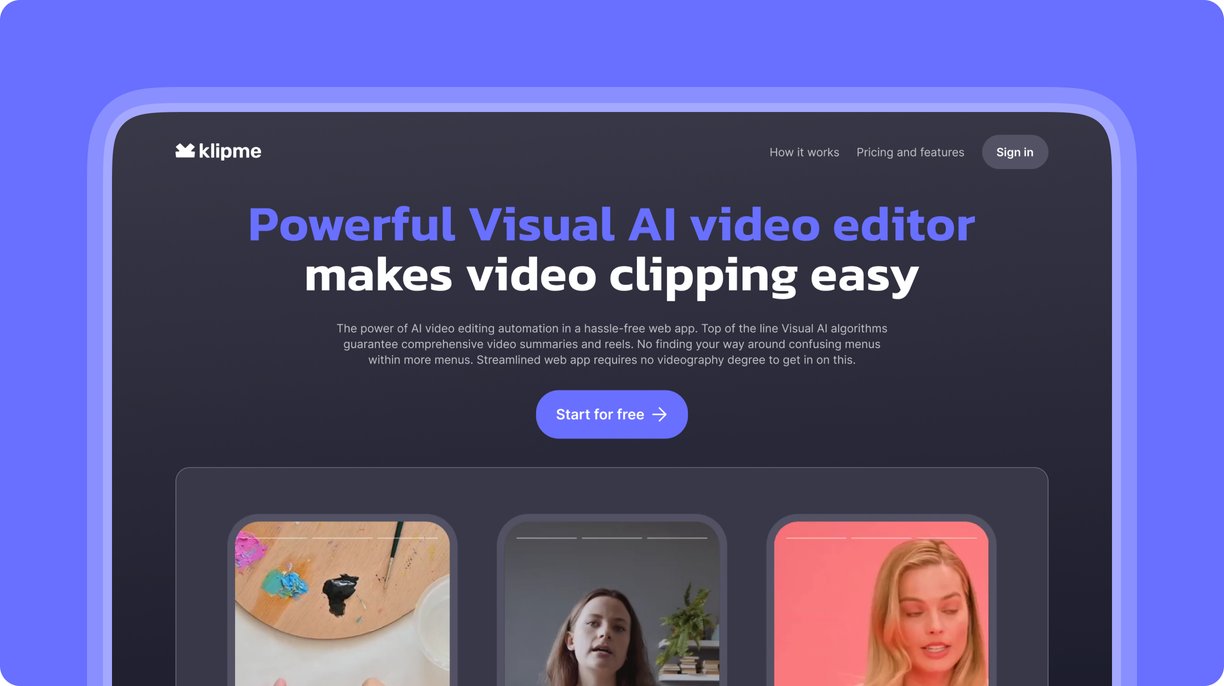

Klipme App

Klipme packs all the video analysis goodness that CognitiveMill™ offers into a hassle-free web app. Same speed, same accuracy: you just log in, upload your videos, get processed clips, and move on.

Boost your video content processing by automating the tedious manual work

Cut the time your editors spend on menial tasks and let them focus on creativity.

Understand the whole video context, not just the objects

A deeper insight into video content allows for more effective and adaptive decision-making.

Deliver processed content to various channels in seconds

CognitiveMill™ analyzes and processes videos 50 times faster than a human editor.

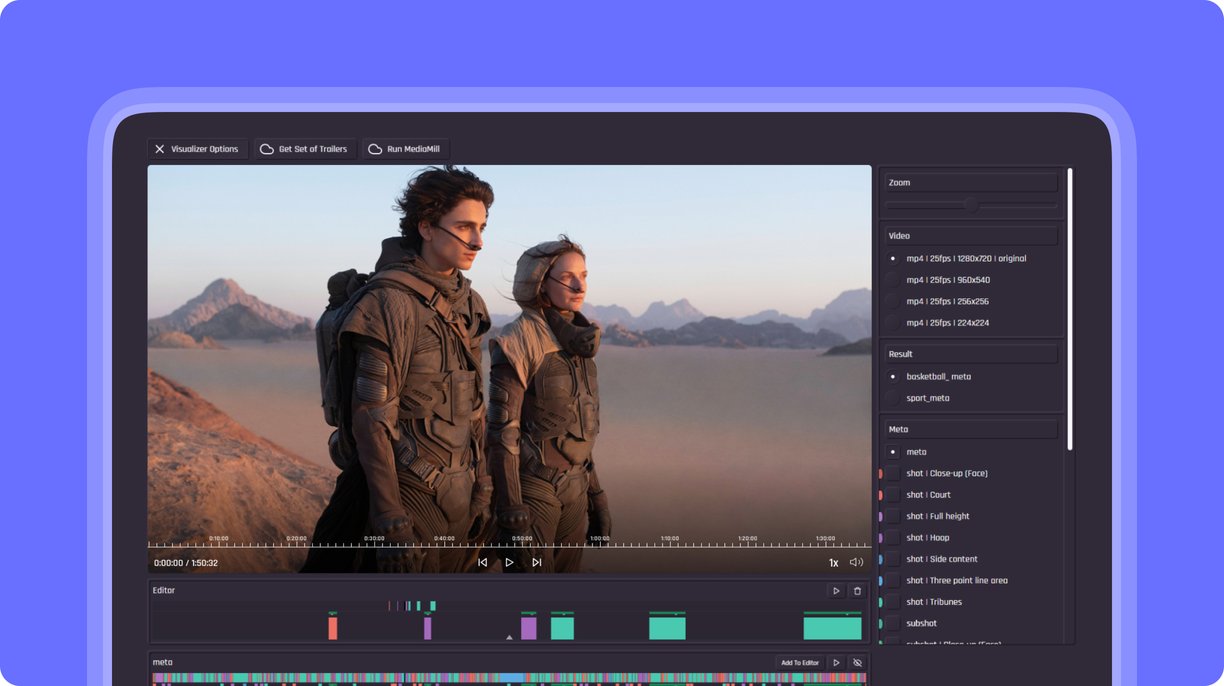

Cognitive Mill DemoUI App

CognitiveMill™ is a cloud infrastructure that scales to hundreds of hours of media processing daily. It is reliable and specifically created for the increasing workloads of the media and entertainment industry.

We offer ready-to-go API integration alongside a convenient user-facing tool.

Get reels ready for social media in seconds

Klipme cuts the best parts from any type of footage and edits them together into clips that you can share on social media right away.

Control the clips you get from Klipme

You can choose the type of editing Klipme does, apply smart cropping, and add subtitles and effects.

Stay ahead of the agenda on social media

Klipme allows you to process your content much faster, enabling you to stay competitive in the online space.

Put your service in the viewer’s spotlight!

Specifically designed to cover the needs of Media and Entertainment, Cognitive Mill™ is a cognitive computing cloud platform that scales to hundreds of hours of media processing daily.

By leveraging complex cognitive computing algorithms, our platform significantly reduces the money (up to 5x) and time (up to 50x) spent on video processing by cutting the working time of customers such as:

Leverage the power of Cognitive Automation to boost your content processing. Solve all the corresponding tasks tenfold faster with the Cognitive Mill™ cloud platform.

Our cloud infrastructure scales to hundreds of hours of media processing daily. It is reliable and specifically created to handle media and entertainment industry workloads.

Ensure an exceptional video content consumption experience for your viewers with Cognitive Automation by Cognitive Mill™.

Get rid of hours of tedious manual video content processing. Your media editing and post-production operations can be automated by Cognitive Mill™ AI.

Our scalable cloud infrastructure masters on-the-go processing of live events. The speed and intelligence of our cloud will help you outrun the competition in an era when content is king.

Increase your user retention rates by boosting viewer engagement with our AI-powered tools. Leverage AI-based automation for an enhanced OTT experience.
