Horse racing tracking

Multi-object tracking applied to animals

Multi-object tracking is a core problem in public surveillance, and many methods exist for tracking multiple objects.

In most cases, when we talk about tracking we mean tracking people. This is partly because many methods rely on additional identification, and partly because some methods lose precision when the tracked objects look alike.

Here we compare two of the many conceptual approaches to multi-object tracking: neural network-based trackers (using Re3 as the example) and MCMC data association (using its only practical implementation as the example). Let’s see which of these options tracks multiple objects more precisely.

First, let’s apply the neural network-based tracker described in our article on AcurusTrack. This method is a classic tracking algorithm in the sense that it takes a detection from the N-th frame as input and then follows the object on its own through the subsequent frames: N+1, N+2, …, N+k.

As we described in the AcurusTrack article, Re3 is one of the more powerful trackers currently available. Nevertheless, when applied to this problem, it shows a number of disadvantages:

The tracker doesn’t stop even when it loses an object:

The tracker often locks onto the background rather than the object itself. In this example you can see how the second bounding box includes a considerable amount of background along with the horse:

When tracking similar objects or encountering occlusions, the tracker often merges all the objects into one, as this example shows even on a small scale:

In multi-object tracking mode, the tracker predicts the objects’ positions by analyzing all of them at the same time. This makes its behavior unpredictable and its outcomes interdependent:

We tried to apply AcurusTrack to horse racing

Let's try to apply the AcurusTrack component to the current task.

First of all, note that AcurusTrack needs detections of the target object on every frame. For that, we use the YOLO detector.

Applying AcurusTrack to horse racing has its own peculiarities. For example, the detection does not work as well as we need because:

- It often jumps from one area to another, moving erratically, and sometimes detects a horse together with its rider and sometimes separately. You can try to work around this by using only the X coordinate. For most horse racing videos this works because of the specifics of the movement and the shooting.

- It shifts very sharply and sometimes detects only part of a horse. We didn’t encounter this when working with face detection, where the detection was quite stable (a face was either detected or not, with no partial detections). With pose detection we filtered out some results (e.g. when one arm or leg was detected separately). Horses are detected with a bounding box, so there’s no way of knowing whether a horse has been detected correctly. The coordinates shift very sharply, which makes it hard for the Kalman filter to adapt to them.

- In videos similar to the following one, the camera does not keep up with the movement of the horses. As a result, the horses appear to run in one direction or the other in a fixed coordinate system, depending on the movement of the camera.
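To illustrate why partial detections are hard on a Kalman filter, here is a minimal sketch of a constant-velocity filter that works only with the X coordinate of the bounding box center. It is not the filter used inside AcurusTrack: the class name `KalmanX`, the noise values `q` and `r`, and the synthetic boxes are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class KalmanX:
    """Constant-velocity Kalman filter over one coordinate (position x, velocity v)."""
    x: float            # position estimate, pixels
    v: float = 0.0      # velocity estimate, pixels per frame
    # Entries of the 2x2 covariance matrix [[pxx, pxv], [pxv, pvv]].
    pxx: float = 100.0
    pxv: float = 0.0
    pvv: float = 100.0
    q: float = 1.0      # process noise variance (assumed value)
    r: float = 25.0     # measurement noise variance (assumed value)

    def step(self, z: float) -> float:
        """Predict one frame ahead, update with measurement z, return the innovation."""
        # Predict with F = [[1, 1], [0, 1]]: x' = x + v, P' = F P F^T + Q.
        x_pred = self.x + self.v
        pxx = self.pxx + 2 * self.pxv + self.pvv + self.q
        pxv = self.pxv + self.pvv
        pvv = self.pvv + self.q
        # Update with H = [1, 0]: only the position is measured.
        innovation = z - x_pred
        s = pxx + self.r
        kx, kv = pxx / s, pxv / s
        self.x = x_pred + kx * innovation
        self.v = self.v + kv * innovation
        self.pxx = (1 - kx) * pxx
        self.pxv = (1 - kx) * pxv
        self.pvv = pvv - kv * pxv
        return innovation


def center_x(box):
    """X coordinate of the center of an (x1, y1, x2, y2) box."""
    x1, _, x2, _ = box
    return (x1 + x2) / 2.0


# Synthetic horse moving 5 px per frame, fully detected on frames 0..19 ...
boxes = [(100 + 5 * t, 50, 200 + 5 * t, 150) for t in range(20)]
# ... then only the front part of the horse is detected on frame 20.
boxes.append((260, 50, 300, 150))

centers = [center_x(b) for b in boxes]
kf = KalmanX(x=centers[0])
innovations = [kf.step(z) for z in centers[1:]]
print(innovations[-2], innovations[-1])
```

While the horse is fully detected, the innovation (measurement minus prediction) shrinks towards zero. On the frame with the partial box, the center jumps by 30 px and the innovation jumps with it, even though the horse itself kept moving smoothly.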

Given the issues stated above, we need to apply EvenVizion, the camera stabilization component designed by AIHunters.
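To make the idea of a fixed coordinate system concrete, here is a minimal sketch that assumes the camera motion between consecutive frames is already known as a 3x3 homography. This is not the EvenVizion API: the function names, the pure-translation homographies, and the toy scene are assumptions for illustration only.

```python
def matmul3(a, b):
    """Multiply two 3x3 matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def apply_homography(h, point):
    """Map a 2D point through homography h using homogeneous coordinates."""
    x, y = point
    xh = h[0][0] * x + h[0][1] * y + h[0][2]
    yh = h[1][0] * x + h[1][1] * y + h[1][2]
    w = h[2][0] * x + h[2][1] * y + h[2][2]
    return (xh / w, yh / w)


def to_fixed_coordinates(frame_to_prev, points_per_frame):
    """Express every frame's points in the coordinate system of frame 0.

    frame_to_prev[t] maps frame t coordinates to frame t-1 coordinates;
    frame_to_prev[0] must be the identity.
    """
    total = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    fixed = []
    for h, points in zip(frame_to_prev, points_per_frame):
        total = matmul3(total, h)  # now maps frame t -> frame 0
        fixed.append([apply_homography(total, p) for p in points])
    return fixed


# Toy scene: the camera pans right by 10 px per frame, so a point seen at x
# in frame t was at x + 10 in frame t-1.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
pan = [[1.0, 0.0, 10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
frame_to_prev = [identity, pan, pan, pan]

# Per frame: [static post, horse]. In image coordinates the post drifts left,
# and the horse (15 px per frame in the scene) seems to move only 5 px per frame.
points_per_frame = [[(500.0 - 10 * t, 200.0), (300.0 + 5 * t, 220.0)]
                    for t in range(4)]

fixed = to_fixed_coordinates(frame_to_prev, points_per_frame)
for t, (post, horse) in enumerate(fixed):
    print(t, post, horse)
```

In the fixed system the static post stays in one place and the horse moves at its real speed, which is the kind of input a tracker that relies on motion dynamics needs.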

EvenVizion improvements for horse racing application

Let’s apply the current version of the EvenVizion algorithm to our cases. As you can see, the result is not what we expected:

This happens because EvenVizion clings to moving objects, in this case the horses. Here are some examples of matching:

Let's filter out the points located on moving objects and on the static logo. The filtering can be visualized like this:

The fixed coordinate system that we get after these actions looks like this:

Here you can see that the coordinates in the fixed system shift sharply, especially at moments when the objects that EvenVizion could latch onto (signs, distinctive elements of the fence) disappear from the frame and only grass remains. Still, this limitation is explainable.

The system itself now performs in a more predictable way because:

- We excluded the points on moving objects and the logo from processing. These points are not representative: they tell us nothing about camera movement.

- Now the EvenVizion algorithm considers only the parameters of stationary objects.

- You can see the real path that the horses run.
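The filtering step can be sketched as follows, assuming point matches between two frames and the excluded regions (horse bounding boxes and the logo area) are already available. The function names and the sample coordinates are hypothetical, and this is not EvenVizion's actual code.

```python
def inside(point, box):
    """True if the (x, y) point lies within the (x1, y1, x2, y2) box."""
    x, y = point
    x1, y1, x2, y2 = box
    return x1 <= x <= x2 and y1 <= y <= y2


def keep_background_matches(matches, prev_excluded, curr_excluded):
    """Keep only matches whose endpoints both fall outside the excluded boxes.

    matches: list of (point_in_previous_frame, point_in_current_frame).
    prev_excluded / curr_excluded: boxes to ignore in each frame.
    """
    kept = []
    for p_prev, p_curr in matches:
        on_prev = any(inside(p_prev, box) for box in prev_excluded)
        on_curr = any(inside(p_curr, box) for box in curr_excluded)
        if not (on_prev or on_curr):
            kept.append((p_prev, p_curr))
    return kept


logo = (20, 20, 120, 60)            # static overlay, same place in every frame
horse_prev = (300, 200, 420, 300)   # detection in the previous frame
horse_curr = (310, 200, 430, 300)   # detection in the current frame

matches = [
    ((350, 250), (362, 250)),   # on the horse: reflects horse motion, not camera motion
    ((50, 40), (50, 40)),       # on the logo: never moves, whatever the camera does
    ((600, 280), (590, 280)),   # fence post: reflects camera motion
    ((305, 150), (295, 150)),   # sign above the horse, outside its box
]

background = keep_background_matches(
    matches, [horse_prev, logo], [horse_curr, logo]
)
print(background)
```

Only the fence post and the sign survive, and both shift by the same 10 px, so the camera motion estimated from them is consistent.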

Leaps at some moments happen because the frame contains no objects from which the movement can be estimated. Even a human finds the movement hard to see, as a kind of optical illusion arises (e.g. it is hard to tell in which direction the horses move on segments where there is only a white fence and horses). EvenVizion starts matching different points as the same one (they all look alike, since the white fence consists of repeating elements), and a jump occurs.

The following video example is of particular interest. Here you can see clearly how the system stopped jumping and moving in another direction:

In videos with a more varied background the effect is less noticeable, since there are many other objects that EvenVizion can latch onto without relying on moving objects.

Now we are going to apply AcurusTrack to what we have so far.

Applying AcurusTrack again after the improvements

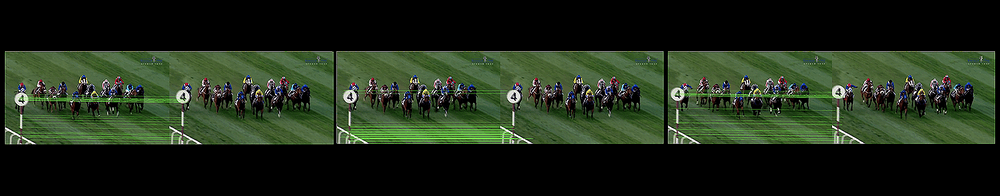

Here is a working example of AcurusTrack in its multi-scan (basic) version:

The basic version of AcurusTrack works as expected. It tracks objects well and copes with occlusions when objects move in opposite directions or have different movement dynamics.

Cross-IDs can be created in the following situations:

- When objects move in parallel, identically, in the same direction.

- If detection is substandard.

- When objects are united by one bounding box and continue moving like this for some time.

ID duplicates can be created in the following situations:

- When horses run towards the camera (acceleration). Duplicates are expected here.

- If there are sudden jerks of a camera.

Now we are going to consider a single-shot version described in the article about MCMCDA.

Its main advantages are:

- Real-time: the algorithm analyzes a video only once.

- The algorithm leverages the knowledge of the number of objects, thus aiming at reducing the number of duplicates.

However, the single-shot version performed worse than the basic version of AcurusTrack, because tracklets disappear over time. This is caused by the detection issues and horse-specific behavior described above.

We can also see significant shifts of the bounding box centers because, as mentioned before, we often detect only part of an object rather than the whole. This makes the movement inconsistent, ragged, and sharp. In the multi-scan version, these jumps of an object’s center were less noticeable in the overall picture, largely thanks to preprocessing and initialization. Here they play a major role and cause tracks to break off.

This problem cannot be solved by changing the configuration parameters (for example, by reducing the sensitivity), because that would greatly increase the number of cross-IDs when objects are close to each other.

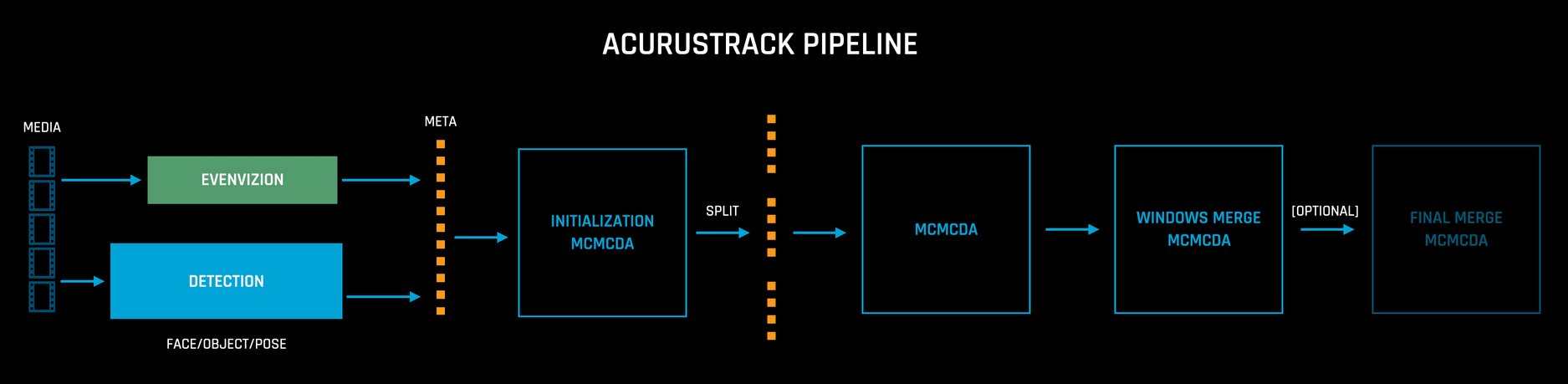

The AcurusTrack pipeline

To summarize the sequence of steps we took to track horses on racing videos:

- Detect objects with YOLO;

- Apply EvenVizion to transfer to a fixed coordinate system;

- Filter out the points on moving objects and the logo;

- Apply AcurusTrack in its multi-scan version.
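The order of these steps can be sketched as a small driver function. Everything here is hypothetical: `track_horses` and the toy stand-ins are not part of YOLO, EvenVizion, or AcurusTrack. The sketch also assumes that the association step runs over all collected frames rather than frame by frame.

```python
def track_horses(frames, detect, stabilize, associate, logo_box):
    """Run detection, stabilization with point filtering, then association.

    detect(frame) -> list of (x1, y1, x2, y2) boxes in image coordinates.
    stabilize(frame, boxes, excluded) -> the same boxes in fixed coordinates,
        estimated without using points inside the `excluded` boxes.
    associate(boxes_per_frame) -> one list of track IDs per frame.
    """
    fixed_per_frame = []
    for frame in frames:
        boxes = detect(frame)                       # detect objects
        excluded = list(boxes) + [logo_box]         # regions to ignore when matching points
        fixed_per_frame.append(stabilize(frame, boxes, excluded))
    return associate(fixed_per_frame)               # association over all frames


# Toy stand-ins so the sketch runs end to end.
frames = [
    {"pan": 0, "boxes": [(100, 0, 150, 50), (300, 0, 350, 50)]},
    {"pan": 10, "boxes": [(300, 0, 350, 50), (95, 0, 145, 50)]},
]


def toy_detect(frame):
    return frame["boxes"]


def toy_stabilize(frame, boxes, excluded):
    # Undo a known horizontal pan; a real stabilizer would estimate the
    # camera motion from points outside `excluded`.
    dx = frame["pan"]
    return [(x1 + dx, y1, x2 + dx, y2) for x1, y1, x2, y2 in boxes]


def toy_associate(boxes_per_frame):
    # Assign IDs by left-to-right order; only meaningful for this toy data.
    return [[sorted(boxes).index(box) for box in boxes]
            for boxes in boxes_per_frame]


tracks = track_horses(frames, toy_detect, toy_stabilize, toy_associate,
                      logo_box=(20, 20, 120, 60))
print(tracks)
```

Passing the stages in as functions keeps the sketch independent of any particular detector or tracker implementation.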

You can see a general conceptual visualization of the AcurusTrack pipeline below:

In conclusion

Multi-object tracking is a common task in the public surveillance domain. To handle complicated movement with multiple occlusions and still track objects well, AIHunters introduced an open-source component, AcurusTrack.

We believe this technology has many other use cases. Here we considered one of them: multi-object tracking in horse racing. With the help of such processing, stationary cameras on the field can be controlled to automatically track the objects they need.

AcurusTrack can be applied to virtually any sport or competition where it is essential to track an individual person, animal, or car. By eliminating manual effort, production automation at scale can be achieved.

As we at AIHunters pay close attention to media and entertainment industry tech, you can expect this story to be continued. Stay tuned and don’t miss our updates!