Catching feelings: sentiment analysis in video content

Let me tell you something.

We’ve come a long way in teaching technology to perceive and analyze the world around it.

It recognizes objects in both images and video, understands spoken and written words, analyzes all kinds of data, and even makes decisions.

In the quest to bring machine intelligence closer to the likes of the human brain, engineers all over the world work tirelessly to expand its capabilities.

For example, teaching it to understand sentiment and emotions.

Can AI actually perceive emotions? And, most importantly, can we use it to our advantage in the media and entertainment (M&E) industry?

Let’s find out.

Why do we need video sentiment analysis?

Let’s get the simple question out of the way: yes, the media industry can benefit a great deal from video sentiment analysis and emotion detection technology.

To give audiences a better experience, media companies use every bit of data they can get their hands on.

And sentiment may become a big part of that.

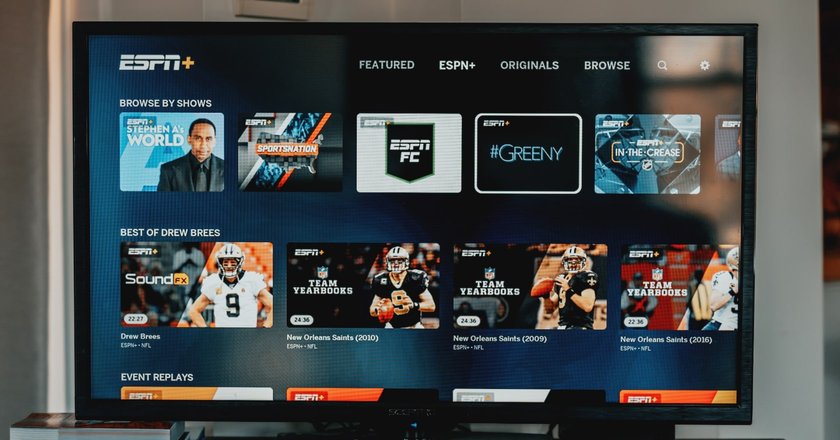

Today, we have systems that recommend content to viewers based on what they like and what they have watched before.

But imagine adding emotion detection into the mix: once you’ve analyzed the sentiment of the content, your streaming platform can recommend melancholic dramas for cozy nights, or off-the-rails game shows for evenings with friends.
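To make the idea concrete, here is a minimal Python sketch of mood-based filtering. It assumes each title has already been tagged with a single mood by some sentiment model. The `Title` class, the `OCCASION_MOODS` mapping, and the mood and title names are all hypothetical, and a real recommender would likely blend mood with viewing history rather than filter on mood alone.

```python
from dataclasses import dataclass


@dataclass
class Title:
    name: str
    mood: str  # hypothetical mood tag assigned by a sentiment model


# Hypothetical mapping from a viewing occasion to the moods that suit it.
OCCASION_MOODS = {
    "cozy night": {"melancholic"},
    "friends over": {"upbeat", "chaotic"},
}


def recommend(catalog: list[Title], occasion: str) -> list[str]:
    """Return names of titles whose mood tag suits the occasion."""
    wanted = OCCASION_MOODS.get(occasion, set())
    return [title.name for title in catalog if title.mood in wanted]


catalog = [
    Title("Drama A", "melancholic"),
    Title("Game Show B", "chaotic"),
    Title("Thriller C", "tense"),
]
print(recommend(catalog, "cozy night"))  # ['Drama A']
```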

That kind of mood-aware recommendation gives people a good reason to keep returning to your platform for more.

Sentiment can also help with video production: get the sentiment of each scene in a movie, and you can cut suspenseful trailers or action-packed ones.
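A hedged sketch of the trailer idea: assuming a model has already given every scene a suspense score between 0 and 1 (the `Scene` type and its `suspense` field are hypothetical), picking trailer material can be as simple as taking the top-scoring scenes and putting them back in story order.

```python
from typing import NamedTuple


class Scene(NamedTuple):
    start: float     # seconds from the beginning of the film
    end: float
    suspense: float  # hypothetical per-scene score in [0, 1]


def pick_trailer_scenes(scenes: list[Scene], k: int = 3) -> list[Scene]:
    """Take the k most suspenseful scenes and return them in story order."""
    top = sorted(scenes, key=lambda s: s.suspense, reverse=True)[:k]
    return sorted(top, key=lambda s: s.start)


scenes = [Scene(0, 10, 0.2), Scene(10, 20, 0.9), Scene(20, 30, 0.5), Scene(30, 40, 0.8)]
print(pick_trailer_scenes(scenes, k=2))  # the 10-20 s and 30-40 s scenes
```

Re-sorting by start time keeps the trailer in narrative order; an actual editor would still need transitions, pacing, and audio on top of this selection step.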

Zoom calls are another good place to apply emotion detection. Recordings can reveal a lot about how people react to each point on the agenda, which helps sales managers improve their pitches.

And don’t forget sorting! The more data you have about the content, the easier it is to find and manage said content.

Nuance of the emotion detection

Before we talk about the technology, let’s establish one thing: video sentiment analysis is hard, and no workaround can replace it.

You can’t just apply object recognition or natural language processing and expect the technology to identify complex emotions.


For instance, the system will be able to identify a jump-scare by “hearing” the main character scream, but what about the intense questioning scenes in crime movies? Or the heavy-hitting and revealing conversations in dramas? Suspense-filled scenes in thrillers?

In those cases, viewers can feel the emotion: it’s thick, yet subtle at the same time. There is no screaming and there are no red faces to identify.

That directly limits how well AI can automate content production. If it can’t detect a sentiment, it can’t produce clips centered on that sentiment.

So, you don’t get your suspenseful thriller trailers, your cheerful home runs, and so on.

What about the mood-based content recommendations delivered by those workarounds? Yeah, forget about that as well.

How can we approach sentiment analysis in video content more effectively then?

What is video sentiment analysis?

Sentiment analysis refers to a set of technologies aimed at identifying people’s feelings from text, audio, or video recordings.

The system usually classifies the content by giving it a score that signifies a particular sentiment. It can be a probability score: the software says, for example, that it’s 65% sure the content is positive, 25% sure it’s neutral, and 10% sure it’s negative, with the three probabilities adding up to 100%.
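Probability scores like these are commonly produced with a softmax over a model’s raw outputs. The sketch below assumes a three-class model that returns one raw number (a logit) per label. The `LABELS` order and the example logits are made up for illustration.

```python
import math

LABELS = ("positive", "neutral", "negative")  # assumed label order


def softmax(logits: list[float]) -> list[float]:
    """Turn raw model outputs into probabilities that sum to 1."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(logits: list[float]) -> tuple[str, dict[str, float]]:
    """Return the most likely label plus the full probability table."""
    scores = dict(zip(LABELS, softmax(logits)))
    label = max(scores, key=scores.get)
    return label, scores


label, scores = classify([2.0, 1.0, 0.1])  # hypothetical logits
# label == "positive"; scores are roughly 0.66, 0.24, 0.10
```

With these example logits, the probabilities come out at roughly 66% positive, 24% neutral, and 10% negative. By construction, they always sum to 100%.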


Another widespread system is a spectrum represented by a number from -1 to 1.

Here, three points on the scale correspond to the three sentiments:

- -1 — negative;

- 0 — neutral;

- 1 — positive.

In this case, we feed the system, let’s say, a piece of text, and the software returns a score of 0.8. We interpret that as positive sentiment, since 0.8 is closer to 1 than to 0 or -1.
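The nearest-anchor reading can be written down directly. This is a minimal sketch of that rule only. Tools may define their own cutoffs, such as a narrower neutral band, so the boundaries this rule implies (at -0.5 and 0.5) are an assumption.

```python
# Anchor points of the -1..1 scale and the label each one stands for.
ANCHORS = {-1.0: "negative", 0.0: "neutral", 1.0: "positive"}


def polarity_label(score: float) -> str:
    """Label a score in [-1, 1] by its nearest anchor point."""
    if not -1.0 <= score <= 1.0:
        raise ValueError(f"score must be in [-1, 1], got {score}")
    nearest = min(ANCHORS, key=lambda anchor: abs(score - anchor))
    return ANCHORS[nearest]


print(polarity_label(0.8))   # positive
print(polarity_label(-0.3))  # neutral
```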

So, we have AI doing that.

It can say whether a piece of content conveys a positive, negative, or neutral message, and it works with several types of content, including text, audio, and video.

We’ve also got video sentiment analysis APIs to work with: you don’t need to build the solution from scratch, since it’s a plug-and-play kind of thing.

Great, right?

Well, it’s complicated.

Ain’t feeling it: sentiment analysis challenges

As I’m sure you have realized already, sentiment analysis for video has little to do with actual emotion detection.

It is certainly useful for telling the intended tone of the content, but it lacks the nuance that comes with identifying emotions. Yes, it is miles better than an AI analyzing audio and waiting for somebody to yell some zingers as they witness the tackle of the generation.

But it’s not an emotion analysis.

So, how has the community approached emotion detection with technology?

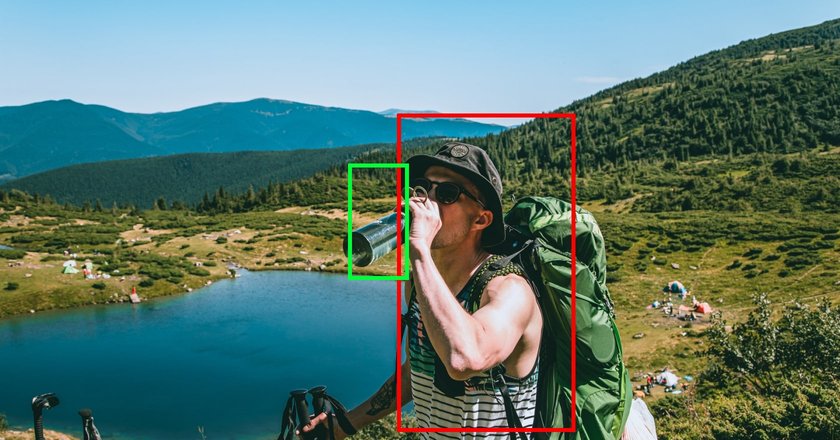

Facial sentiment analysis

Facial features can tell us a lot about a person’s emotional state. For humans, expressions are a primary non-verbal way of communicating with each other, so we can try to analyze them to enable the machine to detect emotions.

Here’s how the researchers went about it.

They built a system that identifies emotions by tracking the elements of an expression, such as changes in lip height or the angle of the eyebrows.
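Here is a rough idea of what such geometric features look like in code. The sketch assumes facial landmark coordinates from some face tracker. The point names and the pixel-coordinate convention are assumptions, not details of the research described here. Lip height is a distance, and eyebrow angle is a slope. Computing both frame by frame gives the kind of time series such a system would track.

```python
import math

Point = tuple[float, float]  # (x, y) in image pixels; y grows downward


def lip_height(upper_lip: Point, lower_lip: Point) -> float:
    """Euclidean distance between the upper- and lower-lip midpoints."""
    return math.dist(upper_lip, lower_lip)


def eyebrow_angle(inner: Point, outer: Point) -> float:
    """Angle of the eyebrow line against the horizontal, in degrees.

    Positive means the outer end sits higher in the image than the inner
    end. Using abs(dx) makes left and right eyebrows comparable.
    """
    dx = outer[0] - inner[0]
    dy = inner[1] - outer[1]  # flip sign because image y grows downward
    return math.degrees(math.atan2(dy, abs(dx)))


print(lip_height((50.0, 80.0), (50.0, 92.0)))  # 12.0
```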

But this method of facial sentiment analysis has not proven very effective: facial parts lack clear-cut boundaries, which makes it harder for a computer to analyze them properly.

So, the researchers went a bit deeper.

Facial muscles

Another method of facial sentiment analysis focuses on having the machine identify the facial muscles and track the movements of those muscles in order to predict a certain emotion.

The primary muscles that the software keeps track of are:

- Orbicularis Oris around the lips;

- Venter Frontalis on the forehead;

- Buccinator, Zygomaticus, and Anguli Oris around the mouth.

The system then tracks the direction and the average length of muscle contractions, along with the velocity at which the contractions occur when a person expresses an emotion.
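The three quantities in that paragraph (direction, length, and velocity) can be sketched for a single contraction. As a simplifying assumption, the muscle’s movement is approximated by one tracked skin point over consecutive frames. `contraction_features` is a hypothetical helper. Averaging its `length` over several contractions would give the average length mentioned above.

```python
import math


def contraction_features(track: list[tuple[float, float]], fps: float) -> dict[str, float]:
    """Summarize one contraction from a tracked point's (x, y) positions.

    Assumes track[0] is the resting position and that the contraction
    peaks at the first frame farthest from rest.
    """
    if len(track) < 2:
        raise ValueError("need at least two frames")
    rest = track[0]
    distances = [math.dist(rest, p) for p in track]
    peak = max(range(len(track)), key=distances.__getitem__)
    dx = track[peak][0] - rest[0]
    dy = track[peak][1] - rest[1]
    length = distances[peak]
    duration = peak / fps  # seconds from rest to peak
    return {
        "direction_deg": math.degrees(math.atan2(dy, dx)),  # image coordinates
        "length": length,  # pixels
        "velocity": length / duration if duration > 0 else 0.0,  # pixels per second
    }


features = contraction_features([(0, 0), (3, 4), (6, 8), (6, 8)], fps=2.0)
# length 10.0, velocity 10.0, direction about 53.1 degrees
```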


That’s the data gathering part. Now, let’s see how the machine analyzes that data.

Expression recognition

To work with simple facial expressions, the researchers analyze the changing velocities of muscle movement. The machine uses changes in velocity to recognize the movement patterns and associate them with facial expression elements.
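Here is one way to read “changes in velocity” in code. This minimal sketch computes the frame-to-frame speed of a tracked point and marks the stretches where it moves faster than a threshold. The function name, the threshold, and the single-point input are all assumptions. The step that associates each segment with an expression element is left out.

```python
import math


def movement_segments(track: list[tuple[float, float]], fps: float,
                      threshold: float) -> list[tuple[int, int]]:
    """Find frame ranges where a tracked point moves faster than `threshold`.

    Speed is in pixels per second between consecutive frames; each returned
    (start, end) pair is inclusive of both frame indices.
    """
    speeds = [math.dist(a, b) * fps for a, b in zip(track, track[1:])]
    segments, start = [], None
    for i, speed in enumerate(speeds):
        if speed > threshold and start is None:
            start = i  # movement begins between frame i and frame i + 1
        elif speed <= threshold and start is not None:
            segments.append((start, i))  # frame i is the last moving frame
            start = None
    if start is not None:  # still moving at the final frame
        segments.append((start, len(speeds)))
    return segments


track = [(0, 0), (0, 0), (0, 5), (0, 10), (0, 10), (0, 10)]
print(movement_segments(track, fps=1.0, threshold=1.0))  # [(1, 3)]
```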

The algorithm can catch changing facial expressions and then interpret them as emotions, but the results may be hindered because of the absence of stable facial points.

Basically, it tracks the velocity and length of muscle contractions against other moving muscles.

No bueno.

Neural networks

Then came the neural networks.

Most of them analyze faces in static images, trying to associate facial expressions with particular emotions.

But there is another problem: with some of the more subtle emotions, people barely move their facial muscles. A person might grin just a bit or quickly twitch an eyebrow, and such movements are much harder to tell apart.

So where does that leave video sentiment analysis and emotion detection?

As we have established, we’ve got a bit of a problem here in terms of making technology understand sentiment and emotions.

Recent developments in this area build on Plutchik’s theory of emotion and aim to detect 8 basic emotions:

- Joy vs sadness;

- Trust vs disgust;

- Fear vs anger;

- Anticipation vs surprise.
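The eight emotions and their opposing pairs fit in a small data structure. This sketch is illustrative only. `Emotion`, `PAIRS`, `OPPOSITE`, `dominant`, and `axis_scores` are hypothetical names, and the sketch assumes some classifier already outputs a score in [0, 1] for each basic emotion. Collapsing each pair into one number gives four axes that each run from -1 to 1.

```python
from enum import Enum


class Emotion(Enum):
    JOY = "joy"
    SADNESS = "sadness"
    TRUST = "trust"
    DISGUST = "disgust"
    FEAR = "fear"
    ANGER = "anger"
    ANTICIPATION = "anticipation"
    SURPRISE = "surprise"


# The four opposing pairs of basic emotions.
PAIRS = [
    (Emotion.JOY, Emotion.SADNESS),
    (Emotion.TRUST, Emotion.DISGUST),
    (Emotion.FEAR, Emotion.ANGER),
    (Emotion.ANTICIPATION, Emotion.SURPRISE),
]
OPPOSITE = {a: b for a, b in PAIRS} | {b: a for a, b in PAIRS}


def dominant(scores: dict[Emotion, float]) -> Emotion:
    """Return the highest-scoring basic emotion."""
    return max(scores, key=scores.get)


def axis_scores(scores: dict[Emotion, float]) -> dict[str, float]:
    """Collapse each pair into one number: first emotion minus second.

    Missing emotions count as 0, so each axis stays within [-1, 1].
    """
    return {
        f"{a.value}/{b.value}": scores.get(a, 0.0) - scores.get(b, 0.0)
        for a, b in PAIRS
    }


scores = {Emotion.JOY: 0.7, Emotion.SADNESS: 0.1, Emotion.FEAR: 0.2}
print(dominant(scores))  # Emotion.JOY
```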

Which means that, for now, we can forget about complex emotions and tonalities.

But even so, the recognition techniques mentioned here all have issues with reference points.

People’s faces are complex things with constantly moving parts, which makes it hard to track the features of that movement.


Let’s say we analyze the recording of a call on Zoom. We can get a frame of a person’s face twisted a bit, but we won’t be able to tell whether the person is expressing an emotion or just making faces.

Maybe they’re bored and all.

We need a reference point: a baseline emotional state against which we can track deviations and categorize them.

Contextual analysis can help with that. We can study the way a person expresses emotions in a regular environment and then analyze their emotions relative to that regular behavior.

That can help us tell regular laughter from, let’s say, hysterical laughter.
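Here is a minimal sketch of that baseline idea. It assumes we can measure some per-frame feature for a person in a regular setting, such as a hypothetical laughter-intensity score. The baseline is summarized as a mean and standard deviation, and new values that fall far outside it are flagged. The two-standard-deviation threshold is an arbitrary choice for illustration.

```python
import math
import statistics


def baseline(samples: list[float]) -> tuple[float, float]:
    """Mean and sample standard deviation of a feature in a regular setting."""
    return statistics.fmean(samples), statistics.stdev(samples)


def deviation(value: float, base: tuple[float, float]) -> float:
    """How many standard deviations `value` sits from the person's baseline."""
    mean, std = base
    if std == 0:  # a perfectly flat baseline: any change is an infinite jump
        return 0.0 if value == mean else math.copysign(math.inf, value - mean)
    return (value - mean) / std


def flag(value: float, base: tuple[float, float], z_threshold: float = 2.0) -> bool:
    """True if `value` is unusual for this particular person."""
    return abs(deviation(value, base)) > z_threshold


base = baseline([1.0, 2.0, 3.0])  # hypothetical laughter intensity in calm settings
print(flag(5.0, base))  # True: 3 standard deviations above the baseline
```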

Think about the applications of video sentiment analysis, and the problems only multiply.

When it comes to analyzing video content, whether sporting events, video call recordings, or movies, the first question is what we need video sentiment analysis for.

For instance, we can use sentiment to recommend the content to viewers based on the desired mood. But then, we would need to analyze not only the faces of cast members but also the coloring of the movie, the plot structure, dialogue, score, and everything else that contributes to the film’s overall vibe.
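One common way to combine such signals is late fusion: score each modality separately, then take a weighted average. The sketch assumes each modality already yields a mood score from -1 to 1. The modality names (faces, color, dialogue, and music for the score) and the weights are hypothetical and would need tuning for each use case.

```python
def fuse(scores: dict[str, float], weights: dict[str, float]) -> float:
    """Weighted average of per-modality mood scores, each in [-1, 1].

    Modalities missing from `scores` are skipped and the remaining
    weights are renormalized, so the result stays in [-1, 1].
    """
    used = {m: w for m, w in weights.items() if m in scores}
    total = sum(used.values())
    if total == 0:
        raise ValueError("no weighted modality has a score")
    return sum(scores[m] * w for m, w in used.items()) / total


weights = {"faces": 0.3, "color": 0.1, "dialogue": 0.3, "music": 0.3}  # hypothetical
print(fuse({"faces": 0.4, "dialogue": 0.2, "music": 0.6}, weights))  # about 0.4
```

Renormalizing over the available modalities lets the same function handle clips where, for example, no faces are visible.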

Actually, that’s what we do when picking apart the context of video content. That helps us understand the content on a deeper level and provide automation solutions that deliver consistent results, like:

- Trailer generation;

- Highlights creation;

- Conference call analysis (TBA).

So here we go: supporting each use case means building and rebuilding algorithms to analyze the factors specific to that use case.

And learning to identify complex emotions would be nice.