How we track identical objects with the probabilistic data association approach

Ever since neural networks gained popularity, virtually every engineer has rushed to apply Deep Learning techniques to the tasks facing public safety software. However, we see it this way: neural networks can be unmatched at detection and identification, but they are not as effective at analysis. It is far too hard to build explicit dependencies inside the ‘black boxes’ of neural networks. Thus, in our view, for tracking it is more effective to use measurable and predictable approaches (such as probabilistic data association techniques) to analyze a sequence of frames and the objects in them, along with the objects’ speed and position.

In this article, we do not claim to invent anything new. We gather existing scientific approaches and insights and build a practical implementation of them.

About the task of tracking

Tracking is one of the most common and important challenges in public surveillance. It stems from the need to know where objects are at every moment of the video being analyzed, and classical detection algorithms do not solve this problem.

When we apply an object detection algorithm to the first frame of the analyzed video segment, it determines whether the object is present and where it is in the frame, or simply highlights a region of interest. The task of tracking, in turn, is to find the position of the selected object in subsequent frames.

The main difficulties that tracking algorithms face are occlusions (during an occlusion, the tracker can easily switch to another object or lose the object it needs to track), zooming, object rotation, changing lighting, motion blur, etc. Even though classical algorithms are designed to solve these problems, they quite often fail, because they are difficult to adapt and configure. Neural network-based algorithms, in turn, cope with tracking better but are hard to interpret and control.

That is why we offer a highly interpretable tracker based on analyzing how physically realistic the objects’ movements are.

Classic approaches

Most available tracking algorithms address the problem of tracking a single object in video captured by a static camera. Tracking objects in video shot with a moving camera brings additional difficulties.

There are many tracking algorithms, such as:

- trackers based on correlation filters;

- detection-based trackers (used in the popular dlib library);

- graph-based methods;

- neural network-based trackers, one of the best options we have tried so far for moving cameras, occlusions, and fast movement.

For a more detailed comparison of correlation approaches and trackers based on recurrent neural networks, you can see this article.

Nevertheless, all the trackers mentioned above (except the neural network-based ones) have virtually no way of coping with scaling, occlusions, and object rotation, and even when they do, the results are not always satisfactory. Neural network-based algorithms mostly solve single-object tracking, leaving object interactions and the related possible scenarios aside.

Problem statement

Let’s consider the problem of tracking N objects in a video captured by a moving camera. Let F_1, …, F_T be the sequence of frames under consideration (T frames, so as not to confuse it with the number of objects N). Each frame contains detections D_1, …, D_k, with k ≤ N. The task is to assign each detection an index i ∈ {1, …, N}, that is, an object id.

First, we move from individual detections to short tracklets by preprocessing the existing metadata with a similarity metric. For example, when working with faces, the metric can be the IoU (intersection over union) of detections on frames k and k-1; when working with bodies, it can be the sum of the differences between the coordinates of the corresponding body parts.
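To make the assignment constraint explicit, here is one way to write it, in our own notation rather than the authors’: let T be the number of frames, D_t the set of detections on frame F_t, and ω the labeling we are looking for. A labeling is valid only if no two detections on the same frame receive the same id, which is also why a frame can hold at most N detections:

```latex
\omega : \bigcup_{t=1}^{T} D_t \to \{1, \dots, N\},
\qquad
\omega(d) \neq \omega(d') \quad \forall\, t \in \{1, \dots, T\},\ \forall\, d \neq d' \in D_t,
\qquad
|D_t| \le N .
```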
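A minimal sketch of this preprocessing step, assuming face boxes in (x1, y1, x2, y2) format and a simple greedy matcher between consecutive frames. The function names and the `min_iou=0.5` threshold are hypothetical, chosen for illustration; the authors’ preprocessing may differ. `keypoint_distance` is the body variant of the metric; since lower values mean more similar, a matcher using it would flip the threshold test.

```python
from typing import Dict, List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def keypoint_distance(p: Sequence[Tuple[float, float]],
                      q: Sequence[Tuple[float, float]]) -> float:
    """Sum of absolute coordinate differences between corresponding body keypoints."""
    return sum(abs(px - qx) + abs(py - qy) for (px, py), (qx, qy) in zip(p, q))


def build_tracklets(frames: List[List[Box]],
                    min_iou: float = 0.5) -> List[List[Tuple[int, int]]]:
    """Greedily chain boxes on consecutive frames into short tracklets.

    Returns each tracklet as a list of (frame_index, detection_index) pairs.
    """
    tracklets: List[List[Tuple[int, int]]] = []
    ends: Dict[int, int] = {}  # detection index on previous frame -> tracklet index
    for t, boxes in enumerate(frames):
        prev = frames[t - 1] if t > 0 else []
        # Score every (previous, current) pair and take the best matches first.
        pairs = sorted(((iou(prev[i], boxes[j]), i, j)
                        for i in range(len(prev)) for j in range(len(boxes))),
                       reverse=True)
        used_prev, used_cur, new_ends = set(), set(), {}
        for score, i, j in pairs:
            if score < min_iou:
                break  # remaining pairs are even less similar
            if i in used_prev or j in used_cur:
                continue
            tracklets[ends[i]].append((t, j))
            new_ends[j] = ends[i]
            used_prev.add(i)
            used_cur.add(j)
        # Detections without a sufficiently similar predecessor start new tracklets.
        for j in range(len(boxes)):
            if j not in used_cur:
                tracklets.append([(t, j)])
                new_ends[j] = len(tracklets) - 1
        ends = new_ends
    return tracklets
```

Greedy matching keeps the sketch short; a Hungarian assignment would be the usual replacement when many boxes overlap.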

Auxiliary visualization

Before describing our algorithm, let’s look at the auxiliary visualization we introduced to make testing, debugging, and performance analysis easier. Its purpose is to bring the time dimension into the picture.

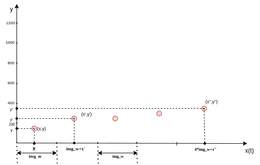

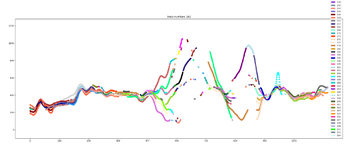

To do this, we plot detections as follows: the OY axis shows the objects’ y coordinates, and the OX axis shows their x coordinates shifted according to the frame number. Thus, an object with coordinates (x, y) on the i-th frame is drawn at the point (i * img_w + x, y), where img_w is the image width.
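The mapping above is a one-liner per point; this sketch groups the mapped points by an id so that each track can be drawn as its own polyline. The input tuple layout and the name `timeline_tracks` are assumptions for illustration; before association, the id could simply be a tracklet index.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def timeline_tracks(detections: List[Tuple[int, int, float, float]],
                    img_w: int) -> Dict[int, List[Tuple[float, float]]]:
    """Unroll detections along the time axis, grouped by track id.

    detections: (track_id, frame_index, x, y) tuples.
    A detection at (x, y) on frame i is placed at (i * img_w + x, y), so every
    frame occupies its own horizontal strip of width img_w.
    """
    tracks: Dict[int, List[Tuple[float, float]]] = defaultdict(list)
    for track_id, frame, x, y in sorted(detections, key=lambda d: d[1]):
        tracks[track_id].append((frame * img_w + x, y))
    return dict(tracks)


print(timeline_tracks([(1, 0, 100.0, 50.0), (1, 1, 110.0, 52.0), (2, 0, 300.0, 80.0)], img_w=640))
# {1: [(100.0, 50.0), (750.0, 52.0)], 2: [(300.0, 80.0)]}
```

Each point list can then be handed to any plotting library, for example `plt.plot(*zip(*pts))` in matplotlib.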

To improve the quality of any tracking algorithm on video shot with a moving camera, we recommend using EvenVizion, our component for transforming coordinates into a fixed coordinate system.
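EvenVizion’s own interface is not described here, so the following is only a sketch under one assumption: that the stabilization step yields, for every frame, a 3 x 3 homography `H` mapping that frame’s pixel coordinates into the fixed coordinate system. Applying it to detection points is then a standard projective transform; `to_fixed_coords` is a hypothetical helper name.

```python
import numpy as np


def to_fixed_coords(points, H: np.ndarray) -> np.ndarray:
    """Map N x 2 pixel coordinates through a 3 x 3 homography H.

    H is assumed to map the current frame into the fixed coordinate system.
    """
    pts = np.asarray(points, dtype=float).reshape(-1, 2)
    homog = np.hstack([pts, np.ones((len(pts), 1))])  # (x, y) -> (x, y, 1)
    mapped = homog @ np.asarray(H, dtype=float).T     # apply H to every point
    return mapped[:, :2] / mapped[:, 2:3]             # divide by w to return to 2D
```

OpenCV’s `cv2.perspectiveTransform` performs the same operation on float32 arrays of shape (N, 1, 2), if OpenCV is already a dependency.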

Once the coordinates are fixed, we face the classical multi-object tracking task. We find data association-based methods especially attractive for it.

This gives us a preliminary initialization, the representation we will continue to work with.

We were inspired by the article describing Markov chain Monte Carlo data association. For fairly simple cases, we are releasing a slightly shortened version. If you are interested in the full version, feel free to contact us at oss@aihunters.com for more information.
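To give a feel for the idea, here is a toy illustration of MCMC data association over tracklets. It is not AcurusTrack’s implementation, and every name and parameter in it (`log_posterior`, `mcmc_associate`, `sigma`, `track_cost`) is hypothetical. A labeling assigns each tracklet a track id; its score rewards constant-velocity continuity between consecutive tracklets of a track, penalizes every extra track, and forbids two tracklets of one track from overlapping in time. A Metropolis sampler with a single symmetric move (relabel one tracklet) explores labelings; the full MCMCDA formulation uses a richer set of moves, such as birth/death, split/merge, and switch moves.

```python
import math
import random
from typing import Dict, List, Tuple

Tracklet = List[Tuple[int, float, float]]  # (frame, x, y) points, sorted by frame


def _end_velocity(tr: Tracklet) -> Tuple[float, float]:
    """Velocity estimated from the last two points of a tracklet (zero if it has one point)."""
    if len(tr) < 2:
        return 0.0, 0.0
    (f0, x0, y0), (f1, x1, y1) = tr[-2], tr[-1]
    return (x1 - x0) / (f1 - f0), (y1 - y0) / (f1 - f0)


def log_posterior(labels: List[int], tracklets: List[Tracklet],
                  sigma: float = 5.0, track_cost: float = 10.0) -> float:
    """Unnormalized log-probability of a labeling (tracklets sharing a label form one track)."""
    groups: Dict[int, List[Tracklet]] = {}
    for label, tr in zip(labels, tracklets):
        groups.setdefault(label, []).append(tr)
    score = -track_cost * len(groups)  # prior: prefer explaining the data with fewer tracks
    for members in groups.values():
        members = sorted(members, key=lambda tr: tr[0][0])
        for prev, nxt in zip(members, members[1:]):
            gap = nxt[0][0] - prev[-1][0]
            if gap <= 0:
                return -math.inf  # one object cannot be in two places on the same frame
            # Constant-velocity extrapolation from the end of `prev` to the start of `nxt`.
            vx, vy = _end_velocity(prev)
            px, py = prev[-1][1] + vx * gap, prev[-1][2] + vy * gap
            d2 = (nxt[0][1] - px) ** 2 + (nxt[0][2] - py) ** 2
            score -= d2 / (2 * sigma ** 2 * gap)  # Gaussian penalty, looser for longer gaps
    return score


def mcmc_associate(tracklets: List[Tracklet], n_iter: int = 5000,
                   seed: int = 0, **kw) -> List[int]:
    """Metropolis sampler over labelings; returns the best labeling it visited."""
    rng = random.Random(seed)
    m = len(tracklets)
    labels = list(range(m))  # start with every tracklet as its own track
    cur = best = log_posterior(labels, tracklets, **kw)
    best_labels = labels[:]
    for _ in range(n_iter):
        # Symmetric proposal: move one random tracklet to one of m possible labels.
        i, new = rng.randrange(m), rng.randrange(m)
        if new == labels[i]:
            continue
        old, labels[i] = labels[i], new
        prop = log_posterior(labels, tracklets, **kw)
        if prop >= cur or rng.random() < math.exp(prop - cur):
            cur = prop
            if cur > best:
                best, best_labels = cur, labels[:]
        else:
            labels[i] = old  # reject: restore the previous label
    return best_labels
```

For example, a tracklet moving right along y = 0 over frames 0 to 2 and another one starting at x = 5 on frame 5 end up with the same label, while a tracklet far away on frames 0 to 1 keeps its own, because merging it with the first would put one object in two places at once.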

The main advantages of this approach are:

- we embed knowledge about the quality of auxiliary DL components through configurable parameters;

- we work with objects of any nature. We do not use identification, so we are not tied to, for example, people. We can work with objects that look very similar, which classical tracking algorithms handle poorly when occlusions are present;

- we use a likelihood analysis based on the physical interpretation of movement (with the Kalman filter, described here and here);

- the ability to parallelize video processing;

- the ability to run on a CPU once the metadata has been obtained.
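The likelihood analysis mentioned in the list can be illustrated with a standard constant-velocity Kalman filter. This is a minimal sketch, not the authors’ code: it scores how physically plausible a candidate trajectory is by summing the Gaussian log-likelihoods of the filter’s innovations. The function name and the noise parameters `q` and `r` are hypothetical and would need tuning for a given detector and scene.

```python
import numpy as np


def cv_kalman_loglik(track, dt: float = 1.0, q: float = 1.0, r: float = 4.0) -> float:
    """Log-likelihood of a 2D trajectory under a constant-velocity Kalman filter.

    track: sequence of (x, y) positions on consecutive frames.
    q: process-noise intensity (random acceleration), r: measurement-noise variance.
    """
    z_all = np.asarray(track, dtype=float)
    # State is [x, y, vx, vy]; positions advance by velocity * dt each step.
    F = np.array([[1, 0, dt, 0],
                  [0, 1, 0, dt],
                  [0, 0, 1, 0],
                  [0, 0, 0, 1]], dtype=float)
    H = np.array([[1, 0, 0, 0],
                  [0, 1, 0, 0]], dtype=float)  # only the position is observed
    # Discrete white-noise acceleration model for the process noise.
    a, b, c = dt**4 / 4, dt**3 / 2, dt**2
    Q = q * np.array([[a, 0, b, 0],
                      [0, a, 0, b],
                      [b, 0, c, 0],
                      [0, b, 0, c]])
    R = r * np.eye(2)
    x = np.array([z_all[0, 0], z_all[0, 1], 0.0, 0.0])
    P = np.diag([r, r, 100.0, 100.0])  # the initial velocity is unknown
    loglik = 0.0
    for z in z_all[1:]:
        # Predict.
        x = F @ x
        P = F @ P @ F.T + Q
        # Innovation, its covariance, and its Gaussian log-density.
        y = z - H @ x
        S = H @ P @ H.T + R
        _, logdet = np.linalg.slogdet(S)
        loglik -= 0.5 * (y @ np.linalg.solve(S, y) + logdet + 2 * np.log(2 * np.pi))
        # Update.
        K = P @ H.T @ np.linalg.inv(S)
        x = x + K @ y
        P = (np.eye(4) - K @ H) @ P
    return float(loglik)
```

A steady straight-line trajectory scores much higher than one that zigzags between frames, so comparing sums of these values across candidate associations favors the physically realistic ones.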

To summarize, the sequence of steps is:

- First, we run detection on the video;

- Second, we transform the coordinates into a fixed coordinate system using the EvenVizion component;

- Then, we preprocess the detections to extract object metadata;

- Finally, we run the algorithm.

Use cases

Complicated multi-occlusion movement

Medical face masks

Identically-dressed people with faces fully covered

People in identical military uniform

Visitor analytics for physical locations

Feel free to suggest yours.

Possible improvements

We expect that the following improvements may be introduced in upcoming versions of this software:

- Handling cases where an object disappears from the frame for a long time. The current project does not take long-term links into account. This requires identification, but only at specific points.

- Handling complex types of movement by replacing the Kalman filter.

Outro

Demand for computer vision-based public safety solutions is clearly on the rise. The main tasks for such software are detection and tracking, and tracking tends to be the harder of the two because of difficult filming conditions.

The AcurusTrack component is designed to meet this challenge and make multi-object tracking as accurate as possible without relying solely on neural networks.

We achieve this by combining our custom data association model, probabilistic AI, and a physical model of realistic object movement (the kind of movement an object in the real world can actually perform).