Cognitive Mill vs. Amazon Rekognition: End Credits Detection Test Run

End credits detection services comparison

When Amazon Rekognition launched its AWS Media Intelligence features a couple of weeks ago, we at AIHunters got all excited. It was an opportunity to challenge ourselves and benchmark our solution against what that giant of a company offers. We immediately decided to see how Amazon's end credits detection works (strictly for research purposes).

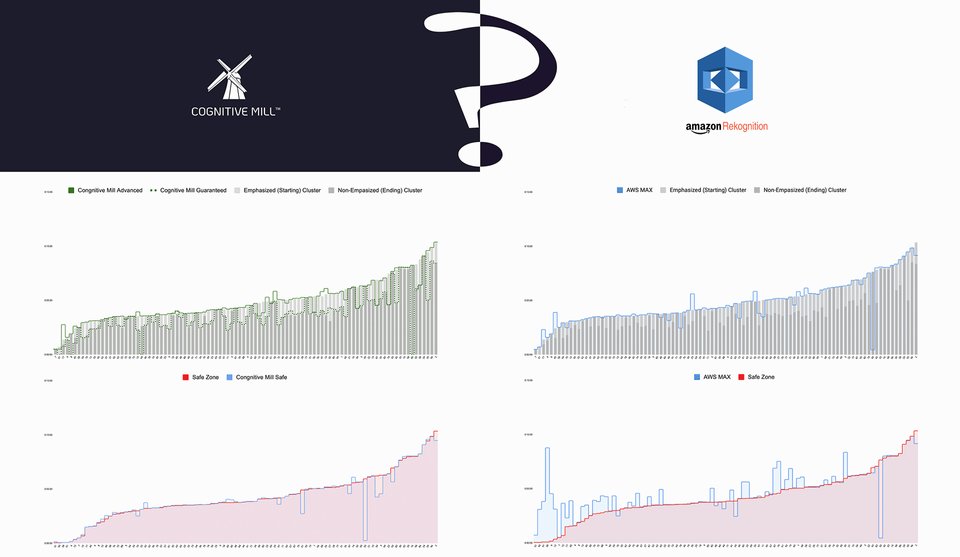

In this blog post, we take a closer look at two AI-based end credits detection products: Amazon Rekognition and Cognitive Mill EndCredits Detection SAAS. We compare which of the two solutions recognizes closing credits in movies more intelligently.

Stage 1. Dataset

First of all, we needed a good, comprehensive movie dataset. It was kindly provided by our partner, Beeline.

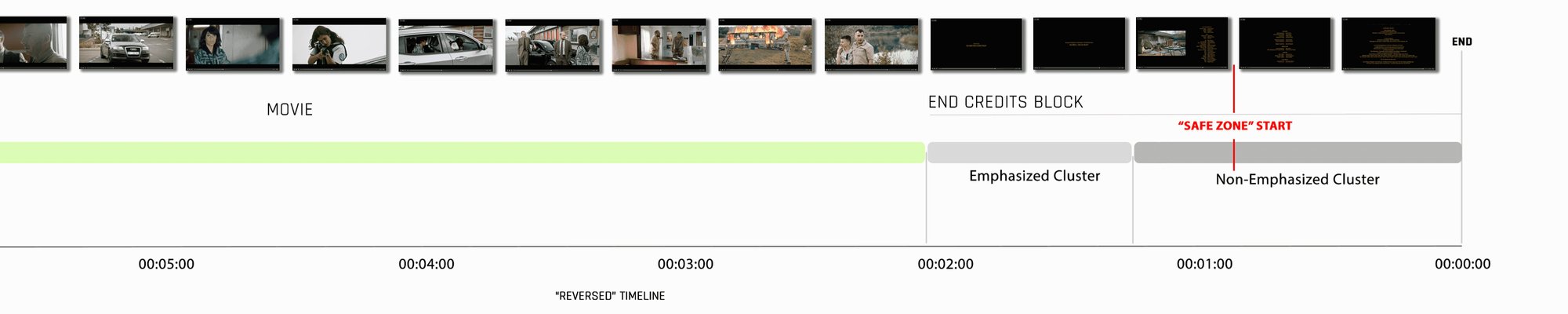

Then we manually marked up 98 different movies and animated films to make the test as accurate as possible. In each movie, we marked the point where the cast's names start rolling, then classified the credits by how interesting they are from a viewer's perspective. In our terms, this gave us emphasized and non-emphasized final credits sequences.


After that, we found the point where the visuals linked to the movie itself end. This allowed us to define when it is safe to 'let a viewer go', that is, to leave the current asset without missing any part of the production. We called this the safe zone.

As the pictures below show, we call credits that are combined with other visuals or styled in a special way "emphasized", and plain white-on-black text "non-emphasized".

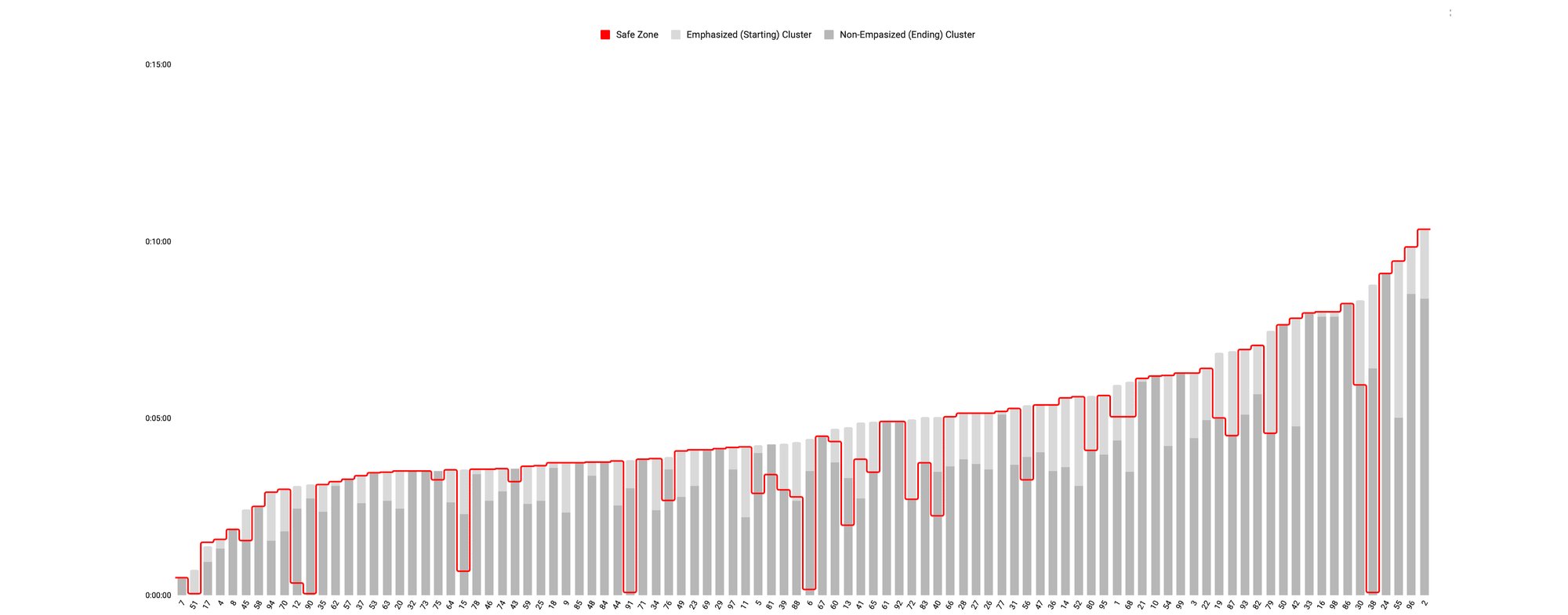

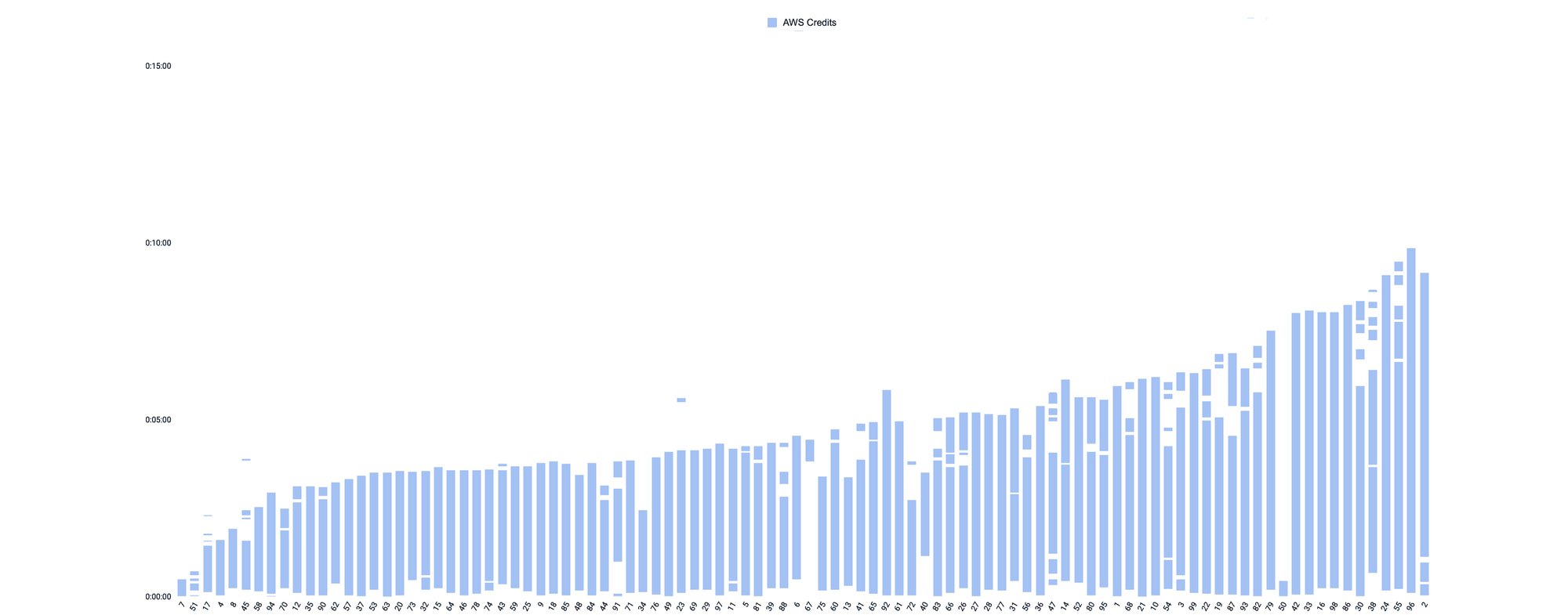

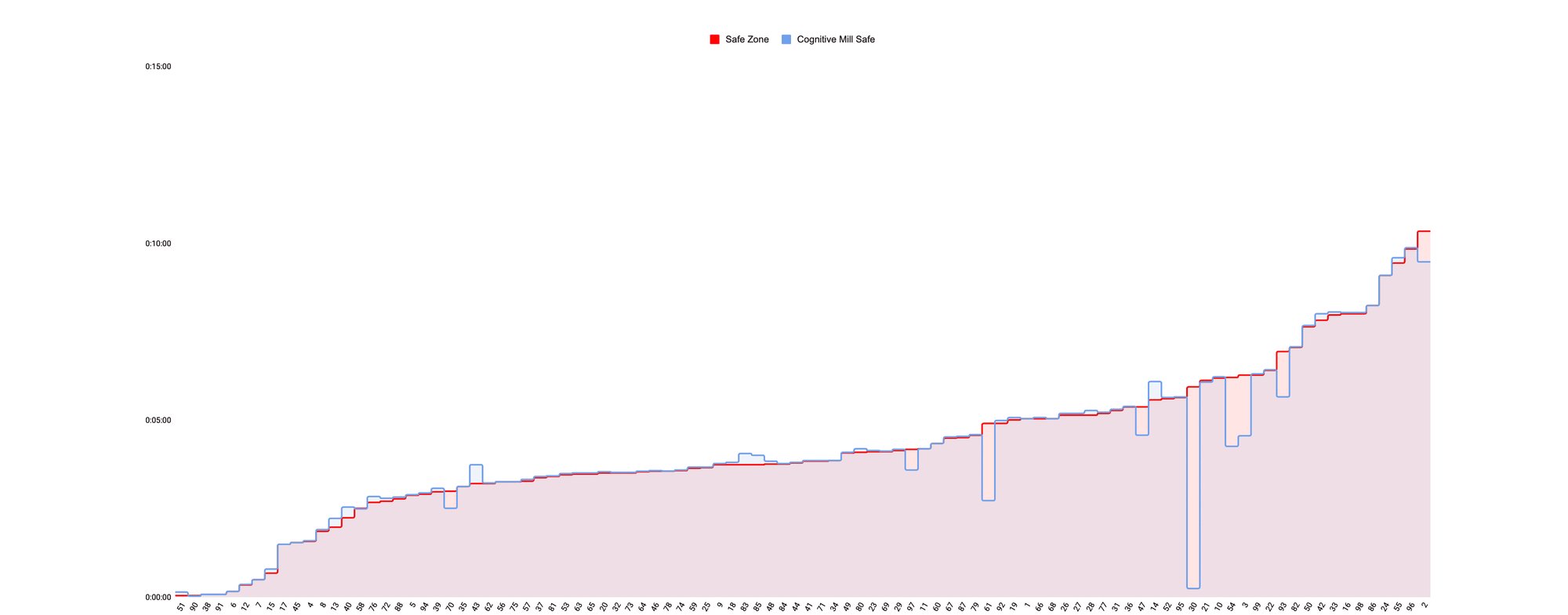

In the picture below, you can see the results of our manual markup, sorted by the length of the closing block. To speed up processing, we only used the last 15 minutes of each movie.

On this graph, you can see that the safe zone is often located below the end credits levels. This happens because blocks of content related to the movie itself are often placed next to the credits sequence, and it would not be advisable to cut those parts out. Examples include Marvel's signature post-credits scenes, bloopers shown alongside the credits, or movie-related visual design in the background.
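To make the markup concrete, here is a minimal sketch of how one movie's manual markup could be stored and mapped into the 15-minute analysis window. The `Markup` class, its field names, and the sample numbers are hypothetical illustrations, not our internal format or real data.

```python
from dataclasses import dataclass

WINDOW_S = 15 * 60  # only the last 15 minutes of each movie are analyzed


@dataclass
class Markup:
    """Hypothetical manual markup for one movie; all times are absolute seconds."""

    title: str
    duration_s: float              # full running time
    credits_start_s: float         # cast names start rolling
    non_emphasized_start_s: float  # plain white-on-black credits begin
    safe_s: float                  # movie-related visuals end

    @property
    def window_start_s(self) -> float:
        """Absolute time at which the analyzed 15-minute window begins."""
        return max(0.0, self.duration_s - WINDOW_S)

    def in_window(self, t: float) -> float:
        """Convert an absolute timestamp into seconds from the window start."""
        return t - self.window_start_s

    @property
    def closing_block_s(self) -> float:
        """Length of the closing block, from the first credit to the end of the movie."""
        return self.duration_s - self.credits_start_s


# Made-up numbers for illustration, not taken from the Beeline dataset.
movies = [
    Markup("B", 7200.0, 6600.0, 6900.0, 7000.0),
    Markup("A", 6000.0, 5700.0, 5760.0, 5800.0),
]
movies.sort(key=lambda m: m.closing_block_s)  # shortest closing block first
for m in movies:
    print(m.title, m.closing_block_s, m.in_window(m.credits_start_s), m.in_window(m.safe_s))
```

Here the safe point lies after the start of the credits in both toy movies, which is the situation the graph describes.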

Stage 2. AWS Rekognition: test

After compiling the dataset, we ran the 98-movie batch (without markup) through the AWS Rekognition service. As a result, we received a set of ragged clusters inside the final credits sequence (see the "AWS Credits" graph). Practically every end credits detection result has gaps between the segments covering the cast list.

```json
{
  "Segments": [
    {
      "Type": "TECHNICAL_CUE",
      "StartTimestampMillis": 672880,
      "EndTimestampMillis": 677400,
      "DurationMillis": 4520,
      "StartTimecodeSMPTE": "00:11:12:22",
      "EndTimecodeSMPTE": "00:11:17:10",
      "DurationSMPTE": "00:00:04:13",
      "TechnicalCueSegment": {
        "Type": "EndCredits",
        "Confidence": 87.40824127197266
      }
    },
    {
      "Type": "TECHNICAL_CUE",
      "StartTimestampMillis": 737160,
      "EndTimestampMillis": 899560,
      "DurationMillis": 162400,
      "StartTimecodeSMPTE": "00:12:17:04",
      "EndTimecodeSMPTE": "00:14:59:14",
      "DurationSMPTE": "00:02:42:10",
      "TechnicalCueSegment": {
        "Type": "EndCredits",
        "Confidence": 98.78292083740234
      }
    }
  ]
}
```

A glance at these numbers makes it obvious that AWS relies on text-oriented detection. Our case, however, is not just a matter of detecting characters on screen; it is a narrower classification task.

We understand that AWS's service is aimed at developers, who will need to build some kind of post-processing on top of those clusters to adapt them to a particular business case. A business, however, is interested in just one or two returned numbers: the bare minimum needed to create action items in a viewing journey. It does not need all of these fragmented clusters to make a single decision.
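As an example of the kind of post-processing this implies, the sketch below parses a response like the one above, keeps only `EndCredits` technical cues, and reports the gaps between consecutive clusters. It assumes the `Segments` list has already been fetched (for instance, from the Rekognition `GetSegmentDetection` operation) and saved as JSON; the helper names are our own and hypothetical.

```python
import json
from typing import List

# The two segments shown above, trimmed to the fields this sketch uses.
RESPONSE = """
{"Segments": [
  {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 672880, "EndTimestampMillis": 677400,
   "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 87.40824127197266}},
  {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 737160, "EndTimestampMillis": 899560,
   "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 98.78292083740234}}
]}
"""


def end_credits_segments(response: dict) -> List[dict]:
    """Return the EndCredits technical cues, sorted by start time."""
    segs = [
        s for s in response.get("Segments", [])
        if s.get("Type") == "TECHNICAL_CUE"
        and s.get("TechnicalCueSegment", {}).get("Type") == "EndCredits"
    ]
    return sorted(segs, key=lambda s: s["StartTimestampMillis"])


def gaps_ms(segments: List[dict]) -> List[int]:
    """Gaps (ms) between the end of one cluster and the start of the next."""
    return [
        nxt["StartTimestampMillis"] - cur["EndTimestampMillis"]
        for cur, nxt in zip(segments, segments[1:])
    ]


segments = end_credits_segments(json.loads(RESPONSE))
print(gaps_ms(segments))  # → [59760]
```

In this example, almost a minute separates the short first cluster from the main credits block.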


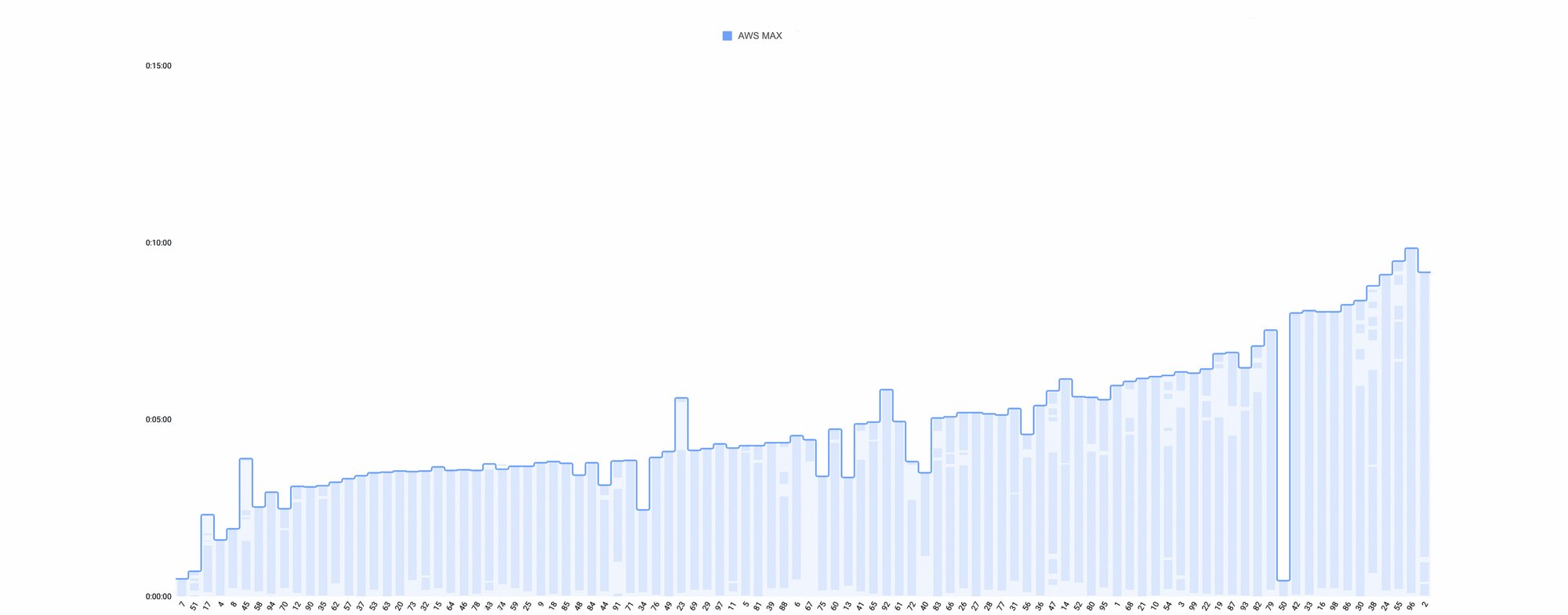

But for the sake of the experiment, let's imagine that we are a business-side (non-technical) client without an in-house data science department. We cannot afford deep adaptation, pre-processing, or post-processing, so we consider only the earliest end credits detection marker. Let's call it AWS MAX. This is the number our hypothetical OTT platform would use after integrating with AWS.
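Under these assumptions, AWS MAX is simply the earliest start time among the returned `EndCredits` segments. A minimal sketch, using the start times of the two segments from the response above (the function name is hypothetical):

```python
from typing import List, Optional


def aws_max_ms(segments: List[dict]) -> Optional[int]:
    """Earliest EndCredits start time in ms (our 'AWS MAX'); None if nothing was detected."""
    starts = [
        s["StartTimestampMillis"]
        for s in segments
        if s.get("Type") == "TECHNICAL_CUE"
        and s.get("TechnicalCueSegment", {}).get("Type") == "EndCredits"
    ]
    return min(starts) if starts else None


# Start times of the two EndCredits segments from the response above.
segments = [
    {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 737160,
     "TechnicalCueSegment": {"Type": "EndCredits"}},
    {"Type": "TECHNICAL_CUE", "StartTimestampMillis": 672880,
     "TechnicalCueSegment": {"Type": "EndCredits"}},
]
marker = aws_max_ms(segments)
print(marker, marker / 1000)  # → 672880 672.88
```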

This gives us 98 time markers, one for each movie in the Beeline dataset.

Now let’s compare those timestamps with our manual markup.

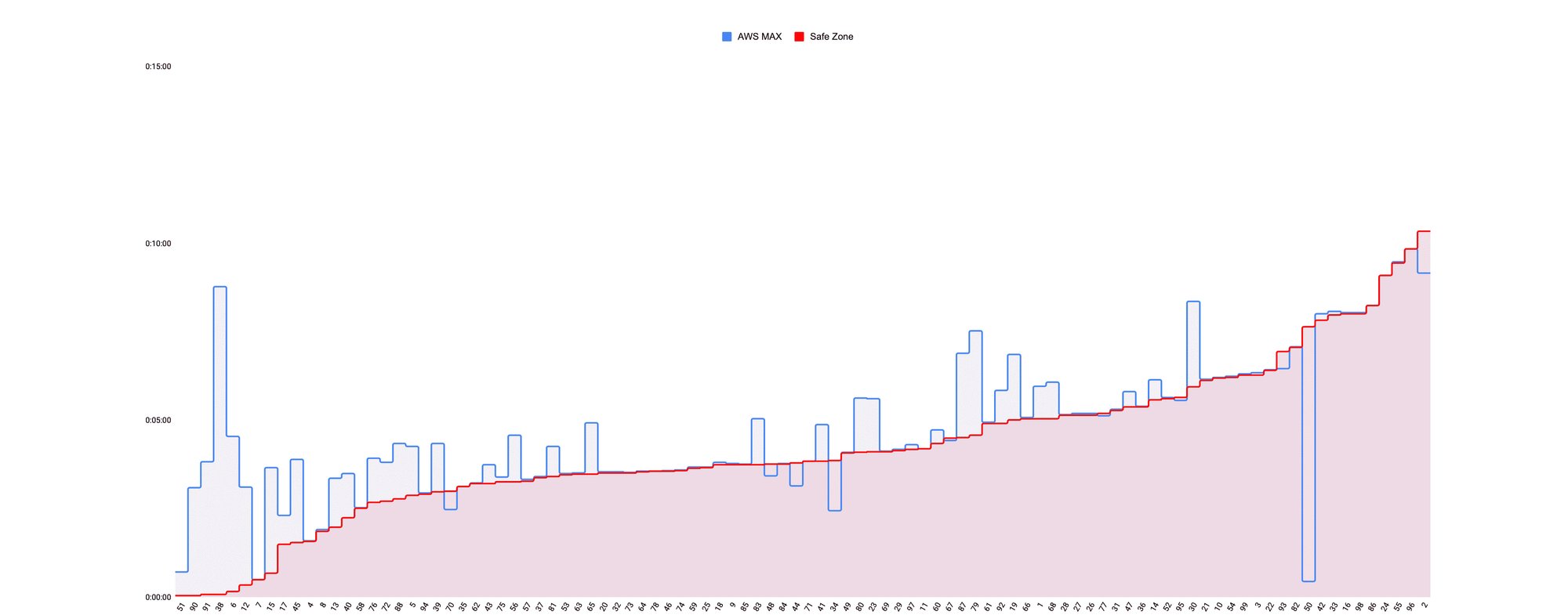

Since it is clear by now that Amazon Rekognition doesn't apply any additional visual or pattern analysis, we expected some issues with the safe zone.

Indeed, the graph below (with the data sorted by the length of the safe zone) shows cases where the safe line lies far from the initial detection (AWS MAX). Keeping in mind that the timeline is reversed, we can tell that a considerable amount of content (all the blue peaks) would be cut from the viewer's experience.
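The cut-off content can be expressed per movie as the distance between the initial detection and the manually marked safe point: whenever AWS MAX comes before the safe point, everything in between would be skipped. A sketch with hypothetical timestamps in seconds (placeholders, not our measurements):

```python
def cut_off_s(first_detection_s: float, safe_s: float) -> float:
    """Seconds of story content skipped if the viewer leaves at the first detection.

    Positive when the detection comes before the safe point, 0 otherwise.
    """
    return max(0.0, safe_s - first_detection_s)


# Hypothetical (AWS MAX, manual safe point) pairs in seconds.
pairs = {"movie_a": (660.0, 720.0), "movie_b": (700.0, 650.0)}
for title, (aws_max, safe) in pairs.items():
    print(title, cut_off_s(aws_max, safe))
```

For `movie_a`, a full minute of content would be lost; for `movie_b`, the detection already lies past the safe point, so nothing is cut.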

Detecting content combined with end credits is an important business-oriented feature, and it is not implemented in the Amazon Rekognition service. That's why, if you care about a seamless customer journey, Amazon's service is not something you would want to employ.

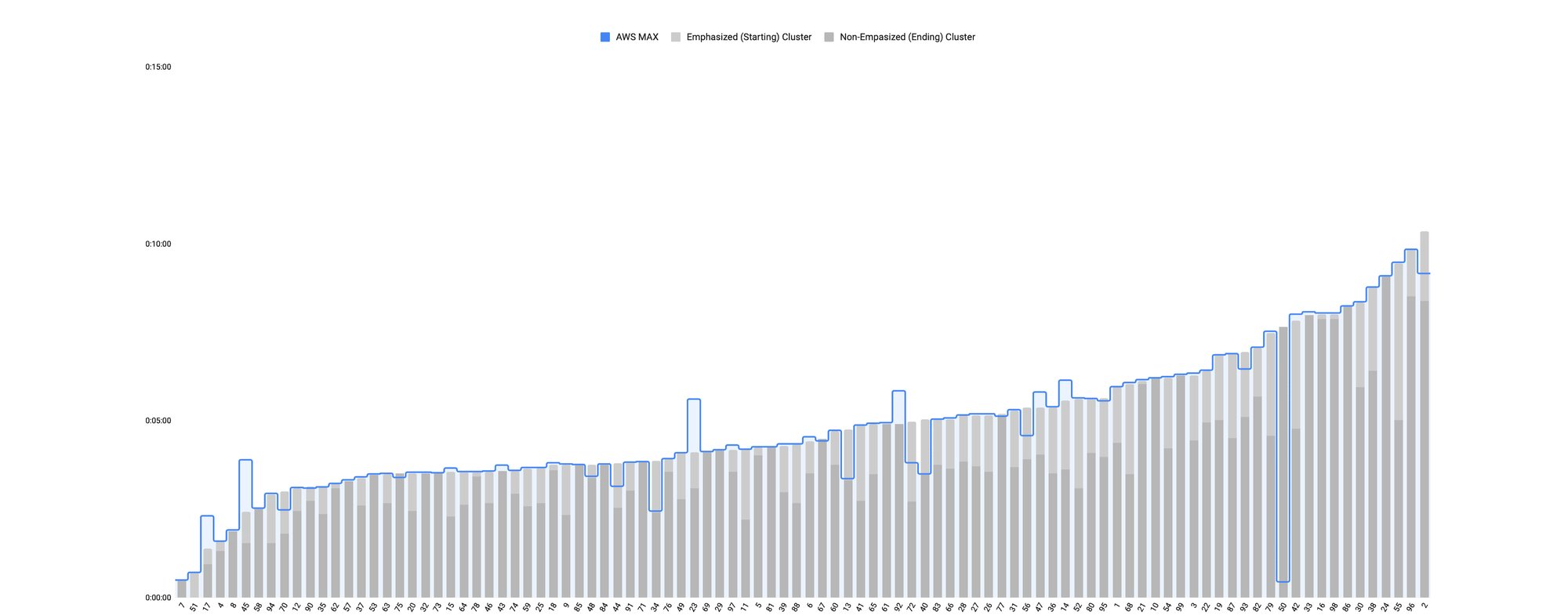

Now let's see how this 'first detection' number corresponds to the actual emphasized and non-emphasized blocks.

Again, the service behaves just as expected: in the majority of cases, it reacts exactly to the beginning of the non-emphasized text blocks.

As stated earlier, in this experiment we are imitating a business that wants an out-of-the-box solution. That's why we did not build any confidence analysis algorithms on top of the processing, which led to several early false positives. These could ruin the viewer experience if we decided to cut off the content located after this initial timestamp.
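For comparison, the simplest confidence analysis a technical team might add is a filter on confidence and duration before taking the earliest marker. The thresholds below (`min_confidence=90.0`, `min_duration_ms=10_000`) are arbitrary assumptions chosen for illustration, not values recommended by AWS or used in our test. With the two segments from the response above, the short 87% cluster is dropped and the earliest marker moves from 672880 ms to 737160 ms.

```python
from typing import List, Optional


def filtered_first_start_ms(
    segments: List[dict],
    min_confidence: float = 90.0,   # illustrative threshold
    min_duration_ms: int = 10_000,  # illustrative threshold
) -> Optional[int]:
    """Earliest EndCredits start after dropping low-confidence or very short clusters."""
    kept = [
        s["StartTimestampMillis"]
        for s in segments
        if s["TechnicalCueSegment"]["Type"] == "EndCredits"
        and s["TechnicalCueSegment"]["Confidence"] >= min_confidence
        and s["EndTimestampMillis"] - s["StartTimestampMillis"] >= min_duration_ms
    ]
    return min(kept) if kept else None


segments = [
    {"StartTimestampMillis": 672880, "EndTimestampMillis": 677400,
     "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 87.40824127197266}},
    {"StartTimestampMillis": 737160, "EndTimestampMillis": 899560,
     "TechnicalCueSegment": {"Type": "EndCredits", "Confidence": 98.78292083740234}},
]
print(filtered_first_start_ms(segments))  # → 737160
```

Whether a dropped cluster was really a false positive can only be judged against a manual markup like ours.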


In fact, in the majority of cases, Amazon Rekognition reacts to the first appearance of any text that looks like a closing credits sequence. For the viewing journey we are all aiming at, metadata must be retrieved from the blocks of non-emphasized end credits (the least interesting part from a viewer's perspective). If you cut the credits at the first piece of text that appears on screen, you might lose something important.

Again, if you can employ computer vision engineers, they can add detection accuracy, block length, and interdependence analysis on top. This way, you can arrive at a more detailed, business-oriented decision.
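One possible form of the block-length and interdependence analysis is to merge clusters separated by short gaps and then pick the longest merged block. A sketch using the two clusters from the response above; the `max_gap_ms` values are assumptions that would need tuning per catalog:

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # (start_ms, end_ms)


def merge_clusters(intervals: List[Interval], max_gap_ms: int) -> List[Interval]:
    """Merge EndCredits clusters whose gap is at most max_gap_ms."""
    merged: List[Interval] = []
    for start, end in sorted(intervals):
        if merged and start - merged[-1][1] <= max_gap_ms:
            # Close enough to the previous block: extend it.
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged


def longest_block(intervals: List[Interval]) -> Interval:
    """The block with the largest duration."""
    return max(intervals, key=lambda iv: iv[1] - iv[0])


clusters = [(672880, 677400), (737160, 899560)]
print(merge_clusters(clusters, max_gap_ms=30_000))  # → [(672880, 677400), (737160, 899560)]
print(merge_clusters(clusters, max_gap_ms=60_000))  # → [(672880, 899560)]
print(longest_block(merge_clusters(clusters, max_gap_ms=30_000)))  # → (737160, 899560)
```

The gap threshold is the key trade-off: a larger value pulls the early cluster into the main block, while a smaller one isolates it as a candidate false positive.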

At the moment, it is far too early to say that Amazon Rekognition can be used to create a smooth viewing journey: you can't guide viewers from one piece of content to another with this service alone.

Stage 3. EndCredits Detection SAAS of Cognitive Mill: test

We concentrate less on retrieving generic metadata from media and more on AI-based products that enable informed business decisions. With such products, you get only useful, business-oriented metadata produced by human-like intelligent systems.

We use a custom pipeline built in-house for automated end credits analysis. Besides text blocks, it analyzes visuals, their dynamics, their relevance, and the subjective level of viewer interest. All of this is consolidated by an AI-based decision block: the 'brain' that processes the input from the 'eyes'. This intelligent block aggregates all the knowledge retrieved by the 'eyes' and aims to restore a realistic pattern and content structure around the final credits sequence. The goal is to serve a business case by giving the customer several ready-to-go time codes.

The Cognitive Mill cloud AI service returns three main time markers suited for easy integration: guaranteed, advanced, and safe.

Guaranteed marks the transition to non-emphasized credits, where the block of 'technical' credits starts.

Advanced aims to identify the emphasized block. It balances the importance of the credits against the text blocks that precede them, serving the business case of preparing a user to leave this piece of content. The accuracy of the advanced mode can be tweaked to suit the client's business case.

Safe is a time marker that indicates the moment within the closing credits block when the viewer can leave this piece of content without harming their experience. Thanks to the analysis described above, no part of the story (after or alongside the credits block) is skipped accidentally.

You can read more about our approach to content analysis and the related decision-making in our blog post "AI-based end credits detection automation to boost viewer engagement".

```json
{
  "time_markers": [
    {
      "type": "guaranteed",
      "frame_no": 9903,
      "content_id": "1"
    },
    {
      "type": "advanced",
      "frame_no": 6757,
      "content_id": "1"
    },
    {
      "type": "safe",
      "frame_no": 21349,
      "content_id": "1"
    }
  ]
}
```
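On the integration side, the `time_markers` list can be turned into a lookup by marker type. The response reports frame numbers, so converting them to seconds requires the frame rate of the analyzed asset. The 25 fps used below is an assumption for illustration, as is the simple `frame_no / fps` conversion; `markers_in_seconds` is a hypothetical helper.

```python
import json

# The time_markers example above, trimmed to one line per marker.
RESPONSE = """
{"time_markers": [
  {"type": "guaranteed", "frame_no": 9903, "content_id": "1"},
  {"type": "advanced", "frame_no": 6757, "content_id": "1"},
  {"type": "safe", "frame_no": 21349, "content_id": "1"}
]}
"""

FPS = 25.0  # assumption: the real frame rate depends on the asset


def markers_in_seconds(response: dict, fps: float = FPS) -> dict:
    """Map marker type ('guaranteed', 'advanced', 'safe') to a time in seconds."""
    return {m["type"]: m["frame_no"] / fps for m in response["time_markers"]}


markers = markers_in_seconds(json.loads(RESPONSE))
print(markers)  # → {'guaranteed': 396.12, 'advanced': 270.28, 'safe': 853.96}
```

In this example, the markers come in the order the descriptions above suggest: Advanced (emphasized block) first, then Guaranteed (non-emphasized credits), then Safe.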

The Cognitive Mill output includes one more unit with additional time markers for more sophisticated configurations. We will not cover it in this post and will focus on the default values instead.

Below, you can see a comparison of the Safe marker values with the markers set by a human.

Our safe zone correlates with the manually created markup. We aim to offer maximum safety to the businesses we integrate with. That's why our system sometimes keeps specific animations that are not part of the story but are still attributed to it, or detects a target block a bit later. But, as you can see, falling out of the safe zone is practically ruled out.

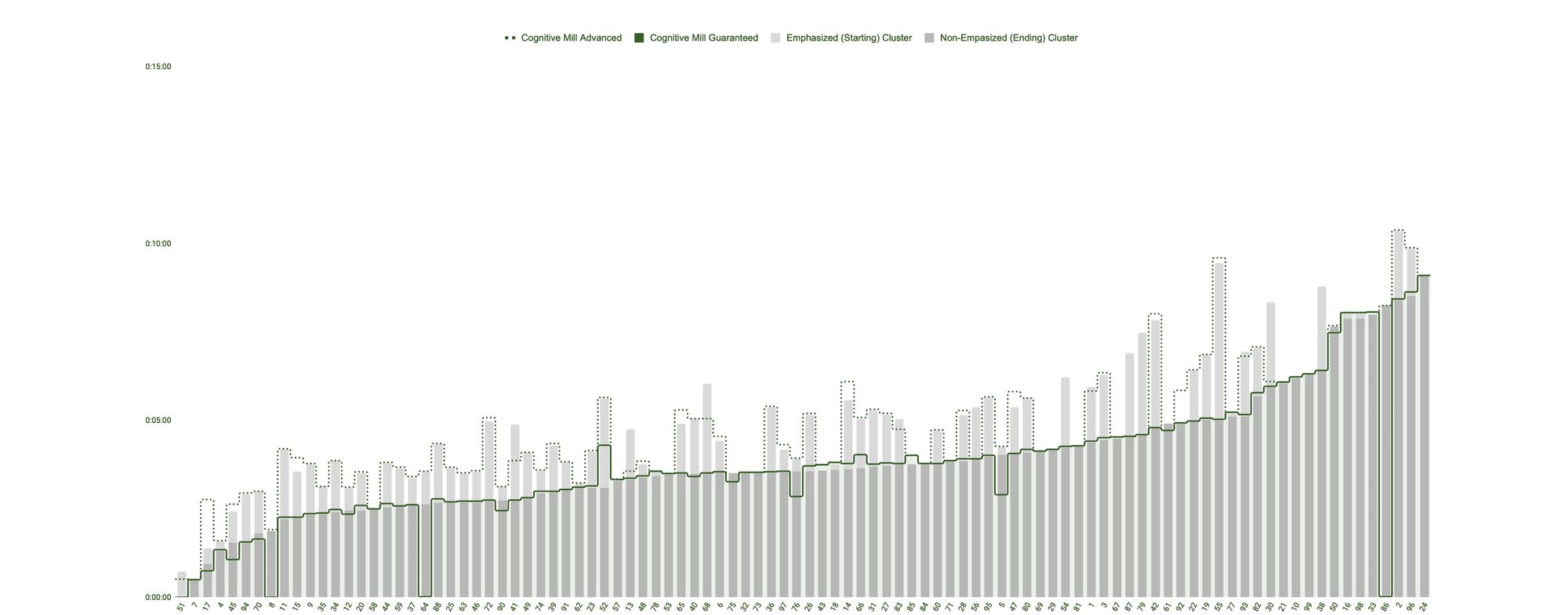
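The check behind "falling out of the safe zone" can be written down directly: a detected Safe marker is early (and would cut story content) if it precedes the manual safe point, and late otherwise. A sketch over hypothetical (detected, manual) pairs in seconds; the numbers are placeholders, not our measurements, and `evaluate_safe` is a hypothetical helper.

```python
from typing import Dict, Tuple


def evaluate_safe(pairs: Dict[str, Tuple[float, float]]) -> dict:
    """List movies with an early Safe marker and average the lateness of the rest."""
    early = [title for title, (det, manual) in pairs.items() if det < manual]
    lateness = [det - manual for det, manual in pairs.values() if det >= manual]
    return {
        "early": early,
        "mean_lateness_s": sum(lateness) / len(lateness) if lateness else 0.0,
    }


# Hypothetical (detected Safe, manual safe point) pairs in seconds.
pairs = {"movie_a": (860.0, 850.0), "movie_b": (720.0, 720.0), "movie_c": (600.0, 610.0)}
print(evaluate_safe(pairs))  # → {'early': ['movie_c'], 'mean_lateness_s': 5.0}
```

Treating early and late detections separately reflects the asymmetry described above: a slightly late marker only delays the redirect, while an early one cuts content.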

Let's now look at how the Cognitive Mill Guaranteed and Advanced values perform on this dataset.

We sorted the movies by the duration of the non-emphasized clusters. You may notice that our Guaranteed value quite accurately marks the transition to non-emphasized credits, which are no longer of interest to viewers. Together with the Safe value, it can serve as the basis for automatically redirecting a user to the next asset.
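As an illustration of such a redirect, a player could show an "Up next" prompt once playback reaches the Guaranteed marker and switch to the next asset once both the Guaranteed and Safe markers have been passed. The `player_action` function, its action names, and this policy are hypothetical; the marker values are the frame numbers from the response above, converted at an assumed 25 fps.

```python
def player_action(position_s: float, guaranteed_s: float, safe_s: float) -> str:
    """Decide what the player should do at the current playback position.

    Hypothetical policy: prompt from the Guaranteed marker on, redirect only
    after both the Guaranteed and Safe markers have been passed.
    """
    redirect_at = max(guaranteed_s, safe_s)
    if position_s >= redirect_at:
        return "redirect_to_next_asset"
    if position_s >= guaranteed_s:
        return "show_up_next_prompt"
    return "keep_playing"


guaranteed_s, safe_s = 9903 / 25, 21349 / 25  # 396.12 s and 853.96 s at an assumed 25 fps
for t in (300.0, 400.0, 900.0):
    print(t, player_action(t, guaranteed_s, safe_s))
```

Redirecting at the later of the two markers is a deliberately conservative choice, in line with staying safe with the customer's content.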

The only closing credits sequence our service missed had a very low resolution; Amazon, on the contrary, did detect it. In general, our approaches differ, so the results vary at some points.


The Advanced value in this particular setup (dotted line) covers the majority of the emphasized blocks. You can see that the percentage of early false positives is minimal.

We'd like to emphasize once again that we can give the Advanced mode more freedom if a customer requires it. In any case, our main strategy is to stay safe when handling a customer's content.

As you may already know, Cognitive Mill is a cloud platform that understands video content the way a human does. It is a composite AI core that can be extended to many use cases in the Media and Entertainment industry. We have collected those use cases year by year, which led to the creation of granular, precise solutions. We build products that support informed business decisions by sorting out scattered media metadata and making it perfectly clear.

Key takeaways:

- We compared Amazon Rekognition's end credits detection functionality with the EndCredits Detection SAAS of Cognitive Mill. For that, we used a movie dataset provided by Beeline, which we marked up manually to make the comparison accurate.

- When using Amazon Rekognition, a considerable amount of content might be cut off from a viewer's experience if you take the initial detection marker.

- This happens because in the majority of cases Amazon’s service reacts to the first appearance of any text that looks like a closing credits sequence.

- Besides, the final credits clusters returned by Amazon appear inconsistent, which means that not all of the credits are detected.

- Cognitive Mill EndCredits Detection SAAS analyzes the visuals, their dynamics, relevancy, and a subjective level of interest. The result is consolidated by the intelligent AI-based decision block that aggregates all the knowledge retrieved from visuals.

- Our platform aims to restore a realistic pattern and content structure around a final credits sequence. You won't miss a post-credits scene, bloopers, or creatively designed leading actors' credits if they are part of the story.

- With Amazon Rekognition, you get detections that you may not know what to do with. With Cognitive Mill, you get just one timestamp for making an informed, viewer-oriented decision.

- In this experiment, we aimed to show that understanding the needs of the Media and Entertainment industry and applying technology in a targeted way bring much more value to a customer than bare detections.

- By creating reasonable action items in the right places, you can facilitate a seamless viewer journey that will create an unmatched experience.

- All that you are going to need is a ready-to-go timestamp delivered by Cognitive Mill EndCredits Detection SAAS.

Do you have any thoughts on this article? We would be delighted to hear them, as your feedback can bring a lot of value to our further product development. Reach out to us at support@cognitivemill.com.