Why deep learning is not enough for video analysis automation

Deep learning (DL) is at the core of modern artificial intelligence: much talked about and often overestimated.

As David Ferrucci, the lead investigator on IBM’s Watson, stated, the impressive results of AI at narrow tasks led people to generalize and mistakenly believe that it can solve other problems just as well. Mistaking narrow AI for general intelligence caused disappointment in DL because it cannot understand what it analyzes. But it was never designed to, and that is the point.

And this is the reason why all these memes appear and travel from one social media network to another.

When we speak about deep learning, we mean deep neural networks (DNNs) with a sufficient number of node layers. The “depth” refers to the number of layers, not to deep understanding, as it may seem at first sight. To be considered “deep”, a neural network must have more than three layers in total: an input layer, two or more hidden layers, and an output layer.

A DNN can be faster and more accurate than a human at tasks that can be described with a finite number of mathematical rules. At the same time, it struggles with tasks that people solve easily and intuitively, based on their general knowledge and understanding of the surrounding world.

In this article, I will show you how we at AIHunters use deep learning in Cognitive Mill™, our AI-based platform for cognitive business automation, and why DL is reliable for some of our daily tasks while others require additional cognitive solutions.

And we are 100% sure that deep learning is not enough to fully automate video analysis.

But first, let’s start with the tasks for which DL is a good and reliable tool.

Deep learning does well

DL proves to be reliable for narrow tasks.

Deep learning finds hidden patterns in videos, images, and audio content. It also maps data to a lower-dimensional space, thus making it easier to operate with.

Neural networks are strong at face recognition, object detection, natural language processing, text recognition, etc.

Let’s take a closer look at these tasks.

1. Mapping the data to a lower-dimensional space

Say you want to analyze a picture with a resolution of 1280x720. It consists of 921,600 pixels, a bit less than 1 million, and raw data of that size is hard to process directly. DL makes it possible to represent a raw input image in a compressed and abstract mathematical way, as vectors.

So a convolutional neural network (CNN) takes the input picture, passes it through the hidden layers, extracts features, and generates a more digestible representation of it, which is easier to process and analyze.
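To put numbers on this, here is a minimal Python sketch. The 512-value descriptor size is an assumption chosen for illustration, and the block averaging is only a stand-in for the idea of shrinking an image into a shorter representation. A real CNN learns its features instead of averaging pixels.

```python
def pixel_count(width, height):
    """Number of pixels in a single-channel image."""
    return width * height


def average_pool(image, block):
    """Shrink a 2D grayscale image (list of rows) by averaging block x block tiles.

    Stand-in for a learned encoder: it only illustrates going from many
    values to few, not what a CNN actually computes.
    """
    height, width = len(image), len(image[0])
    pooled = []
    for top in range(0, height - height % block, block):
        row = []
        for left in range(0, width - width % block, block):
            tile = [
                image[y][x]
                for y in range(top, top + block)
                for x in range(left, left + block)
            ]
            row.append(sum(tile) / len(tile))
        pooled.append(row)
    return pooled


pixels = pixel_count(1280, 720)
EMBEDDING_SIZE = 512  # hypothetical descriptor length, not taken from the article
reduction = pixels / EMBEDDING_SIZE

tiny_image = [[0, 2, 4, 6],
              [2, 4, 6, 8]]
tiny_pooled = average_pool(tiny_image, 2)

print(pixels, reduction, tiny_pooled)
```

With these assumed numbers, the descriptor holds 1,800 times fewer values than the raw frame has pixels.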

2. Voice recognition and natural language processing

Deep learning shows impressive results in voice recognition and natural language processing; take virtual voice assistants, for example.

DL is sufficient for basic speech-to-text transformation and identification of the topic and keywords in the video. This is a narrow task.

And this was the goal of the first version of our text summarization module based on audio analysis, meant to be the first step toward the creation of a pipeline able to perform the full semantic audio-visual analysis of any video content.

But DL is insufficient if you want to make sure the transcribed text contains no inner contradictions and lacks no critical information. With neural networks alone, we are unlikely to perform this type of analysis and get trustworthy results, especially with data as diverse and complex as ours. That is why we are enthusiastic about trying to apply ontology.

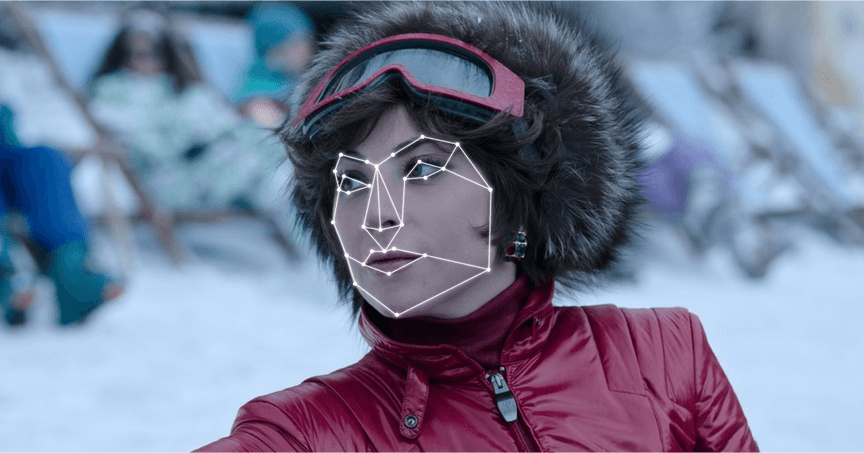

3. Face recognition

Deep neural networks are the best solution for facial recognition. Today’s state-of-the-art models can outperform many people’s ability to remember and recognize faces, especially when hundreds of faces are involved. A pre-trained neural network is more reliable in this regard. It detects each human face in the video and describes the faces as vectors, which makes it possible to reliably recognize any of the faces in different scenes based on these descriptions.

This is what we use in Cognitive Mill™.

Each detected face in a video gets represented as a vector, a descriptor containing unique features necessary to identify the character.
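As an illustration of how such descriptors can be compared, here is a minimal sketch using cosine similarity. The three-value vectors and the 0.8 threshold are made up for the example. Real descriptors are much longer, and the article does not say which distance measure Cognitive Mill™ uses.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two descriptor vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def same_person(a, b, threshold=0.8):
    """Hypothetical decision rule: descriptors that point the same way belong to one face."""
    return cosine_similarity(a, b) >= threshold


# Toy descriptors, invented for the example.
face_scene_1 = [1.0, 0.0, 0.0]
face_scene_2 = [0.9, 0.1, 0.0]   # same character, slightly different shot
other_face = [0.0, 1.0, 0.0]

print(same_person(face_scene_1, face_scene_2))  # True
print(same_person(face_scene_1, other_face))    # False
```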

But that is just a preparatory step for the main action. A custom combination of classical machine learning (ML) and mathematical algorithms then performs the key part:

1. Provide an ID for each main and secondary character.

2. Analyze and decide if the character is main or secondary, and exclude extras and other characters of no importance.

3. Identify and mark a representative frame for each main and secondary character.

4. Find all scenes in the video timeline where the identified main and secondary characters are present.
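The four steps above can be sketched in a few lines of Python. This is only an illustration under loud assumptions, not the Cognitive Mill™ algorithm: the greedy matching, the thresholds, the use of frame share as a measure of importance, and the choice of the first appearance as the representative frame are all hypothetical.

```python
import math
from collections import defaultdict


def cosine(a, b):
    """Cosine similarity between two descriptor vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def assign_ids(detections, threshold=0.8):
    """Step 1: give each detection an ID by matching it against the first
    descriptor seen for every known character (simple greedy matching)."""
    references = []
    labelled = []
    for frame, descriptor in detections:
        for char_id, reference in enumerate(references):
            if cosine(descriptor, reference) >= threshold:
                break
        else:  # no known character matched, so register a new one
            char_id = len(references)
            references.append(descriptor)
        labelled.append((frame, char_id))
    return labelled


def summarize_characters(labelled, total_frames, main_share=0.3, secondary_share=0.1):
    """Steps 2-4: classify by share of frames, drop extras, and record a
    representative frame plus every frame where the character appears."""
    frames_by_id = defaultdict(set)
    for frame, char_id in labelled:
        frames_by_id[char_id].add(frame)

    summary = {}
    for char_id, frames in frames_by_id.items():
        share = len(frames) / total_frames
        if share >= main_share:
            role = "main"
        elif share >= secondary_share:
            role = "secondary"
        else:
            continue  # extras are excluded
        ordered = sorted(frames)
        summary[char_id] = {
            "role": role,
            "representative_frame": ordered[0],  # simplification: first appearance
            "frames": ordered,
        }
    return summary


# Toy input: (frame index, face descriptor) pairs, invented for the example.
detections = [
    (0, [1.0, 0.0, 0.0]), (1, [0.98, 0.1, 0.0]), (2, [0.0, 1.0, 0.0]),
    (3, [0.99, 0.0, 0.05]), (4, [1.0, 0.05, 0.0]), (5, [0.05, 0.97, 0.0]),
    (6, [0.0, 0.0, 1.0]),
]
labelled = assign_ids(detections)
summary = summarize_characters(labelled, total_frames=12)
print(summary)
```

Note that nothing in this part needs a neural network: once the descriptors exist, the rest is counting, thresholds, and bookkeeping.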

DL does only the narrow recognition task (“eyes”), which includes face detection and facial recognition, while the most complicated decision (“brain”) part goes without it.

Is deep learning alone enough for cognitive business automation in the Media and Entertainment industry? Can DL perform quality video analysis in fully automated mode?

The answer is NO. Let me show you why.

Beyond DL’s abilities

Making decisions

The key point is that deep learning is weak at decision making. That is, if we speak about a complex problem and not a narrow, specific task such as facial recognition or object detection.

If we could train a neural network to understand what it is classifying and consider the context, we would end up in an ideal world. But the reality is harsh: neural networks have no idea what they recognize and classify.

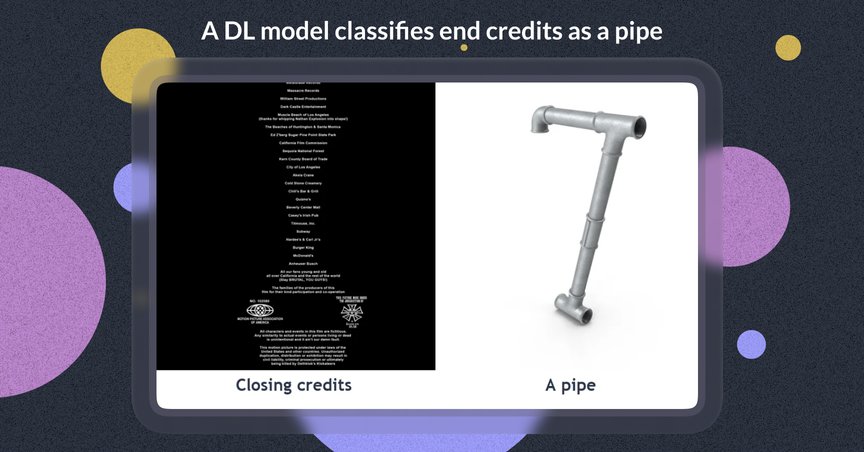

Our AI team tried detecting end credits with our Cognitive Mill™ pipeline, using deep learning alone. The image with closing credits only was passed through a DL model capable of object detection.

And a model that can recognize a cat in the picture classified the text of end credits as a pipe…

Another model identified closing credits as a website with almost 60% probability:

Predicted: [('n06359193', 'web_site', 0.5840247), ('n07565083', 'menu', 0.14356858), ('n04404412', 'television', 0.092642605)].

We use a neural net for object detection rather than a text detection model. The latter wouldn’t solve the problem, because text detection does not equal closing credits detection. The purpose of this pipeline is to identify and mark the parts in the video timeline that can be safely skipped, with the guarantee that viewers won’t miss anything interesting. No text detection or text recognition model can guarantee such safe skipping.

We know the context, we expect closing credits at the end of the movie, and we can recognize the credits. A neural network, however, is limited to its dataset, which cannot cover everything. When a DNN fails to find the content it knows, it may see things that are not there. These limitations lead to ridiculous decisions like the ones shown above.
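One reason for this behavior can be shown with a few lines of Python. The prediction output above looks like the result of a classifier with a fixed list of classes. Such a classifier typically ends with a softmax, which always spreads 100% of the probability over the classes it knows, so there is no “none of the above” answer. The labels below are taken from that output, but the scores are invented for the example.

```python
import math


def softmax(logits):
    """Turn raw class scores into probabilities that always sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(score - peak) for score in logits]
    total = sum(exps)
    return [value / total for value in exps]


LABELS = ["web_site", "menu", "television"]  # closed set: "closing credits" is not an option


def top_prediction(logits, labels=LABELS):
    """Return the most probable known label, however weak the evidence is."""
    probabilities = softmax(logits)
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    return labels[best], probabilities[best]


# Nearly flat, made-up scores: the model has no strong opinion,
# yet it still has to name one of the classes it was trained on.
label, probability = top_prediction([0.2, 0.1, 0.0])
print(label, round(probability, 2))
```

Even with almost identical scores, the function still returns a confident-looking label, which is exactly how end credits end up being called a website.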

In our approach, deep learning is never responsible for making decisions.

As we divide the process into two stages — the Representations stage and the Cognitive decisions stage — DL is used only during the first Representations (aka “eyes”) stage.

It handles feature extraction and, if required, the detection and recognition tasks, thus preparing the data for further cognitive analysis.

And every decision comes from our unique cognitive recipe, which combines mathematical algorithms, computer science, classical computer vision, machine learning, machine perception, and cognitive science to imitate the work of a human brain.
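The split into two stages can be sketched as follows. This is a hypothetical illustration of the architecture only, not the Cognitive Mill™ code: the `credit_like` score, the thresholds, and the rule itself are assumptions. The point is that the decision uses context (position in the timeline and continuity) that a per-frame model does not have.

```python
from dataclasses import dataclass


@dataclass
class FrameFeatures:
    timestamp: float    # seconds from the start of the video
    credit_like: float  # hypothetical per-frame score in [0, 1] from an upstream model


def representations_stage(raw_scores):
    """The "eyes": in a real pipeline DL models would produce these features.
    Here the input is already a list of (timestamp, score) pairs."""
    return [FrameFeatures(timestamp, score) for timestamp, score in raw_scores]


def decisions_stage(features, duration, min_score=0.3, tail_fraction=0.2):
    """The "brain": a rule that uses context. It returns the start of the last
    uninterrupted run of credit-like frames that lies in the tail of the video
    and reaches the final frame, or None if there is no such run."""
    tail_start = duration * (1 - tail_fraction)
    run_start = None
    for frame in sorted(features, key=lambda item: item.timestamp):
        if frame.timestamp >= tail_start and frame.credit_like >= min_score:
            if run_start is None:
                run_start = frame.timestamp
        else:
            run_start = None  # the run was interrupted, so start over
    return run_start


features = representations_stage(
    [(10, 0.5), (50, 0.0), (85, 0.4), (90, 0.6), (95, 0.7)]
)
skip_from = decisions_stage(features, duration=100)
print(skip_from)  # 85
```

The frame at second 10 has a high score too, for example because of opening titles, but the decision stage ignores it because it knows where credits are expected.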

Be like a human

Watching a video, we don’t even notice how many simultaneous processes are going on in our brain. But our neurons are working hard, so we can recognize, recollect, analyze, memorize, and feel various emotions.

Considering this point is critical if we want to automatically generate a movie trailer or a sports highlight reel. This is a psychological aspect of cognitive automation — to know what sounds, scenes, actions, environment, etc., can arouse interest or vivid emotions, excite, surprise, or be just pleasing to the eye. It’s necessary to find the moments, the sequences of scenes that would trigger the human mind and inspire the viewers’ wish to watch the whole movie or game.

This was the major concern of our AI team when creating a pipeline that selects scenes for movie trailer generation.

And know what? Deep learning cannot solve this problem!

Neural networks can operate with objects and representations, but they cannot associate these objects and representations with a person’s emotional state. They do not understand that a rapid movement attracts your attention, nor do they know that an unexpected action causes excitement, surprise, or fear.

Keeping all that in mind, our AI scientists had to find out what other technologies could complement deep learning so that the module could automatically provide the desired output.

Analyzing content, searching for scene characteristics that could trigger the mind’s response, and finding ways to describe these characteristics with numbers: all this is similar to creating a chef’s recipe. All the ingredients are known, but only a custom combination of them gives amazing results. The team turned to classical computer vision, machine learning algorithms, and more. By creatively combining good old mathematical components to imitate human perception, our AI scientists got the output that met their expectations.
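As one example of describing such a characteristic with numbers, rapid movement can be approximated by how much consecutive frames differ. The sketch below uses plain frame differencing, a classical computer vision technique. It is an illustration chosen for this article, and the tiny 2x2 “frames” are invented; the actual Cognitive Mill™ recipe is not described here.

```python
def motion_score(previous_frame, next_frame):
    """Mean absolute pixel difference between two equally sized grayscale frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(previous_frame, next_frame):
        for pixel_a, pixel_b in zip(row_a, row_b):
            total += abs(pixel_a - pixel_b)
            count += 1
    return total / count


def rank_transitions(frames):
    """Score every pair of consecutive frames and sort them, most motion first.
    Each entry is (score, index of the first frame in the pair)."""
    scores = [
        (motion_score(frames[i], frames[i + 1]), i)
        for i in range(len(frames) - 1)
    ]
    return sorted(scores, reverse=True)


# Three toy frames: almost no change, then a sudden jump.
frames = [
    [[0, 0], [0, 0]],
    [[0, 0], [0, 10]],
    [[100, 100], [100, 100]],
]
ranking = rank_transitions(frames)
print(ranking)  # [(97.5, 1), (2.5, 0)]
```

A score like this says nothing about emotions by itself. It becomes useful only when combined with other measurements and with rules about what viewers tend to react to.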

Along with the above, it’s also worth mentioning that deep learning alone cannot imitate human focus, which is something we do imitate in Cognitive Mill™.

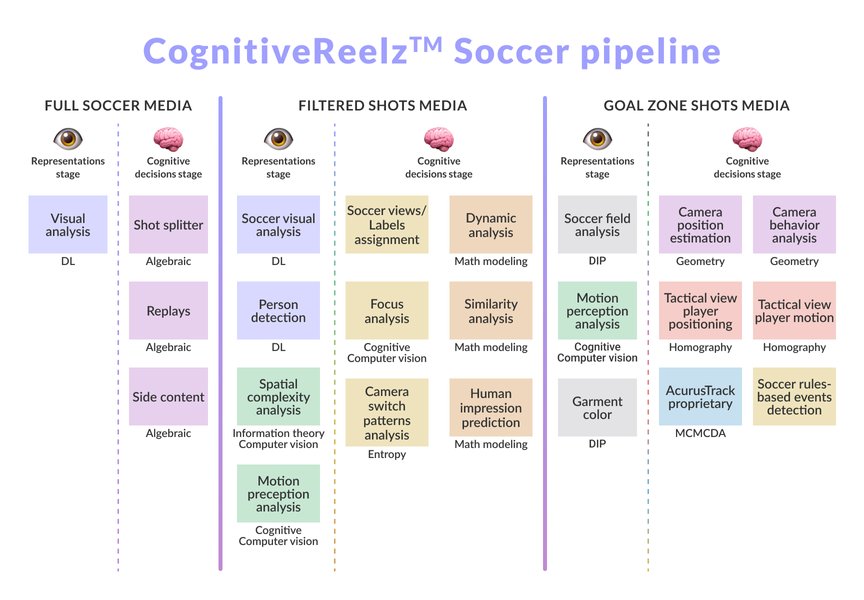

To sum it up, I want to highlight that only one Cognitive Mill™ pipeline is based on DL alone — the first raw attempt to make a text summary. All the rest use deep learning only at the first Representations stage. And here’s an example of one of our sports pipelines.

Just compare the number of components. As you see, a meaningful analysis of a soccer match requires many more technologies combined than deep learning alone. But without DL, the Representations (“eyes”) stage, such analysis would not be possible.

Deep learning is an essential element of a pipeline, but neural networks are not enough to imitate human intelligence.

In conclusion

1. Deep learning is good at representations but isn’t trustworthy in decision-making.

2. Neural networks deal well with narrow tasks that they have been trained for but cannot solve complex problems.

3. Deep learning can operate with objects and representations but can’t consider the context, let alone the psychological aspect.

Deep learning gets stuck dealing with tasks that require an understanding of meaning and context, reasoning, and a common understanding of the world, the core knowledge every human learns from the first moments of life.

DL models can be really good at finding patterns and mapping tons of data, but they don’t understand what lies beneath these patterns, which causes mistakes.

Well-known AI scientists continue to debate the future of deep learning.

Maybe neural networks will level up to System 2, as Yoshua Bengio, one of the “godfathers” of deep learning, believes and is working toward. Or maybe DL will get more accurate and refined in solving its current tasks, while dealing with abstract knowledge will require a new form of machine learning, as Gary Marcus sees it.

Anyway, we at AIHunters keep dreaming big and working smart to develop the decision intelligence of Cognitive Mill™ so that it can reach the human level of perception and understanding, using deep learning but not limited to it. We want cognitive business automation to be not a combination of buzzwords but a technology that optimizes various complex processes. What if we could teach AI reasoning, learning from the environment, memorizing, and updating the information learned?

But our brave plans deserve another story.

This is the AIHunters' way and philosophy. We don’t claim it to be the ultimate truth, but this theory is based on our experience and is experimentally proven. We welcome everyone to try out how it works at run.cognitivemill.com.