What is Cognitive Computing?

Cognitive Computing may well be the most frequently used term on this blog.

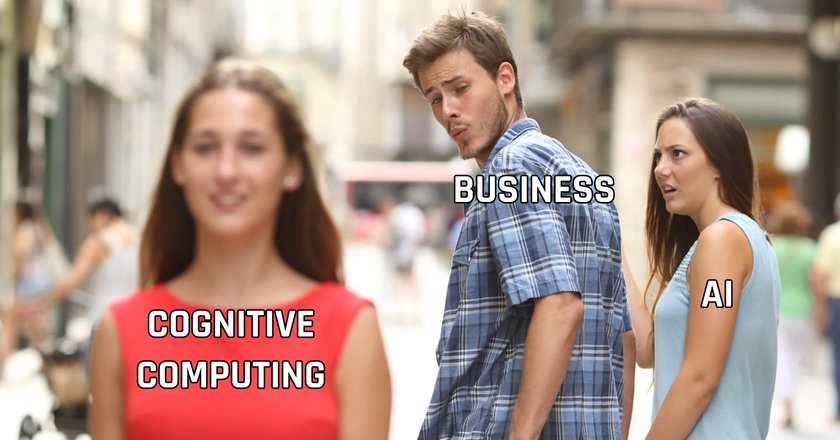

Cognitive Computing is often perceived as the next step for AI: we already have AI, but it isn’t good enough. It’s the new buzzword on everyone’s mind in the industry.

But when it comes to defining the term, explanations rarely go beyond the most general interpretation.

Today, let’s dive a little deeper and try to clear up the confusion around the notion.

What is Cognitive Computing?

The answer is simple.

It’s what helps us do our job.

It’s like the AI we know from movies, TV, and books, but real-life AI is much simpler than that.

Artificial Intelligence has become the victim of its own fame.

Let me explain.

The hype around the term is mostly rooted in the “Artificial” part. Creating something that could think and was not human was a big thing. And it does not matter that much how profound the “Intelligence” part is.

And that excitement carries on as the technology continues advancing, even if the advancement itself isn’t particularly groundbreaking.

So here’s what happened: we got AI that can see oranges and read, yet we keep making movies about omni-intelligent AI. But it isn’t omni-intelligent. It still just sees oranges and reads.

Cognitive Computing, though, comes closer to that description.

But jokes aside, here’s the definition of Cognitive Computing.

It is a concept implemented through a particular set of technologies that aim to simulate human reasoning, which allows it to solve more complex, uncertain, or ambiguous problems.


AI imitates human intelligence by learning from its environment: it trains on data and gets better as it receives more information.

Ambiguity, however, is where Cognitive Computing outperforms conventional AI. When the solution to a problem is uncertain, or there isn’t enough data for a strong conclusion, Cognitive Computing can draw on context to solve it.

Cognitive Computing employs a range of tools to extract as much information as possible. These may include:

- Natural language processing;

- Data analytics;

- Pattern recognition;

- Machine learning;

- Image processing;

- Object recognition.

Essentially, the system is meant to replicate the way the human brain works, but faster. For all the subtlety of our neurons, computers far exceed us in data processing speed.

The applications of Cognitive Computing

You might ask: where can we use Cognitive Computing to our advantage?

The answer is very simple.

Got some (or a lot of) data that you don’t want to go through?

Get Cognitive Computing to do that for you. It will give you accurate insights, make decisions for you, and, more importantly, spare you the tedious work.

So, you can use it almost everywhere. Here are a few of the most prominent examples.

Healthcare

Healthcare workers spend a large portion of their time managing and analyzing patient data to diagnose diseases and plan treatments.

But that analysis is prone to mistakes, just like any other thing that we do, right?

Right.

But we can implement a system powered by Cognitive Computing that analyzes a patient’s health records and test results, along with available medical research, to help with diagnosis.

The system continually improves its pattern recognition capabilities to anticipate problems and generate hypotheses. This way, it can model feasible solutions to potential or emerging problems and support treatment.

So, a Cognitive Computing diagnosis system would make a brilliant assistant for medical workers.

Cybersecurity

Security resembles healthcare to some extent.

In the sense that it’s an eternal arms race.

On one side, attackers compete to build the most sophisticated malware; on the other, security companies build protective measures.

And they just go on and on in a cycle of one-upping each other.

But lately, cybersecurity has gained one more trick.

Cognitive Computing can detect suspicious activity as quickly as possible. By analyzing web requests, it builds a baseline of what normal traffic looks like, then looks for malicious activity that slips past security protocols through standard, encrypted, and anonymous channels.

Apart from analyzing traffic, Cognitive Computing can draw on internet-wide anomaly scores to better distinguish benign traffic from malicious activity.
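To make the baseline idea concrete, here is a minimal Python sketch. It assumes traffic is summarized as request counts per time window and uses a simple z-score test as a stand-in for the far richer models a real Cognitive Computing system would use. The function names, the sample data, and the threshold of 3 standard deviations are all illustrative assumptions.

```python
from statistics import mean, stdev

def build_baseline(normal_counts):
    """Summarize 'normal' traffic as the mean and sample standard deviation
    of request counts per time window (e.g. requests per minute)."""
    return mean(normal_counts), stdev(normal_counts)

def flag_anomalies(counts, baseline, threshold=3.0):
    """Return the indices of windows whose count deviates from the baseline
    by more than `threshold` standard deviations (a simple z-score test)."""
    mu, sigma = baseline
    if sigma == 0:
        # Perfectly flat baseline: any deviation at all is suspicious.
        return [i for i, c in enumerate(counts) if c != mu]
    return [i for i, c in enumerate(counts) if abs(c - mu) / sigma > threshold]

# Hypothetical data: requests per minute during a known-good period,
# followed by newly observed traffic.
normal = [100, 98, 103, 101, 99, 102, 97, 100]
observed = [101, 99, 250, 100]

baseline = build_baseline(normal)
print(flag_anomalies(observed, baseline))  # [2]
```

The burst of 250 requests stands far outside a baseline averaging 100 with a standard deviation of 2, so only that window is flagged. External signals such as internet-wide anomaly scores could be added as further inputs to the same decision.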

Manufacturing

Manufacturers have a lot to worry about in order to keep production running as efficiently as possible.

They have to make sure that their equipment is running, their resources are used effectively, the products are of required quality, and the workers are safe.


And at scale, managing those challenges becomes increasingly difficult.

Now we have a better way of handling those challenges: with the Internet of Things finding its way into production facilities, manufacturers can apply Cognitive Computing to analyze the data collected by IoT devices and use it to their advantage.

For instance, Cognitive Computing can apply predictive maintenance based on historical data, and then combine it with holistic techniques to assess how effective the equipment is.

Then it can look up how similar issues have been resolved in the past and recommend a course of action.

In addition, Cognitive Computing can model equipment behavior in emergency situations, which helps with handling such issues should they arise.
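Measuring “how effective the equipment is” sounds like Overall Equipment Effectiveness (OEE), a standard manufacturing metric; reading the text that way is our assumption. OEE multiplies three ratios: availability (run time over planned time), performance (ideal output time over actual run time), and quality (good units over total units). Here is a minimal Python sketch, with a hypothetical `ShiftData` record standing in for figures collected by IoT devices:

```python
from dataclasses import dataclass

@dataclass
class ShiftData:
    planned_time_min: float      # planned production time, minutes
    run_time_min: float          # time the equipment actually ran, minutes
    ideal_cycle_time_min: float  # ideal time to produce one unit, minutes
    total_count: int             # units produced
    good_count: int              # units that passed quality checks

def oee(s: ShiftData) -> float:
    """Overall Equipment Effectiveness = availability * performance * quality."""
    availability = s.run_time_min / s.planned_time_min
    performance = (s.ideal_cycle_time_min * s.total_count) / s.run_time_min
    quality = s.good_count / s.total_count
    return availability * performance * quality

# Hypothetical 8-hour shift with 80 minutes of downtime.
shift = ShiftData(planned_time_min=480, run_time_min=400,
                  ideal_cycle_time_min=0.5, total_count=700, good_count=665)
print(f"OEE: {oee(shift):.1%}")  # OEE: 69.3%
```

Tracking this figure per machine over time gives a predictive-maintenance model something concrete to watch: a steady decline in availability or performance can hint that a machine is heading for failure.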

Cognitive Computing pros

Now, let’s sum up the benefits you can get with Cognitive Computing instead of sticking to human labor:

- Accurate analysis. Cognitive Computing provides more meaningful insights because, unlike conventional AI, it can work with a wider range of data sources and analyze context.

- Speed. You get human-like analysis, but with the speed of a computer. Well, it’s the computer that does it all, right?

- Scalability. If you have a large number of tasks to handle manually, you have to hire more people to do them. With automation systems powered by Cognitive Computing, scalability comes at a much smaller cost, with no loss of speed.

Cognitive Computing cons

But what will you be paying for all that great stuff Cognitive Computing offers?

- Security. Automation is all about using large amounts of data to facilitate decision-making. To avoid compromising the system, you will have to protect that data more thoroughly.

- Development cycle. Cognitive Computing systems are quite complex and require a longer development cycle in the hands of experienced engineers.

- Adoption rates. The complexity of the technology and its development translates into slower adoption. And because of slower adoption, smaller companies with less budget to spare can’t afford the expensive technology and tend to avoid it, which slows adoption even further.

Cognitive Computing in media and entertainment

But let’s be clear here.

We are not in logistics or healthcare.


Our focus is the media and entertainment industry, which can also benefit a great deal from automation.

You see, when it comes to making content for the media industry, there are two types of work: the creative part, where you make great things, and the tedious part.

Shooting a movie is a creative process: a whole crew of people works on a script, scouts for locations, makes costumes and props, hires the cast, and then shoots the movie.

But when you have a lot of content in your library, making trailers for, say, a hundred titles is no fun.

You just have to sit there and edit for who knows how long.

That’s where Cognitive Computing comes in to help. It analyzes the footage, understands its context, identifies the most important scenes and characters, and then cuts the trailer automatically.

It’s not just a compilation of random shots. The technology grasps the narrative, so the resulting trailers are cohesive.

And all that happens in a matter of seconds.
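As a rough illustration of the final assembly step only, here is a Python sketch. It assumes an upstream analysis has already split the footage into scenes and given each one a hypothetical `importance` score; the greedy selection under a duration budget is our own simplification, not Cognitive Mill’s actual method. Re-sorting the chosen scenes by start time is what keeps the cut in story order.

```python
from dataclasses import dataclass

@dataclass
class Scene:
    start: float       # seconds from the beginning of the footage
    end: float
    importance: float  # hypothetical score from upstream scene analysis

def assemble_trailer(scenes, max_duration):
    """Greedily pick the most important scenes that fit the time budget,
    then return them in chronological order to preserve the narrative."""
    chosen, total = [], 0.0
    for scene in sorted(scenes, key=lambda s: s.importance, reverse=True):
        length = scene.end - scene.start
        if total + length <= max_duration:
            chosen.append(scene)
            total += length
    return sorted(chosen, key=lambda s: s.start)

scenes = [Scene(0, 10, 0.2), Scene(30, 45, 0.9),
          Scene(60, 70, 0.7), Scene(100, 120, 0.8)]
trailer = assemble_trailer(scenes, max_duration=40)
print([(s.start, s.end) for s in trailer])  # [(30, 45), (100, 120)]
```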

Here are a few other examples:

- Highlight generation. Cognitive Computing can automatically cut the best moments from sporting events.

- Smart ad insertion. Knowing the context of the footage, the system can serve better-targeted ads to viewers.

- Credits skip. The technology can tell the movie apart from its opening and closing credits, allowing the viewer to skip past them to the next piece of the story. No post-credits scene is left behind.

- Cast management. Hit pause while watching a movie and get info on everybody on the screen: character names, cast members, and so on. No more guessing. What’s more, Cognitive Computing differentiates main characters from secondary ones by understanding their role in the plot.

- Nudity filtering. Automatically keeps the viewers safe from inappropriate content.

- Vertical crop. A Cognitive Computing solution crops landscape footage to a portrait aspect ratio without losing what matters: the frame stays on the most important part of the scene.
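The geometry behind a vertical crop can be sketched in a few lines of Python. The sketch assumes the subject’s horizontal position (`subject_x`, a hypothetical input) comes from an upstream object detector, keeps the full frame height, and slides a 9:16 window to center on the subject while keeping it inside the frame.

```python
def vertical_crop_window(frame_w, frame_h, subject_x, aspect_w=9, aspect_h=16):
    """Return (left, width) of a full-height portrait crop centered on subject_x.

    The window keeps the full frame height, so its width is
    frame_h * aspect_w / aspect_h, rounded down to whole pixels.
    """
    crop_w = min(frame_w, frame_h * aspect_w // aspect_h)
    left = subject_x - crop_w // 2
    # Clamp so the window never extends past the left or right edge.
    left = max(0, min(left, frame_w - crop_w))
    return left, crop_w

# 1080p landscape frame with the subject on the right-hand side.
print(vertical_crop_window(1920, 1080, 1500))  # (1197, 607)
print(vertical_crop_window(1920, 1080, 1850))  # (1313, 607)
```

In practice, the subject position would likely be smoothed across frames so the virtual camera doesn’t jitter; that step is left out here for brevity.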

Thinking of automating your content production? Reach us at support@cognitivemill.com or use the form below. We will answer all of your questions and see how we can help.