NVIDIA Triton Inference Server for cognitive video analysis

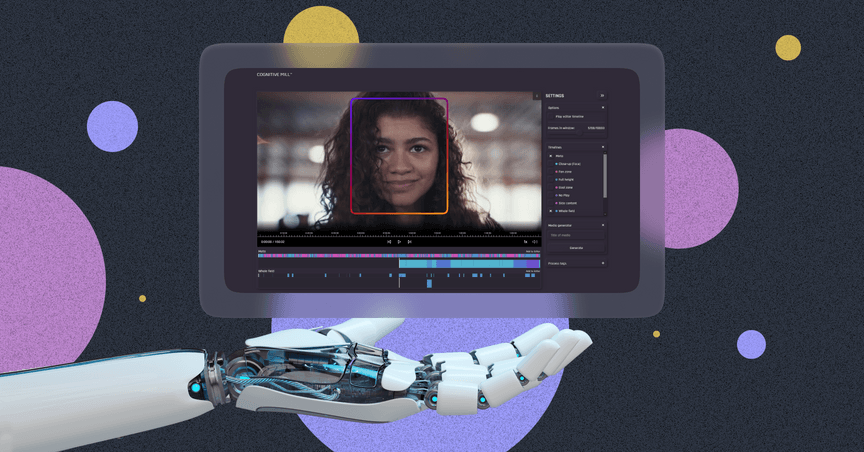

We are AIHunters, and we work in the field of cognitive computing. We created a cognitive cloud platform that can analyze video content with human focus and automatically generate human-like decisions. That is why we often call it a virtual robot.

In a little over a year as a startup, we have had considerable achievements and overcome a number of challenges.

In this article, I’ll focus mainly on the problems we faced and how the NVIDIA Triton Inference Server helped us win at performance and cost-effectiveness.

But first, a few words about who we are.

A few words about us

Our AI scientists used best practices of machine learning, deep learning, and computer vision. But the main focus has always been on cognitive science, probabilistic AI, math modeling, and machine perception. The challenging task of analyzing huge amounts of multi-layer data with high variance required even more. The team found working combinations of the above-mentioned technologies and created unique algorithms that made the machine not only smart but also strong at reasoning and able to make adaptive decisions.

The AI team created more than 50 custom algorithms and components that were implemented in 16 cognitive automation pipelines and innovative products.

We’ve built docker containers for each AI model with Python or C++ code, so each deep neural network (model) lives in an independent module. This makes our cognitive components reusable and gives us the freedom to combine them in different ways to cover various use cases. We orchestrate the containers with Kubernetes.

We use Kafka as a journal to register platform events.

In pre-processing, we use DALI (NVIDIA Data Loading Library), which is an effective tool for DL model training.

For inference, we use Amazon GPUs.

Flexibility and scalability matter and the AIHunters team does everything possible to make sure Cognitive Mill™ has both.

Our challenges

Highly flexible, scalable, and showing an almost human level of understanding, the system is still not perfect. There is always room for optimization.

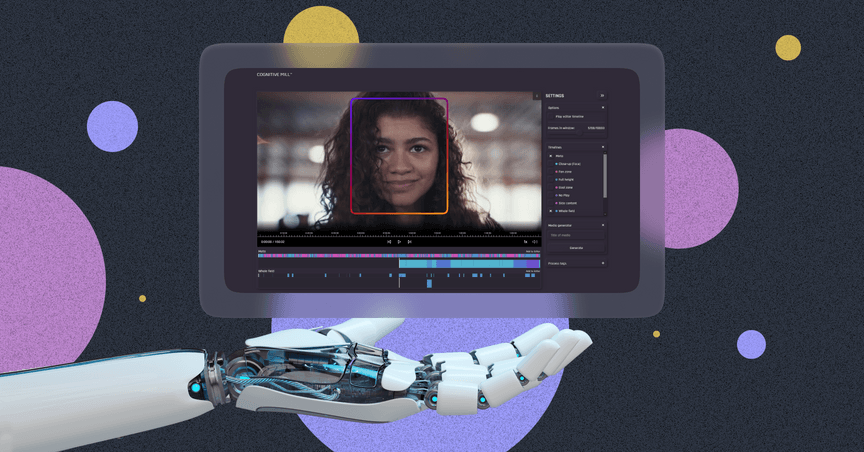

Along the way, we ran into a number of challenges. One was long initialization time. The other was GPU underload.

Long initialization time

Model initialization and loading on GPU took a considerable part of the inference time.

Weight loading is time-consuming. We ran a service that initialized the models anew for every segment, which was especially noticeable when the segments were short: the more segments, the more often initialization took place.

Low GPU utilization

The expensive GPUs experienced underload as they had to wait for the pre-processing to complete before starting to do their job. After the inference, the GPU returned the results and waited for the next batch.

NVIDIA Triton Inference Server Integration

Creating our own solution from scratch in the world of so many ready-made alternatives was like reinventing the wheel. But we needed reliable software tailored for complicated video processing. There weren’t too many options. And we decided on the NVIDIA Triton Inference Server.

Triton is open-source model-serving software for inference and simplified DL model deployment, introduced by NVIDIA, one of the top companies providing software for AI and deep learning.

It supports all major ML and DL backend frameworks such as ONNX, NVIDIA TensorRT, TensorFlow, PyTorch, XGBoost, Python, and others.

For orchestration and scaling, Triton integrates with Kubernetes.

It also allows running multiple instances of the same model, or different models, concurrently on GPUs, which helps maximize throughput and utilization.

But Triton cannot handle video as it is, so we prepare it for Triton by loading, decoding, and resizing it, and then forming batches.
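The resize-and-batch part of that preparation can be sketched as follows. This is a minimal illustration in plain Python, not our production code: the frames are tiny nested lists standing in for decoded video, and `resize_nearest` and `make_batches` are hypothetical helper names.

```python
from typing import Iterable, Iterator, List

# A grayscale frame as rows of pixel values (a stand-in for a decoded video frame).
Frame = List[List[int]]


def resize_nearest(frame: Frame, out_h: int, out_w: int) -> Frame:
    """Resize a frame to out_h x out_w with nearest-neighbour sampling."""
    in_h, in_w = len(frame), len(frame[0])
    return [
        [frame[y * in_h // out_h][x * in_w // out_w] for x in range(out_w)]
        for y in range(out_h)
    ]


def make_batches(frames: Iterable[Frame], batch_size: int) -> Iterator[List[Frame]]:
    """Group frames into fixed-size batches; the last batch may be smaller."""
    batch: List[Frame] = []
    for frame in frames:
        batch.append(frame)
        if len(batch) == batch_size:
            yield batch
            batch = []
    if batch:
        yield batch


# Hypothetical input: five 4x4 "decoded" frames, resized to 2x2 and batched by two.
decoded = [[[i] * 4 for _ in range(4)] for i in range(5)]
resized = (resize_nearest(frame, 2, 2) for frame in decoded)
batches = list(make_batches(resized, batch_size=2))
```

Each element of `batches` is what a pre-processing service would send to the inference server in one request.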

So Triton was a good choice for us to deal with our challenges. The integration process definitely took time and effort.

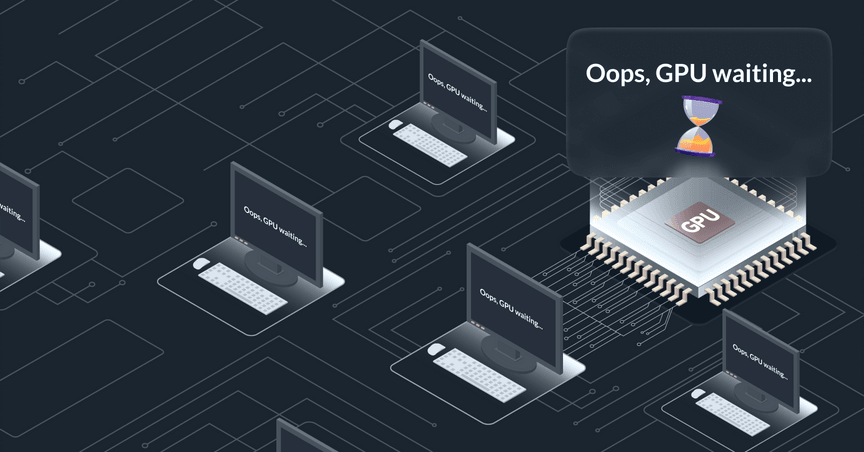

Triton integration affected Cognitive Mill™ in the following ways:

- Architecture. In the platform, Triton changed the way we scaled workloads.

Before Triton, there was a specified number of input segments and a configured number of services to process them.

With Triton, scaling works differently. For the specified number of input segments, the configured number of services is created to pre-process the segments; these services then call Triton and save the features returned in the response. Both the services and Triton are scaled: a given number of services requires a sufficient number of Triton instances.

For example, 4 services require 1 Triton instance for efficient load and performance.

The services call Triton via a load balancer that distributes calls between Triton server instances.

- Code. In the codebase, we didn’t have to make drastic changes. We created a connector and optimized docker images so that they don’t contain extra libraries that are no longer needed.

- Models. Instead of calling the library, the model calls the connector, which in turn receives predictions from the Triton server.

It is also necessary to ensure that the model’s format is supported by Triton, and if not, convert it to one of the supported formats.
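To give an idea of what such a connector does, here is a minimal sketch that talks to Triton over its HTTP/REST inference protocol (`POST /v2/models/<model>/infer`) using only the standard library. It is an illustration, not our actual connector: the host, model name, and tensor names are hypothetical, and the `FP32` datatype is an assumption.

```python
import json
import urllib.request
from typing import List, Sequence


def build_infer_request(base_url: str, model_name: str, input_name: str,
                        shape: Sequence[int], data: Sequence[float],
                        output_name: str) -> urllib.request.Request:
    """Build an inference request for one FP32 input tensor (flattened data)."""
    url = f"{base_url.rstrip('/')}/v2/models/{model_name}/infer"
    body = {
        "inputs": [{
            "name": input_name,
            "shape": list(shape),
            "datatype": "FP32",  # assumption: the model takes float32 input
            "data": list(data),
        }],
        "outputs": [{"name": output_name}],
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_infer_response(payload: bytes, output_name: str) -> List[float]:
    """Extract the data of the named output tensor from the JSON response."""
    response = json.loads(payload)
    for output in response["outputs"]:
        if output["name"] == output_name:
            return output["data"]
    raise KeyError(output_name)


def infer(base_url: str, model_name: str, input_name: str, shape: Sequence[int],
          data: Sequence[float], output_name: str, timeout: float = 10.0) -> List[float]:
    """Send one request to a running server and return the predictions."""
    request = build_infer_request(base_url, model_name, input_name,
                                  shape, data, output_name)
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        return parse_infer_response(reply.read(), output_name)
```

With a server running behind a load balancer, a call could look like `infer("http://triton.example:8000", "credits_detector", "INPUT__0", [1, 3], [0.1, 0.2, 0.3], "OUTPUT__0")`. NVIDIA also ships ready-made client libraries that wrap the same protocol.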

After we deployed Triton with the models in the cloud, the next step was optimization. We decided to switch the backend to TensorRT, which is both a model format and a tool that optimizes the model to make it faster.

The most challenging part of the integration was related to models. Our models had different formats, and not all of them were supported by Triton. To make them compatible, we had to convert them to a supported format.

The conversion requires special attention and quality control because it can be tricky and may alter the model itself. We developed evaluation criteria to measure whether a model conversion was successful. In some cases, there were no ready-made solutions, and our developer team had to write specific model configurations.
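One simple form such a check can take is to run the same input through the original and the converted model and compare the outputs. The sketch below is only an illustration of that idea, not our actual criteria: the metrics (maximum absolute difference and cosine similarity) and the thresholds are assumptions.

```python
import math
from typing import Sequence


def max_abs_diff(a: Sequence[float], b: Sequence[float]) -> float:
    """Largest element-wise absolute difference between two outputs."""
    return max(abs(x - y) for x, y in zip(a, b))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two output vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def conversion_ok(reference: Sequence[float], converted: Sequence[float],
                  atol: float = 1e-3, min_cosine: float = 0.999) -> bool:
    """Accept the converted model if its output stays close to the reference.

    The thresholds are illustrative defaults and should be tuned per model.
    """
    if not reference or len(reference) != len(converted):
        return False
    return (max_abs_diff(reference, converted) <= atol
            and cosine_similarity(reference, converted) >= min_cosine)
```

In practice, a check like this would be run over a representative set of inputs rather than a single one.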

TensorRT is also very sensitive to where the model was compiled: the model should be compiled on exactly the same graphics adapter on which it’s going to be executed; otherwise, it may refuse to work.

How Triton helped us overcome challenges

Cut down model initialization time

Instead of starting a service for each segment, we start one Triton server once. It initializes the models and keeps running. Then other smaller services that execute pre-processing of the segments call the server, get a response, and register the results.

But Triton initialization is quite a long process, so it’s important that, once started, the server is kept continuously loaded.

We have implemented a server that initializes the models once and then stays running and available all the time.
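The difference between the two approaches can be shown with a toy example that simply counts how often the weights are loaded. Everything here is a stand-in: `CountingLoader` plays the role of the expensive weight loading, and `LongRunningServer` plays the role of the Triton server.

```python
from typing import Callable, List


class CountingLoader:
    """Stand-in for expensive weight loading; counts how often it runs."""

    def __init__(self) -> None:
        self.calls = 0

    def __call__(self) -> Callable[[str], str]:
        self.calls += 1
        return lambda segment: f"features({segment})"


def process_with_per_segment_init(loader: CountingLoader,
                                  segments: List[str]) -> List[str]:
    """Old approach: the model is initialized again for every segment."""
    return [loader()(segment) for segment in segments]


class LongRunningServer:
    """New approach: the model is initialized once and then reused."""

    def __init__(self, loader: CountingLoader) -> None:
        self._model = loader()

    def infer(self, segment: str) -> str:
        return self._model(segment)


segments = ["seg-1", "seg-2", "seg-3"]

old_loader = CountingLoader()
process_with_per_segment_init(old_loader, segments)

new_loader = CountingLoader()
server = LongRunningServer(new_loader)
results = [server.infer(segment) for segment in segments]
```

After this runs, `old_loader.calls` equals the number of segments, while `new_loader.calls` stays at one no matter how many segments are processed.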

Optimized GPU utilization

With the implementation of Triton, we were able to separate inference and pre-processing and run them as two simultaneous processes in separate services.

The idea was good.

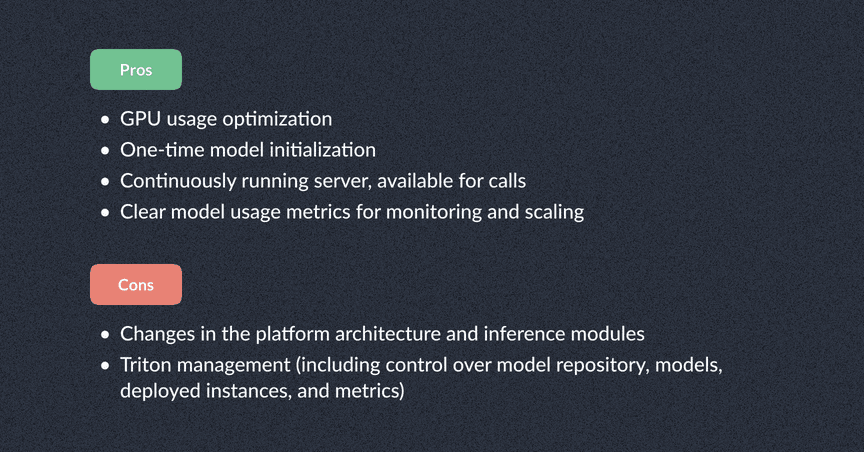

The advantage is that we have completely separated the pre-processing, which is run by machines with CPU, and the expensive GPUs don’t have to wait for the pre-processing completion.
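The decoupling can be pictured as a producer-consumer pipeline. In the sketch below, threads and an in-process queue stand in for the CPU pre-processing services and the GPU inference server; in the real platform these are separate services talking over the network, so this is only an analogy with hypothetical names.

```python
import queue
import threading
from typing import List

SENTINEL = None  # marks the end of the stream for the inference worker


def preprocess_worker(segments: List[str], out_queue: queue.Queue) -> None:
    """CPU side: turn each segment into a ready-to-infer batch."""
    for segment in segments:
        out_queue.put(f"batch({segment})")


def inference_worker(in_queue: queue.Queue, results: List[str]) -> None:
    """GPU side: consume batches as soon as any producer delivers one."""
    while True:
        batch = in_queue.get()
        if batch is SENTINEL:
            break
        results.append(f"features({batch})")


def run_pipeline(segment_groups: List[List[str]]) -> List[str]:
    """Run several pre-processing workers feeding one inference worker."""
    batches: queue.Queue = queue.Queue(maxsize=8)
    results: List[str] = []
    consumer = threading.Thread(target=inference_worker, args=(batches, results))
    producers = [
        threading.Thread(target=preprocess_worker, args=(group, batches))
        for group in segment_groups
    ]
    consumer.start()
    for producer in producers:
        producer.start()
    for producer in producers:
        producer.join()
    batches.put(SENTINEL)  # all producers are done; let the consumer exit
    consumer.join()
    return results
```

Because several producers feed one consumer, the consumer rarely has to sit idle waiting for a single slow pre-processing step.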

Among the drawbacks are the above-mentioned changes in the platform architecture and inference modules.

Triton implementation also requires management and additional control over model repository, models, deployed instances, and metrics.

Using Triton may also cause large amounts of network traffic between the modules and the Triton server.

Models, if their format is not supported by Triton, must be converted to one of the supported formats, including the creation of the corresponding configs in pbtxt.
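For readers who haven’t seen such a config, the sketch below renders a minimal `config.pbtxt` for a TensorRT model. The field names follow Triton’s model configuration format, but the model name, tensor names, shapes, and instance count are hypothetical, and `render_config` is just an illustrative helper.

```python
from typing import List, Sequence


def render_config(model_name: str, platform: str, max_batch_size: int,
                  input_name: str, input_dims: Sequence[int],
                  output_name: str, output_dims: Sequence[int],
                  instance_count: int = 1) -> str:
    """Render a minimal Triton model configuration with one FP32 input and output."""

    def tensor(name: str, dims: Sequence[int]) -> List[str]:
        dims_text = ", ".join(str(d) for d in dims)
        return [
            "  {",
            f'    name: "{name}"',
            "    data_type: TYPE_FP32",
            f"    dims: [ {dims_text} ]",
            "  }",
        ]

    lines = [
        f'name: "{model_name}"',
        f'platform: "{platform}"',
        f"max_batch_size: {max_batch_size}",
        "input [", *tensor(input_name, input_dims), "]",
        "output [", *tensor(output_name, output_dims), "]",
        # Several instances of the same model can run concurrently on a GPU.
        "instance_group [",
        "  {",
        f"    count: {instance_count}",
        "    kind: KIND_GPU",
        "  }",
        "]",
    ]
    return "\n".join(lines) + "\n"


# Hypothetical model: a TensorRT plan taking 3x224x224 images.
config = render_config(
    model_name="end_credits_detector",
    platform="tensorrt_plan",
    max_batch_size=16,
    input_name="INPUT__0", input_dims=[3, 224, 224],
    output_name="OUTPUT__0", output_dims=[2],
    instance_count=2,
)
```

The resulting text is saved as `config.pbtxt` in the model’s directory inside the model repository, next to the numbered version folder that holds the model file.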

Tangible results of using Triton: profit in figures

We have just started using Triton Inference Server, and integrated it with one of our pipelines that performs end credits detection. But we have already felt the difference.

We didn’t win at video processing time; in general, it remained the same.

But we definitely won at scaling and increased the system’s throughput.

1. We’ve separated processes running on GPUs and CPUs. Now we can scale them separately.

2. With Triton, we’ve managed to optimize GPU utilization. The expensive machines with GPUs no longer wait idly; they work all the time.

Now we can utilize fewer GPUs and take more CPUs, which results in a significant economic advantage.
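Scaling the two sides separately boils down to a simple sizing rule. The sketch below uses the ratio mentioned earlier (4 services per Triton instance); the round-robin policy is an assumption made for illustration, since a real load balancer may distribute calls differently, and the instance names are hypothetical.

```python
import itertools
import math
from typing import Iterator, Sequence

SERVICES_PER_TRITON = 4  # ratio quoted earlier in the article


def triton_instances_needed(n_services: int,
                            services_per_triton: int = SERVICES_PER_TRITON) -> int:
    """Number of Triton instances needed to serve n_services pre-processing services."""
    if n_services <= 0:
        return 0
    return math.ceil(n_services / services_per_triton)


def round_robin(instances: Sequence[str]) -> Iterator[str]:
    """Assumed balancing policy: hand out instances in a repeating cycle."""
    return itertools.cycle(instances)


# 16 CPU workers, as in the cost example that follows.
n_triton = triton_instances_needed(16)
targets = round_robin([f"triton-{i}" for i in range(n_triton)])
first_calls = [next(targets) for _ in range(6)]
```

For 16 workers the rule gives 4 Triton instances, which matches the setup in our cost example.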

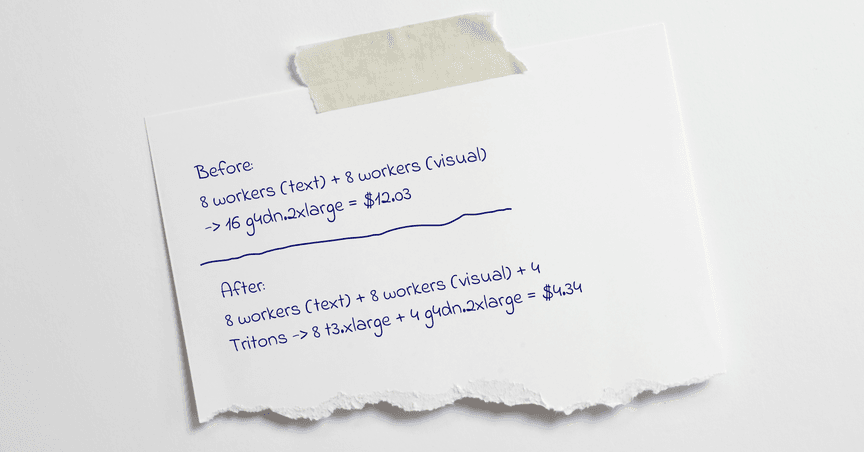

Here’s an example for clarity. Let’s review how much we spent on the processing before Triton and after.

Before:

For 8 workers with a common text detection model and 8 workers with a common visual recognition model, we needed 16 g4dn.2xlarge (Amazon GPU instances), which cost us $12.03.

After:

For 8 workers with a common text detection model and 8 workers with a common visual recognition model (the same number of workers) + 4 Triton instances, we need 8 t3.xlarge (Amazon CPU instances) and only 4 g4dn.2xlarge (Amazon GPU instances), which is only $4.34.

Thus, Triton integration allowed us to use 4 times fewer GPUs and pay 2.8 times less.
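The arithmetic behind these figures can be reproduced in a few lines. The unit prices below are assumptions: they are hourly on-demand rates that happen to reproduce the totals quoted above, so the totals are read here as cost per hour. Actual prices depend on region and date.

```python
# Assumed hourly on-demand prices in USD (not stated in the article).
G4DN_2XLARGE_USD_PER_HOUR = 0.752
T3_XLARGE_USD_PER_HOUR = 0.1664

# Before: every worker runs on its own GPU instance.
gpus_before = 16
cost_before = gpus_before * G4DN_2XLARGE_USD_PER_HOUR

# After: workers run on CPU instances, and only the Triton servers need GPUs.
cpus_after = 8
gpus_after = 4
cost_after = (cpus_after * T3_XLARGE_USD_PER_HOUR
              + gpus_after * G4DN_2XLARGE_USD_PER_HOUR)

gpu_reduction = gpus_before / gpus_after
cost_reduction = cost_before / cost_after

print(f"before: ${cost_before:.2f}, after: ${cost_after:.2f}")
print(f"{gpu_reduction:.0f}x fewer GPUs, {cost_reduction:.1f}x lower cost")
```

Under these assumed rates, the script prints totals of $12.03 and $4.34, with 4x fewer GPUs and a 2.8x lower cost.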

It was only our first iteration but the result is already impressive, and we are planning to integrate Triton Inference Server into other Cognitive Mill™ pipelines.