Imitating human attention

Attention is complex both as a concept in psychological studies and as a process in the human brain. It is one of the key cognitive abilities that we start using and developing at birth and keep relying on throughout our lives, as it is critical for survival and learning.

Finding a reliable way to imitate human visual attention has always been a challenging goal for AI scientists. Yet researchers have not reached a consensus, and there is no one-size-fits-all approach to creating a perfect model.

Attention is a vast topic, so let’s limit it to visual attention and finally review it in the context of video analysis.

What is visual attention?

In cognitive psychology, visual attention is the ability to attend to specific stimuli in the environment while ignoring others. It is the mechanism that selects the stimuli a person notices first, thus prioritizing critical information and helping us ignore what is irrelevant.

The main problem of perception is data overload: our retinas receive about 10⁸–10⁹ bits of visual information per second. Our visual cortex, the region of the brain responsible for processing incoming visual information, cannot handle that many representations at once. This problem is known as the attentional bottleneck.

With its limited resources, our brain has to be selective.

So although it seems that we see everything, that is not actually true. Our focus of attention switches from the most important objects and events to less critical ones. This does not happen simultaneously but as a sequence of quick, covert micro-actions.

Scientists usually identify the following types of visual attention mechanisms: space-based, object-based, and feature-based.

There are many studies and debates about the number of objects the human brain can focus on simultaneously. According to experimental studies by X. Zhang, N. Mlynaryk, and others, our object-based attention can handle two objects at the same time.

Nor is there consensus among scientists on which attention stimuli are dominant: feature-based or location-based. There are two popular views on the importance of position and features in visual attention mechanisms: position-special theories and position-not-special theories.

Position-not-special theories state that all features are equivalent: shapes, colors, and locations all have an equal chance of becoming primary stimuli.

Position-special theories posit that selection by position is more direct and basic than selection by form or color.

One of the many classifications of attention distinguishes between types of information processing.

There are two main types, known as bottom-up and top-down processing.

- Bottom-up processing is a fast, primitive, sensory-driven mechanism. It starts with external stimuli: salient objects in the environment that we perceive unintentionally, without prior knowledge about them. Perception comes first and initiates the cognitive process.

- Top-down processing is voluntary and goal-driven, guided by prior knowledge, experience, expectations, and so on. Cognition shapes perception, so we can easily ignore salient stimuli while focusing on a target object or event.

There are two major theories that explain how these attention types function and can be imitated: the “Feature-integration theory” (FIT) proposed by A. Treisman and G. Gelade in 1980 and the “Guided Search 2.0” developed by J. M. Wolfe in 1994 as a reaction to FIT.

FIT states that attention has two stages:

1. In the preattentive stage, low-level features such as color, luminance intensity, and orientation are extracted in parallel across the visual field. This bottom-up mechanism operates before the cognitive process begins.

2. In the focused attention stage, these features are bound together to form a recognizable object in the brain. At this point, the top-down mechanism starts.
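FIT's best-known prediction concerns visual search: a target that differs from every distractor in a single feature (a red bar among green ones) pops out preattentively, while a target defined only by a conjunction of features (a red vertical bar among red horizontal and green vertical bars) needs focused, item-by-item attention. The toy sketch below encodes that prediction; the `Item` class and `pops_out` function are hypothetical illustrations, not code from the theory's authors.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Item:
    color: str
    orientation: str


def pops_out(target, distractors):
    """Toy FIT prediction for a visual search display.

    Each feature dimension acts as a separate preattentive map. If no
    distractor shares the target's value on some dimension, that single map
    flags the target and search is parallel ("pop-out"). Otherwise only the
    conjunction of features identifies it, which FIT says needs focused attention.
    """
    for feature in ("color", "orientation"):
        value = getattr(target, feature)
        if all(getattr(d, feature) != value for d in distractors):
            return True
    return False


red_vertical = Item("red", "vertical")
feature_search = [Item("green", "vertical")] * 5
conjunction_search = [Item("green", "vertical"), Item("red", "horizontal")] * 3
print(pops_out(red_vertical, feature_search))      # True
print(pops_out(red_vertical, conjunction_search))  # False
```

Real search experiments measure this as reaction time that stays flat with display size for pop-out targets and grows with display size for conjunction targets; the boolean here only stands in for that distinction.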

In his Guided Search 2.0 model, J. M. Wolfe argues that the bottom-up and top-down attention mechanisms start simultaneously. The information received from the sense organs is then prioritized by combining both kinds of signals into a single activation map.
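Attention in this model goes first to the most active location of the combined map. The sketch below is a deliberately simplified, hypothetical version: items are described by numeric feature vectors, the bottom-up term is each item's mean feature difference from the other items, the top-down term is the negative distance to the searched-for target, and the weights are arbitrary assumptions rather than Wolfe's published parameters.

```python
import numpy as np


def activation_map(features, target, w_bottom_up=1.0, w_top_down=1.0):
    """Toy Guided Search-style activation map (one value per item).

    features: (n_items, n_features) feature values of each item.
    target:   (n_features,) features of the object being searched for.
    """
    features = np.asarray(features, dtype=float)
    target = np.asarray(target, dtype=float)
    n = len(features)
    # Bottom-up: how much each item differs, on average, from all the others.
    pairwise = np.abs(features[:, None, :] - features[None, :, :]).sum(axis=2)
    bottom_up = pairwise.sum(axis=1) / max(n - 1, 1)
    # Top-down: how close each item is to the target template (0 = exact match).
    top_down = -np.abs(features - target).sum(axis=1)
    return w_bottom_up * bottom_up + w_top_down * top_down


# Features: (redness, verticality). Item 0 is a vivid red distractor,
# item 3 is the green vertical target the observer is looking for.
items = [[3, 0], [0, 0], [0, 0], [0, 1], [0, 0]]
target = [0, 1]
print(np.argmax(activation_map(items, target, w_top_down=0.0)))  # 0
print(np.argmax(activation_map(items, target)))                  # 3
```

With the top-down weight switched off, the odd red item wins; once the observer's goal is taken into account, the target wins even though it is less conspicuous.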

Both theories are still debated, but most models are based on FIT.

As we are interested in understanding and imitating the visual attention of a human watching a video with limited time to examine the scene, we’ll mostly refer to bottom-up attention.

Our bottom-up attention is driven by saliency, the attribute of an event or object that makes it prominent and makes it stand out from its surroundings.

What attracts our attention?

Salient objects are what we notice first. But what makes an object or a part of a visual field salient?

We naturally attend to stimuli that surprise us. Humans are likely to notice anomalies, things that pop out.

So we quickly notice sudden movements, unexpected sounds, or flashes of light. Human attention mechanisms are sensitive to motion: the medial superior temporal (MST) area of the brain is involved in motion processing, and a moving object is more salient than a static one.
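The simplest computational stand-in for this sensitivity to motion is frame differencing: pixels that change between consecutive frames are treated as salient. The sketch below is a toy illustration, assuming grayscale frames scaled to [0, 1] and an arbitrary noise threshold; it is not a model of how the brain detects motion.

```python
import numpy as np


def frame_difference_saliency(prev_frame, next_frame, threshold=0.1):
    """Mark pixels whose intensity changed between two grayscale frames in [0, 1]."""
    diff = np.abs(np.asarray(next_frame, dtype=float) - np.asarray(prev_frame, dtype=float))
    diff[diff < threshold] = 0.0  # ignore small changes such as sensor noise
    peak = diff.max()
    return diff / peak if peak > 0 else diff


# A static bright square and a small dot that moves one pixel to the right.
prev = np.zeros((8, 8)); prev[1:4, 1:4] = 1.0; prev[6, 1] = 1.0
nxt = prev.copy(); nxt[6, 1] = 0.0; nxt[6, 2] = 1.0
motion = frame_difference_saliency(prev, nxt)
print(np.argwhere(motion > 0).tolist())  # [[6, 1], [6, 2]]
```

The large static square, although brighter overall, produces no response, while the tiny moving dot does.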

Our bottom-up attention is attracted by stimuli that contrast with their surroundings in color, luminance intensity, size, orientation, spatial frequency, and so on.
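Computational models such as Itti and Koch's capture this kind of contrast with center-surround differences: the same image is blurred at a fine and a coarse scale, and locations where the two differ stand out from their neighborhood. The NumPy-only sketch below approximates this with a difference of two Gaussian blurs; the function names and sigma values are illustrative assumptions, and the original model uses image pyramids across several scales instead.

```python
import numpy as np


def gaussian_blur(image, sigma):
    """Separable Gaussian blur with edge padding, using NumPy only."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(np.asarray(image, dtype=float), radius, mode="edge")
    # Filter every row, then every column; "valid" mode trims the padding off.
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def center_surround_contrast(intensity, center_sigma=1.0, surround_sigma=4.0):
    """Absolute difference between a fine (center) and a coarse (surround) blur."""
    center = gaussian_blur(intensity, center_sigma)
    surround = gaussian_blur(intensity, surround_sigma)
    return np.abs(center - surround)


# A small bright patch on a dark background is the most contrasting region.
image = np.zeros((32, 32))
image[14:18, 14:18] = 1.0
contrast = center_surround_contrast(image)
row, col = np.unravel_index(np.argmax(contrast), contrast.shape)
print(int(row), int(col))  # a pixel inside the patch (rows and columns 14-17)
```

A uniform image gives (numerically) zero contrast everywhere, which matches the intuition that nothing in it stands out.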

Among salient features, color and luminance intensity prove to be the primary attributes that draw our attention. Color information is among the first to be processed by our visual system, and it is considered intrinsically salient.
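Itti, Koch and Niebur's model, for example, turns color into contrast through opponent channels, loosely mirroring red-green and blue-yellow opponency in the visual system. The sketch below computes their broadly tuned R, G, B, Y channels and the two opponent maps. Unlike the original, it skips normalizing by intensity and the subsequent center-surround step, so treat it as an illustration of the channel definitions only.

```python
import numpy as np


def opponent_color_maps(rgb):
    """Red-green and blue-yellow opponent maps from an (H, W, 3) RGB array in [0, 1]."""
    rgb = np.asarray(rgb, dtype=float)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    # Broadly tuned color channels as defined by Itti, Koch & Niebur (1998).
    R = r - (g + b) / 2
    G = g - (r + b) / 2
    B = b - (r + g) / 2
    Y = (r + g) / 2 - np.abs(r - g) / 2 - b
    R, G, B, Y = (np.clip(c, 0, None) for c in (R, G, B, Y))  # negatives set to 0
    return R - G, B - Y


pixels = np.array([[[1.0, 0.0, 0.0],    # red
                    [0.0, 0.0, 1.0],    # blue
                    [1.0, 1.0, 0.0],    # yellow
                    [0.5, 0.5, 0.5]]])  # gray
rg, by = opponent_color_maps(pixels)
print(rg.tolist())  # [[1.0, 0.0, 0.0, 0.0]]
print(by.tolist())  # [[0.0, 1.0, -1.0, 0.0]]
```

Gray produces no response in either map, which is why a saturated color on a neutral background is such a strong cue.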

Unlike color, which is absolute, size and spatial orientation are relative characteristics: judging them requires comparison with other objects of a different size or orientation.

But in reality, we rarely deal with isolated features. To recognize even a single object, we have to perceive it as a combination of features bound together, which makes the task more challenging.

Attention mechanisms have been shaped over a long evolutionary history, so many of them are inherited.

The human mind is inquisitive by nature, and we are constantly learning from the environment. So our perception system is tailored to notice and analyze new, unfamiliar information to enhance our awareness of the surrounding world.

If you look at the picture below, what do you notice first?

We also attend to things we perceive as potential dangers. As a biological species, humans tend to prioritize fear-relevant stimuli.

Even today, we immediately respond to stimuli that used to threaten the survival of our ancestors. Although many of these things are no longer dangerous to us, they are still the first to draw our attention. Humans evolved this mechanism to survive, and it has become hard-wired. That is why you probably noticed the water snake before anything else in the picture above.

Thanks to this inherited mechanism, critical for survival, we notice and identify animals immediately.

People are also among the first things our attention is drawn to. We are involuntarily attracted to human faces, bodies, and body parts. Our visual system even has a specific area responsible for face recognition, called the fusiform face area (FFA).

The list could go on and on; that's why I have included only a couple of the most explicit examples of stimuli that drive human bottom-up attention mechanisms.

How to imitate visual attention?

Despite all the studies, the brain is still a mystery, with no comprehensive guide explaining how it works. So modeling a human-like level of visual information processing and understanding is a great challenge for computer vision systems and deep neural networks.

Imitating human visual attention mechanisms is called visual saliency prediction (or eye fixation prediction). It draws on knowledge from neurobiology, cognitive psychology, and computer vision.

But it’s not always easy to identify the salient object. Let’s take a look at the following challenging cases:

- A scene may contain several salient objects at once, so it is hard to decide which one is the most salient.

- A semantically salient object may be placed in a cluttered, multicolored environment.

- An object may be located in a low-contrast environment.

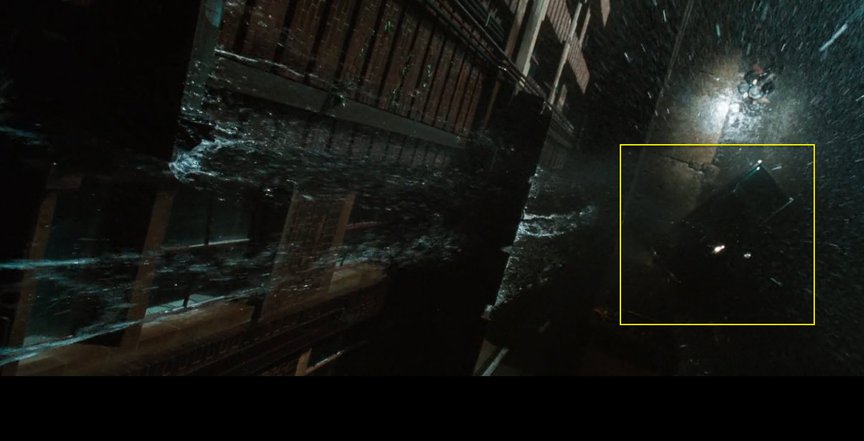

A scene from “The Matrix” is an example of such a challenging case: it is quite hard to spot the car. The car is small, it does not stand out against the dark street, and the floods of water streaming from the buildings look even more salient than the car itself.

Saliency detection is the process of locating the parts of an image that most attract human attention, using computer vision algorithms, deep neural networks, and other techniques.

One of the most common methods that saliency detection models are based on is the generation of saliency maps.

In the early stages of image processing, feature maps are built from the extracted features. These maps are then combined into a saliency map that shows the most conspicuous areas of the scene.

According to L. Itti and C. Koch, a saliency map is “an explicit two-dimensional map that encodes the conspicuity of objects in the visual environment.” Saliency maps are widely used in computer vision and deep learning models to define the region of interest within a visual field.
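Combining the maps is less trivial than adding them up, because a map with one strong peak is more informative than a map full of similar peaks. Itti, Koch and Niebur handle this with a normalization operator N(·): rescale each map to a fixed range [0, M], find the mean m of its local maxima other than the global one, and multiply the map by (M − m)². The sketch below follows that idea in simplified form; the 3×3 local-maximum test, ignoring zero-valued maxima, and plain averaging across maps are assumptions, since the original works across pyramid scales and feature channels.

```python
import numpy as np


def _local_maxima(fmap):
    """Values of pixels that are >= all 8 neighbours (a simple 3x3 test)."""
    h, w = fmap.shape
    padded = np.pad(fmap, 1, mode="constant", constant_values=-np.inf)
    neighbours = [padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
                  for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)]
    is_max = np.all([fmap >= n for n in neighbours], axis=0)
    return fmap[is_max]


def normalize(feature_map, top=1.0):
    """Simplified Itti-Koch N(.): promote maps with one dominant peak."""
    fmap = np.asarray(feature_map, dtype=float)
    fmap = fmap - fmap.min()
    if fmap.max() == 0:
        return fmap  # a flat map carries no saliency information
    fmap = fmap * (top / fmap.max())            # 1) rescale to [0, top]
    maxima = _local_maxima(fmap)
    others = maxima[(maxima > 0) & (maxima < top)]
    m = others.mean() if others.size else 0.0   # 2) mean of the other peaks
    return fmap * (top - m) ** 2                # 3) weight by (M - m)^2


def saliency_map(feature_maps):
    """Average of the normalized feature maps (all of the same shape)."""
    return np.mean([normalize(f) for f in feature_maps], axis=0)


one_peak = np.zeros((9, 9)); one_peak[4, 4] = 1.0
two_peaks = np.zeros((9, 9)); two_peaks[2, 2] = 1.0; two_peaks[6, 6] = 0.9
print(normalize(one_peak).max())              # 1.0
print(round(normalize(two_peaks).max(), 4))   # 0.01
combined = saliency_map([one_peak, two_peaks])
```

The map with a single clear winner keeps its full weight, while the map with two competing peaks is suppressed a hundredfold, so the combined saliency map peaks where the unambiguous feature map does.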

Generating static saliency maps for an image is not always simple even for today's models.

Creating the motion saliency maps essential for video analysis is even more complicated and expensive: a static map requires analyzing just one frame, while a motion map requires analyzing multiple frames. And to create a spatio-temporal model, we need to fuse both static and motion saliency maps.
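A weighted linear blend is one of the simplest ways to fuse the two maps into a spatio-temporal one. The sketch below assumes both maps have the same shape; the min-max rescaling and the `motion_weight` parameter are illustrative choices, not the fusion rule of any particular published model.

```python
import numpy as np


def _rescale(m):
    """Min-max rescale to [0, 1]; a flat map becomes all zeros."""
    m = np.asarray(m, dtype=float)
    span = m.max() - m.min()
    return (m - m.min()) / span if span > 0 else np.zeros_like(m)


def fuse_spatiotemporal(static_map, motion_map, motion_weight=0.5):
    """Weighted blend of a static and a motion saliency map of the same shape."""
    if not 0.0 <= motion_weight <= 1.0:
        raise ValueError("motion_weight must be between 0 and 1")
    return (1 - motion_weight) * _rescale(static_map) + motion_weight * _rescale(motion_map)


static = np.zeros((3, 3)); static[0, 0] = 5.0   # strongest static cue, top left
motion = np.zeros((3, 3)); motion[2, 2] = 0.2   # weak but only motion cue, bottom right
print(np.argmax(fuse_spatiotemporal(static, motion, 0.7)))  # 8, the moving region
print(np.argmax(fuse_spatiotemporal(static, motion, 0.3)))  # 0, the static region
```

Rescaling both maps first matters: raw motion energy and static contrast live on different numeric scales, and without it the weight would mostly reflect units rather than relative importance.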

The main problem is to find an efficient way to extract features of the most salient objects within moving scenes.

Let’s review saliency detection methods within the two most popular and fundamentally different fields: deep learning and classical computer vision.

They offer a great variety of approaches for static saliency detection.

One of the classic examples is the saliency-based visual attention model introduced by L. Itti, C. Koch, and E. Niebur in 1998.

An example of a deep neural network solving the saliency detection task is SALICON (Saliency in Context), introduced in 2015 to narrow the semantic gap. It deals better with high-level features but may miss low-level ones.

In 2020, A. Kroner, M. Senden, K. Driessens, and R. Goebel introduced an encoder-decoder network architecture that makes predictions based on high-level semantic features, unlike the earlier state-of-the-art models.

Among computer vision-based methods, X. Hou and L. Zhang introduced the spectral residual approach, which does not require any prior knowledge about the object. They proposed inspecting the properties of the background instead of the target object.
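The spectral residual method is compact enough to sketch directly. It takes the log amplitude spectrum of the image, subtracts its local average (the smooth part treated as the "expected" background), recombines the remaining residual with the original phase, and transforms back to the image domain. The NumPy-only version below follows those steps; the original paper also downsamples the input to a small fixed size first, and that step, the `eps` constant, and the smoothing sigma are omitted or chosen here as assumptions.

```python
import numpy as np


def _mean_filter_wrap(x, size=3):
    """Local mean with wrap-around padding (the DFT spectrum is periodic)."""
    r = size // 2
    padded = np.pad(x, r, mode="wrap")
    h, w = x.shape
    out = np.zeros((h, w))
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + h, dx:dx + w]
    return out / size ** 2


def _gaussian_smooth(image, sigma):
    """Separable Gaussian blur with edge padding."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-(x ** 2) / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(image, radius, mode="edge")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def spectral_residual_saliency(gray, smooth_sigma=2.5, eps=1e-8):
    """Spectral residual saliency map (after Hou & Zhang), scaled to [0, 1]."""
    spectrum = np.fft.fft2(np.asarray(gray, dtype=float))
    log_amplitude = np.log(np.abs(spectrum) + eps)
    phase = np.angle(spectrum)
    # Spectral residual: log amplitude minus its 3x3 local average.
    residual = log_amplitude - _mean_filter_wrap(log_amplitude, 3)
    # Back to the image domain with the original phase, then square and smooth.
    saliency = np.abs(np.fft.ifft2(np.exp(residual + 1j * phase))) ** 2
    saliency = _gaussian_smooth(saliency, smooth_sigma)
    return saliency / saliency.max()


yy, xx = np.mgrid[0:64, 0:64]
blob = np.exp(-((yy - 20) ** 2 + (xx - 40) ** 2) / (2 * 2.0 ** 2))  # blob at (20, 40)
saliency = spectral_residual_saliency(blob)
peak = np.unravel_index(np.argmax(saliency), saliency.shape)
print(tuple(int(i) for i in peak))  # at or right next to the blob center
```

No model of the object is involved at any point: the method only asks which parts of the spectrum deviate from a smooth, typical shape.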

Another option is the OpenCV saliency module, part of the opencv_contrib extra modules. It includes three classes of saliency detection algorithms: static saliency, motion saliency, and objectness.
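In Python, the module is exposed as `cv2.saliency` when the `opencv-contrib-python` package is installed. The sketch below runs the static spectral residual detector through `StaticSaliencySpectralResidual_create()` and `computeSaliency()`; the module also provides `StaticSaliencyFineGrained_create()`, `MotionSaliencyBinWangApr2014_create()`, and `ObjectnessBING_create()` for the other algorithm classes. The wrapper function name and the fallback to `None` when the contrib build is missing are assumptions for illustration.

```python
import numpy as np

try:
    import cv2
    HAS_SALIENCY = hasattr(cv2, "saliency")  # present only in contrib builds
except ImportError:
    cv2 = None
    HAS_SALIENCY = False


def opencv_static_saliency(image_bgr):
    """Spectral residual saliency map from OpenCV, or None if unavailable."""
    if not HAS_SALIENCY:
        return None
    detector = cv2.saliency.StaticSaliencySpectralResidual_create()
    ok, saliency_map = detector.computeSaliency(image_bgr)
    if not ok:
        raise RuntimeError("OpenCV could not compute the saliency map")
    return saliency_map


image = np.zeros((120, 160, 3), np.uint8)
image[50:70, 70:90] = (0, 0, 255)  # a red square on black (BGR order)
result = opencv_static_saliency(image)
print(None if result is None else result.shape)
```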

Motion saliency detection also offers a variety of methods, though the choice is more limited than for static saliency.

For instance, J. Xu and S. Yue introduced the Global Attention Model or GAM. They fused the static, motion, and top-down saliency maps to imitate how the human visual attention mechanisms work in real life.

R. Kalboussi, M. Abdellaoui, and A. Douik proposed a spatio-temporal framework based on a uniform contrast measure and motion distinctiveness.

J. Hu, N. Pitsianis, and X. Sun describe motion saliency map generation methods based on the conventional optical-flow model, motion contrast between orientation feature pyramids, and spatio-temporal filtering.
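Of these, the optical-flow route is the easiest to try with standard tools. The sketch below is a generic illustration, not the method of Hu et al.: it estimates dense flow with OpenCV's Farneback algorithm (`cv2.calcOpticalFlowFarneback`, with commonly used parameter values) and scores each pixel by how much its motion differs from the frame's median motion, an assumed way to discount camera pans. It returns `None` if OpenCV is not installed.

```python
import numpy as np

try:
    import cv2
except ImportError:
    cv2 = None


def flow_motion_saliency(prev_gray, next_gray):
    """Motion contrast from dense optical flow, scaled to [0, 1].

    prev_gray, next_gray: 8-bit single-channel frames of the same size.
    Returns None when OpenCV is not installed.
    """
    if cv2 is None:
        return None
    # Farneback arguments: pyr_scale, levels, winsize, iterations, poly_n, poly_sigma, flags.
    flow = cv2.calcOpticalFlowFarneback(prev_gray, next_gray, None,
                                        0.5, 3, 15, 3, 5, 1.2, 0)
    # Treat the median flow vector as background (e.g. camera) motion.
    background = np.median(flow.reshape(-1, 2), axis=0)
    contrast = np.linalg.norm(flow - background, axis=2)
    peak = contrast.max()
    return contrast / peak if peak > 0 else contrast


prev = np.zeros((100, 100), np.uint8); prev[40:60, 30:50] = 255
nxt = np.zeros_like(prev); nxt[40:60, 33:53] = 255  # square moves 3 px right
motion = flow_motion_saliency(prev, nxt)
```

Subtracting a global motion estimate is what separates this from raw flow magnitude: during a pan, every pixel moves, but only objects moving differently from the camera should count as salient.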

These works are just a few examples of saliency detection methods.

There are so many approaches and combinations that covering all of them in this article would be impossible. Each has its strengths and weaknesses, and there is no perfect solution, so the choice of methods largely depends on the task.

Why imitate it at all?

The enormous amount of information that forces human attention to act as a filter poses the same problem for an artificial mind: it, too, needs to limit its region of interest so it does not waste resources processing irrelevant information.

In computer vision and deep learning, visual attention mechanisms are used in various fields for many visual tasks, such as image classification, object detection, and face recognition.

In the media and entertainment industry, and in video analysis in particular, imitating human attention mechanisms helps artificial intelligence focus on salient objects.

Salient objects arouse interest and keep viewers engaged, so saliency can be used to generate video highlights by selecting the scenes with the most surprising, pleasant, or scary objects and events.

Another, probably more obvious, application of knowledge about visual attention is video cropping. The growing popularity of social networks such as TikTok and Instagram has increased the demand for cropping horizontal videos to the 9:16 aspect ratio.

Understanding human attention mechanisms allowed our AI team to create a cropping solution that automatically keeps the most important part of the visual field within the cropping frame.
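The source does not describe how the cropping solution positions the frame. As a hypothetical illustration only, here is one simple way a saliency map could drive a 9:16 crop: compute the width of a full-height window with that aspect ratio, then slide it horizontally and keep the position that contains the most saliency. The function names are invented for this sketch.

```python
import numpy as np


def crop_width_for_ratio(frame_height, ratio_w=9, ratio_h=16):
    """Width of a full-height crop window with the target aspect ratio."""
    return int(round(frame_height * ratio_w / ratio_h))


def best_crop_x(saliency_map, crop_width):
    """Left edge of the full-height window that contains the most saliency."""
    if crop_width <= 0:
        raise ValueError("crop_width must be positive")
    column_mass = np.asarray(saliency_map, dtype=float).sum(axis=0)
    if crop_width >= column_mass.size:
        return 0  # the window is as wide as the frame: nothing to choose
    # Prefix sums give the total saliency of every window in one pass.
    prefix = np.concatenate(([0.0], np.cumsum(column_mass)))
    window_mass = prefix[crop_width:] - prefix[:-crop_width]
    return int(np.argmax(window_mass))


print(crop_width_for_ratio(1080))  # 608, for a 1920x1080 source
saliency = np.zeros((4, 20)); saliency[:, 12:15] = 1.0
print(best_crop_x(saliency, 5))    # 10: columns 10-14 cover the salient block
```

Running this independently on every frame would make the window jump around, which is one reason a real system needs more than a per-frame maximum.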

Taking some of the known ingredients reviewed in the previous sections, our AI scientists created a chef’s recipe to empower reliable decision-making.

All of the mentioned approaches have their strengths and weaknesses, so the optimal solution lies in the right combination of best practices. And it obviously depends on the result you want to achieve.

Deep learning (DL) is good at narrow tasks such as object detection or facial recognition, which belong to the early representation stage, but deep neural networks are still weak at making decisions. That is why we cannot rely fully on DL in cognitive automation.

DL, in combination with computer vision, math modeling, cognitive science, and more, allowed us to create universal modules that can be easily adapted and used in different pipelines for various business cases. And, importantly for cognitive business automation, the result is predictable.

Let's return to our crop solution shown in the video example above. It gives impressive results, yet they are not perfect.

The robot's attention may shift from the main object to the environment when the environment is more dynamic (a shower, flames, background noise).

It may also focus on large, zoomed-in static objects and miss smaller ones that are in motion.

Abrupt movements that happen too fast may be missed by the robot's eyes altogether.
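The text does not say why such movements are missed. One plausible factor in saliency-driven cropping generally, stated here as an assumption rather than a description of this solution, is temporal smoothing: the crop position is usually low-pass filtered so the frame does not jitter, and a smoothed frame cannot fully follow an event that lasts only a few frames. A hypothetical exponential moving average shows the trade-off:

```python
def smooth_positions(raw_positions, alpha=0.2):
    """Exponential moving average of per-frame crop positions.

    alpha near 0: a stable, slow-reacting virtual camera;
    alpha = 1: the crop follows every jump of the raw saliency peak.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    smoothed, current = [], None
    for x in raw_positions:
        current = float(x) if current is None else alpha * x + (1 - alpha) * current
        smoothed.append(current)
    return smoothed


# The salient object jumps to x=100 for just two frames, then returns.
print([round(v, 2) for v in smooth_positions([0, 0, 100, 100, 0, 0], 0.2)])
# [0.0, 0.0, 20.0, 36.0, 28.8, 23.04]
```

The smoothed window only gets about a third of the way to the brief event before it is over, while a larger `alpha` would catch it at the cost of a shakier crop.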

But with the help of computer vision and math algorithms, we managed to keep the most engaging part of the visual field within the cropping frame in the overwhelming majority of scenes.

And this is only one example of various possible applications of artificially imitated human visual attention mechanisms.

We managed to put the accumulated scientific knowledge into practice and optimize platform performance on CPUs and GPUs thanks to smart scaling, turning our SaaS into a cost-effective solution for cognitive business automation.

Stay tuned for more AIHunters’ experience stories, and try out our pipelines at run.cognitivemill.com.