Cognitive Mill on the way to full cognitive audio-visual analysis

The world is moving at a breakneck pace, and we are fed more and more information, both useful and useless, that we can no longer sort and digest on our own. Human augmentation and process automation are no longer a luxury but a necessity.

AI-based technologies such as natural language processing (NLP), speech recognition, and speech-to-text transcription have become part of our everyday lives. We use them without even noticing: when smart filters sort our email, when we start typing a search query and pick one of the suggested options, and when we talk to chatbots and voice assistants.

Yes, statistics, at least in the U.S., show that Siri, Google Assistant, and Amazon Alexa are very popular. And when you type a message on your mobile device, you don’t actually type every word, right? Predictive text suggests possible endings based on your previous messages. I’d better stop here, as the list could go on and on.

Audio analysis as a must-have

The power of words, both spoken and written, is strong and must be considered even if you specialize in video analysis.

The automatically identified topic of a video, its keywords, the entities (proper names) mentioned most often in its timeline, and a summary of what is said are all very handy. They let you grasp the key points and main concepts of a video without watching it. This saves a lot of time and effort when viewers want to find something worthwhile on a specific topic and need to make sure the content meets their needs and won’t be a waste of time.

The technology is not new, and hundreds of apps already use it. But today, quality comes first. AI-based software that can better “understand” content and produce a “human” text summary definitely wins the competition. This is what the AIHunters team is striving for.

This article is about the first Cognitive Mill™ pipeline that performs audio analysis of video content.

Developing an audio analysis branch

Until now, our AI team has focused mainly on visual content analysis when developing recipes for cognitive video analysis. Now we have decided to pay more attention to audio analysis, or, to be precise, speech analysis. This is another step toward complete audio-visual analysis.

With this new pipeline, the Cognitive Mill™ platform can analyze audio content: it transcribes speech into text, identifies the topic of the video, extracts keywords and entities, and generates a summary of the transcribed text.

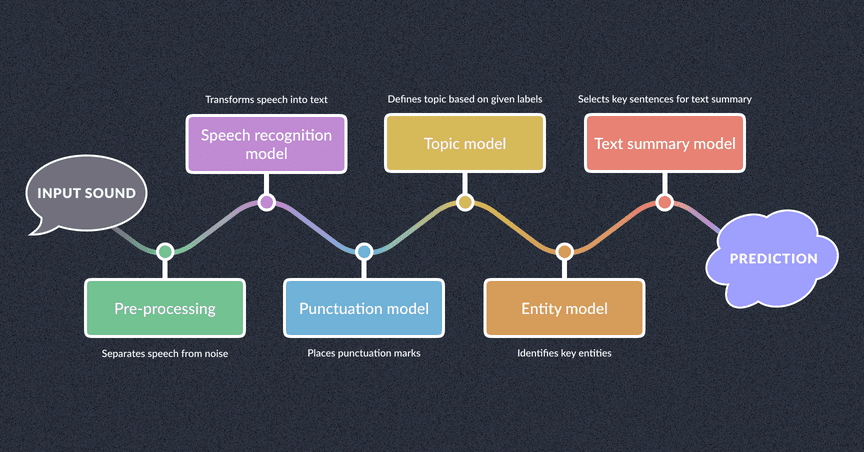

The Cognitive Mill™ text summarization process is structured like a traditional speech recognition pipeline, consisting of a number of independent modules.

Speech and text processing turned out to be demanding. The new text summarization pipeline required more neural networks than any pipeline analyzing visual content.

One neural network performs speech-to-text transcription. Another takes the resulting script as input and places punctuation marks. Next, a neural network identifies the topic of the video, and a list of keywords is generated for that topic. The following model identifies key entities. Finally, the last neural network generates an extractive summary from the script.
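The chain described in the previous paragraph can be pictured as independent stages that pass a shared result along. This is a minimal sketch, assuming each module is a function that reads a dictionary and returns new fields. The stage names and their placeholder outputs are hypothetical stand-ins for the neural networks, not Cognitive Mill™ code.

```python
from typing import Any, Callable, Dict, List

State = Dict[str, Any]
Stage = Callable[[State], State]


def run_pipeline(stages: List[Stage], state: State) -> State:
    """Run stages in order; each stage reads the shared state and returns new keys."""
    for stage in stages:
        state = {**state, **stage(state)}
    return state


# Hypothetical placeholder stages; in a real system each would wrap a neural network.
def speech_to_text(state: State) -> State:
    return {"script": "wash your hands with soap for long enough"}


def punctuate(state: State) -> State:
    return {"script": state["script"].capitalize() + "."}


def classify_topic(state: State) -> State:
    return {"topic": "health"}


def extract_keywords(state: State) -> State:
    # Keywords are chosen with the topic already known.
    return {"keywords": ["soap"] if state["topic"] == "health" else []}


def extract_entities(state: State) -> State:
    return {"entities": []}


def summarize(state: State) -> State:
    return {"summary": state["script"]}


result = run_pipeline(
    [speech_to_text, punctuate, classify_topic, extract_keywords, extract_entities, summarize],
    {"audio": b""},
)
print(result["topic"], result["keywords"], result["summary"])
```

Because the stages communicate only through the dictionary, one module (say, the punctuation model) can be swapped or retrained without touching the others. That is the practical benefit of keeping the modules independent.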

As mentioned above, this is the first iteration where the analysis is based only on the audio content. It currently supports only English, though adding weights for another language is fast and easy.

Models and results

Models

1. First, the system extracts audio content for processing. Then the audio track is separated into voice (speaker delivering information) and noise (background music, street noise, etc.).

After that, the speech recognition neural net takes the voice component as input and runs inference on it.

The speech recognition neural net makes predictions based on phonemes, using the so-called lexicon-free approach. On the one hand, this approach keeps us from missing information that is not in the lexicon, such as slang, abbreviations, and proper names. On the other hand, without any dictionary check, the summary may include misspelled words and even nonexistent words that sound similar to real ones.

2. The second neural network takes the text generated in the previous step and inserts punctuation marks, because the text summarization module is sensitive to punctuation.

3. The next model identifies the topic, and a list of relevant keywords is formed for it.

By the way, the topic is not limited to a fixed set of labels: you can supply any labels (topics), and the model will select the most appropriate one. This is possible thanks to zero-shot learning.

4. The next model then extracts key entities, such as the proper names mentioned in the video.

5. Finally, the last neural network generates an extractive text summary.
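Step 1 in the list above says the recognizer predicts symbols without a lexicon. The article does not name the decoding method. A common lexicon-free setup is a model trained with connectionist temporal classification (CTC), followed by greedy decoding, and the sketch below assumes that setup. For readability it uses letters instead of phonemes, and the frame probabilities are made up. Because nothing checks the output against a dictionary, a plausible-sounding non-word such as "compar" (which also appears in the sample summary below) passes through unchanged.

```python
from itertools import groupby
from typing import List, Sequence

BLANK = "_"  # CTC "blank" symbol (the character chosen here is arbitrary)


def ctc_greedy_decode(frame_probs: Sequence[Sequence[float]], alphabet: Sequence[str]) -> str:
    """Greedy CTC decoding: take the most likely symbol per audio frame,
    merge consecutive repeats, then drop blanks. No lexicon is consulted."""
    best = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    merged = [symbol for symbol, _ in groupby(best)]
    return "".join(symbol for symbol in merged if symbol != BLANK)


ALPHABET = [BLANK, "a", "c", "e", "m", "o", "p", "r"]


def fake_frames(symbols: str) -> List[List[float]]:
    """Build toy per-frame probabilities that peak on the given symbols."""
    off = 0.1 / (len(ALPHABET) - 1)
    return [[0.9 if a == s else off for a in ALPHABET] for s in symbols]


print(ctc_greedy_decode(fake_frames("cco_mpaar"), ALPHABET))  # compar
```

The blank symbol is what lets CTC emit genuine double letters: the frames "c", "_", "c", "c" decode to "cc". Without a lexicon, though, the decoder has no way to know that "compare" was intended.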
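Punctuation restoration (step 2 in the list above) is often framed as token classification: for each word, the model predicts which mark, if any, follows it. The label set and the capitalization rule below are assumptions. The sketch shows only the step that turns such predictions back into text, and the predictions are written by hand rather than produced by a model.

```python
from typing import List

SENTENCE_END = {".", "?", "!"}


def apply_punctuation(tokens: List[str], labels: List[str]) -> str:
    """Rebuild text from words and per-word punctuation predictions.

    labels[i] is the mark predicted to follow tokens[i] ("" for none).
    The first word of each sentence is capitalized.
    """
    if len(tokens) != len(labels):
        raise ValueError("expected exactly one label per token")
    words, capitalize_next = [], True
    for token, mark in zip(tokens, labels):
        word = token[:1].upper() + token[1:] if capitalize_next else token
        words.append(word + mark)
        capitalize_next = mark in SENTENCE_END
    return " ".join(words)


tokens = "wash your hands it takes twenty seconds".split()
labels = ["", "", ".", "", "", "", "."]  # hand-written stand-in for model output
print(apply_punctuation(tokens, labels))  # Wash your hands. It takes twenty seconds.
```

An extractive summarizer typically splits the script into sentences at these marks. This is one likely reason the summarization module is sensitive to punctuation: a missed period merges two sentences into a single, less useful unit.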
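The zero-shot topic selection in step 3 can work in more than one way, and the article does not say which approach the pipeline uses. One family of zero-shot classifiers embeds the text and every candidate label in the same vector space and picks the closest label. Another widely used family frames the task as natural language inference. The sketch below illustrates the embedding variant. Its `toy_embed` function is a hand-made stand-in for a real text-embedding model, and its vectors are invented.

```python
import math
from typing import Callable, Dict, List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def zero_shot_topic(text: str, labels: List[str], embed: Callable[[str], Vector]) -> str:
    """Pick the candidate label whose embedding is most similar to the text's.

    The labels are ordinary strings passed at call time, so new topics need
    no retraining, only a different `labels` list.
    """
    text_vec = embed(text)
    return max(labels, key=lambda label: cosine(text_vec, embed(label)))


# Toy stand-in for a real text-embedding model: hand-made 3-d vectors whose
# axes loosely mean (medicine, money, sport). Unknown words are ignored.
TOY_VECTORS: Dict[str, Vector] = {
    "health": (1.0, 0.1, 0.1), "germs": (0.9, 0.0, 0.0), "sanitizer": (0.8, 0.2, 0.0),
    "finance": (0.1, 1.0, 0.0), "dollars": (0.1, 0.9, 0.0),
    "sports": (0.0, 0.1, 1.0), "match": (0.0, 0.0, 0.8),
}


def toy_embed(text: str) -> Vector:
    vectors = [TOY_VECTORS[w] for w in text.lower().split() if w in TOY_VECTORS]
    return tuple(sum(axis) for axis in zip(*vectors)) if vectors else (0.0, 0.0, 0.0)


text = "sanitizer kills germs and costs dollars"
print(zero_shot_topic(text, ["health", "finance", "sports"], toy_embed))  # health
print(zero_shot_topic(text, ["finance", "sports"], toy_embed))  # finance
```

The second call shows the "any labels" property: the model simply picks the best of whatever candidates it is given.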
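The entity types in the sample output below match the glossary descriptions that spaCy shows for the OntoNotes entity labels NORP, PERSON, ORG, PERCENT, and GPE. The sketch therefore assumes the NER model in step 4 emits codes like these. The article does not say which model is actually used. Ranking entities by mention count follows the earlier remark about entities "mentioned the most". The `mentions` list stands in for hypothetical NER output.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Descriptions copied from the sample output; the short codes are an assumption
# about what an OntoNotes-style NER model emits.
LABEL_DESCRIPTIONS = {
    "NORP": "Nationalities or religious or political groups",
    "PERSON": "People, including fictional",
    "ORG": "Companies, agencies, institutions, etc.",
    "PERCENT": 'Percentage, including "%"',
    "GPE": "Countries, cities, states",
}


def top_entities(mentions: List[Tuple[str, str]], k: int = 10) -> List[Dict[str, str]]:
    """Rank (entity text, label code) mentions by frequency, most frequent first,
    and emit one {entity: description} object per entity, as in the sample JSON."""
    counts = Counter(mentions)
    return [{text: LABEL_DESCRIPTIONS.get(label, label)}
            for (text, label), _ in counts.most_common(k)]


mentions = [("Maryland", "GPE"), ("Americans", "NORP"),
            ("Maryland", "GPE"), ("Richard Besser", "PERSON")]
print(top_entities(mentions, k=2))
```

`Counter.most_common` orders equal counts by first appearance, so ties keep their timeline order.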
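Step 5 produces an extractive summary, meaning it selects sentences from the script instead of writing new ones. The sample summary below is stitched together from spoken sentences. The article's summarizer is a neural network, but the sketch below swaps in a much simpler frequency-based scorer purely to illustrate the extractive idea. The stopword list and the scoring rule are assumptions.

```python
import re
from collections import Counter
from typing import List

# A tiny illustrative stopword list; real systems use much larger ones.
STOPWORDS = {"the", "a", "an", "and", "or", "of", "to", "is", "are", "in",
             "it", "with", "for", "on", "so", "that", "as", "be", "you"}


def content_words(sentence: str) -> List[str]:
    return [w for w in re.findall(r"[a-z']+", sentence.lower()) if w not in STOPWORDS]


def extractive_summary(text: str, n_sentences: int = 2) -> str:
    """Score sentences by the average frequency of their content words across
    the whole text, keep the top n, and return them in their original order."""
    # Sentence boundaries come from punctuation, so unpunctuated input breaks this.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    freq = Counter(w for s in sentences for w in content_words(s))

    def score(sentence: str) -> float:
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    keep = sorted(ranked[:n_sentences])
    return " ".join(sentences[i] for i in keep)


script = ("Soap beats sanitizer. The weather was nice. "
          "Use soap or sanitizer with enough alcohol.")
print(extractive_summary(script))
# Soap beats sanitizer. Use soap or sanitizer with enough alcohol.
```

The off-topic sentence is dropped because its words appear only once, while "soap" and "sanitizer" recur. A neural summarizer replaces this crude scoring but keeps the same select-and-reorder structure.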

Results

We started with short videos, up to ten minutes long, to speed up processing and get clearer examples. Here is one of them.

As a result, you get a JSON file containing the entities, keywords, summary, and topic of the video, as shown in the example below. The original spelling and punctuation are retained.

```json
{
  "entities": [
    {"Americans": "Nationalities or religious or political groups"},
    {"Richard Besser": "People, including fictional"},
    {"the University of Maryland": "Companies, agencies, institutions, etc."},
    {"sixty percent": "Percentage, including \"%\""},
    {"College Park": "Countries, cities, states"},
    {"Maryland": "Countries, cities, states"}
  ],
  "keywords": [
    "alcohol",
    "defense",
    "germ",
    "bacteria",
    "incubation",
    "soap",
    "sanitizer",
    "virus",
    "test"
  ],
  "summary": "In this season of flu and so many other germs were told again and again that hand sanitizers and soap are the best defense. Last year, Americans spent more than a hundred and seventy million dollars on hand sanitizers alone, so we wondered what works best and how do the sanitizers compar in a showdown with soap. It's something, noh, little odd about rubbing. The key with hands sanitizers is to use enough of it. if you can't get to a sink, look for one with at least sixty percent alcohol, but washing with soap any kind is better as long as you wash for long enough, Doctor Richard Besser, ABC news College Park, Maryland.",
  "topic": "health"
}
```
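A downstream application needs only the standard `json` module to consume this output. The sketch below assumes the field names shown in the sample. The article does not specify where the file is written, so the example parses an inline excerpt of the sample instead. Note that each entry in `entities` is a one-key object that maps an entity to its type.

```python
import json
from collections import defaultdict
from typing import Dict, List


def entities_by_type(report: dict) -> Dict[str, List[str]]:
    """Group entity names by type; each list item is a one-key {name: type} object."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for item in report.get("entities", []):
        for name, kind in item.items():
            groups[kind].append(name)
    return dict(groups)


# An excerpt of the sample output above, inlined so the example is self-contained.
raw = """{
  "entities": [
    {"Richard Besser": "People, including fictional"},
    {"College Park": "Countries, cities, states"},
    {"Maryland": "Countries, cities, states"}
  ],
  "keywords": ["soap", "sanitizer"],
  "summary": "The key with hands sanitizers is to use enough of it.",
  "topic": "health"
}"""

report = json.loads(raw)
print(report["topic"], report["keywords"])
print(entities_by_type(report))
```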

Despite the obvious imperfections, the result is promising. With the introduction of cognitive features, this pipeline should deliver much better results.

Among the challenges that we are planning to overcome are the following:

1. The script, as well as the entities, may contain misprints and non-existent words.

2. The speech recognition model doesn't identify speakers, so the summary doesn’t reflect who says what. Currently, the summary combines key sentences spoken by all speakers together, which may be confusing when there are many speakers, as in news programs or talk shows.

The first problem requires an additional check against external sources (dictionaries, online services). That check must be balanced against the risk of erasing important information that these sources do not contain.
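One way to strike that balance is to correct an unknown word only when a dictionary entry is very similar to it, and otherwise keep the word as a possible name or slang term. The sketch below implements this idea with Python's standard `difflib`. The word list and the `cutoff` value are illustrative assumptions; raising `cutoff` means fewer corrections but less risk of overwriting proper names.

```python
import difflib
from typing import Iterable, List


def conservative_spellcheck(words: List[str], vocabulary: Iterable[str], cutoff: float = 0.85) -> List[str]:
    """Correct a word only if it is unknown AND a dictionary entry is very similar.

    Unknown words with no close match (names, slang, new terms) are kept as-is.
    `cutoff` is difflib's similarity ratio threshold in [0, 1].
    """
    known = sorted({w.lower() for w in vocabulary})
    known_set = set(known)
    result = []
    for word in words:
        if word.lower() in known_set:
            result.append(word)
            continue
        match = difflib.get_close_matches(word.lower(), known, n=1, cutoff=cutoff)
        result.append(match[0] if match else word)
    return result


dictionary = ["sanitizers", "compare", "soap", "better", "showdown"]
print(conservative_spellcheck(["sanitizers", "compar", "Besser"], dictionary))
# ['sanitizers', 'compare', 'Besser']
```

Here "compar" is close enough to "compare" (similarity ratio about 0.92) to be fixed. "Besser" scores only about 0.67 against "better", so the surname survives.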

The second limitation is much easier to overcome with speaker diarization, which determines who spoke when.
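Speaker diarization usually involves three steps: splitting the audio into segments, computing a speaker embedding (a voice "fingerprint" vector) for each segment, and clustering the embeddings so that each cluster corresponds to one speaker. The sketch below assumes the embeddings already exist and uses a deliberately simple greedy clustering with a cosine-similarity threshold. Production systems typically rely on trained embedding models and more robust clustering. The vectors, the threshold, and the segment texts are made up.

```python
import math
from typing import List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def assign_speakers(embeddings: List[Sequence[float]], threshold: float = 0.8) -> List[int]:
    """Greedy online clustering of per-segment speaker embeddings.

    Each segment joins the closest existing speaker if the cosine similarity to
    that speaker's mean embedding is at least `threshold`; otherwise it starts a
    new speaker. Returns one speaker index per segment.
    """
    centroids: List[List[float]] = []
    counts: List[int] = []
    labels: List[int] = []
    for emb in embeddings:
        sims = [cosine(emb, c) for c in centroids]
        if sims and max(sims) >= threshold:
            k = sims.index(max(sims))
            counts[k] += 1
            # Update the running mean embedding of speaker k.
            centroids[k] = [c + (e - c) / counts[k] for c, e in zip(centroids[k], emb)]
        else:
            k = len(centroids)
            centroids.append(list(emb))
            counts.append(1)
        labels.append(k)
    return labels


segments = ["Hand sanitizers are popular.", "But is soap better?", "Soap wins if you wash long enough."]
embeddings = [(1.0, 0.0), (0.0, 1.0), (0.95, 0.05)]
for text, speaker in zip(segments, assign_speakers(embeddings)):
    print(f"Speaker {speaker + 1}: {text}")
```

Once every transcript segment carries a speaker label, each sentence selected for the summary can be attributed to the speaker of the segment it came from.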

Brave plans for the future

The AIHunters team has ambitious plans for enhancing its existing cognitive computing-based video analysis pipelines, as well as ideas for new ones.

Let us unveil some of them, starting with the less daring ones.

Along with the current output data, the text summarization module will soon provide chapters so that viewers can jump straight to the content they are interested in.
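The article does not say how chapters will be computed. One simple possibility, sketched here under that caveat, is to run the topic classifier over consecutive time windows of the transcript and start a new chapter whenever the topic changes. `Segment`, `Chapter`, and the window boundaries are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Segment:
    start: float  # seconds from the beginning of the video
    end: float
    topic: str


@dataclass
class Chapter:
    start: float
    end: float
    topic: str


def make_chapters(segments: List[Segment]) -> List[Chapter]:
    """Merge consecutive segments that share a topic into one chapter."""
    chapters: List[Chapter] = []
    for seg in segments:
        if chapters and chapters[-1].topic == seg.topic:
            chapters[-1].end = seg.end  # extend the current chapter
        else:
            chapters.append(Chapter(seg.start, seg.end, seg.topic))
    return chapters


timeline = [
    Segment(0, 40, "health"),
    Segment(40, 90, "health"),
    Segment(90, 150, "finance"),
    Segment(150, 200, "health"),
]
for ch in make_chapters(timeline):
    print(f"{ch.start:>5.0f}s  {ch.topic}")
```

Each chapter's `start` is the timestamp a player would seek to when a viewer picks that chapter.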

Speaker diarization and overall improvement of the pipeline are coming soon.

We will also lift the current restriction on input video types. Right now, the speech recognition model is trained mainly on news, interviews, TV shows, conferences, and similar content. In the future, the pipeline will work equally well with movies, music clips, and more.

To top it all off, the text summarization pipeline can be called a milestone: the missing rung on the ladder toward AIHunters’ daring plan to create a pipeline that performs complete cognitive audio-visual analysis. Combined semantic audio and visual analysis is expected to deliver a truly human-like level of perception and understanding.

After that, the pipeline can be integrated with existing products, such as Cognitive Mill™. It will identify speakers by their voices, recognize their faces, and match them to the corresponding celebrities.

This challenging plan has become one step closer to reality with the introduction of the text summarization pipeline.