Cognitive Computing for Media & Entertainment Industry Automation

Cognitive computing has been a much-discussed subject for quite a while. It is still strongly associated with IBM Watson, probably the first successful attempt to put cognitive computing theory into practice.

The core idea behind cognitive computing is that such systems are designed to make human-like decisions with the help of artificial intelligence.

The AIHunters team shared this idea, and that is why we decided to work in the field of cognitive computing. But our challenging goal, cognitive business automation, made us go further. While artificial intelligence tries to make machines smarter, cognitive computing aims to make smart machines decide like humans. To analyze complex video content, we need both.

Video is becoming the most popular type of content, yet it is also the most complex, costly, and time-consuming to handle in post-production workflows. The system has to correctly cut the video into complete segments and analyze the visual and audio content at the same time. Creating a technology able to quickly and accurately process and analyze video and make informed decisions in fully automated mode was a promising and exciting task, and we’ve managed to deliver innovative solutions for video processing and post-production in the Media and Entertainment industry.

Why we chose Media & Entertainment

The Media and Entertainment industry is boundless, producing vast amounts of video that translate into hundreds of hours of tedious routine work performed by hundreds of people. Yet it lacks the automation needed to digest the oceans of video content produced daily and to make its processing faster and more cost-effective.

Our AI scientists came up with a way to reduce the time and cost of video processing and post-production by combining cognitive automation with a unified, well-structured workflow.

This gives employees more time for creative tasks and helps deliver a breakthrough experience to the audience.

The AIHunters team has created a cloud platform for visual cognitive automation to watch media, make informed decisions, and take action instead of humans. It’s called Cognitive Mill™.


With the help of deep learning, digital image processing, cognitive computer vision, and traditional computer vision, Cognitive Mill™ is able to analyze any media content. It can process customers’ videos, sports events, movies, series, TV shows, or news, both live streams and recorded video content.

Implementing cognitive computing the right way

Always thinking outside the box, our AI scientists knew that deep learning, with its current limitations, is insufficient for our daily tasks, such as reasoning and other adaptive decision making, which are critical for processing highly variable data. Neural networks are still limited by their training sets; even complex end-to-end deep learning pipelines can serve as the basis for cognitive automation only in theory. In practice, they cannot cope with huge volumes of diverse video content.

We’ve combined the best practices of deep learning, cognitive science, computer vision, probabilistic AI, and math modeling to develop an entirely new approach to video content analysis and decision making. We integrated science into modern digital technology to imitate human behavior by emulating not only the human eye but also the human brain.

And now we can say that we have managed to create a cognitive computing system that is able to process complex video data.

Let us take a closer look at Cognitive Mill™, a cloud robot the AIHunters team has created, and how it works.

Cognitive computing equals a virtual cloud robot

It all started with artificial intelligence concepts and philosophy.

In our approach to cognitive decision making, we divide the process into two main stages:

1. Representations stage. The cloud robot watches media, imitating human focus and perception. We use deep learning, digital image processing, and both cognitive and traditional computer vision to emulate human eyes.

2. Cognitive decisions stage. The robot imitates the human brain’s work by making human-like decisions based on the analysis of the watched media. At this stage, we use probabilistic artificial intelligence, cognitive science, machine perception, and math modeling.

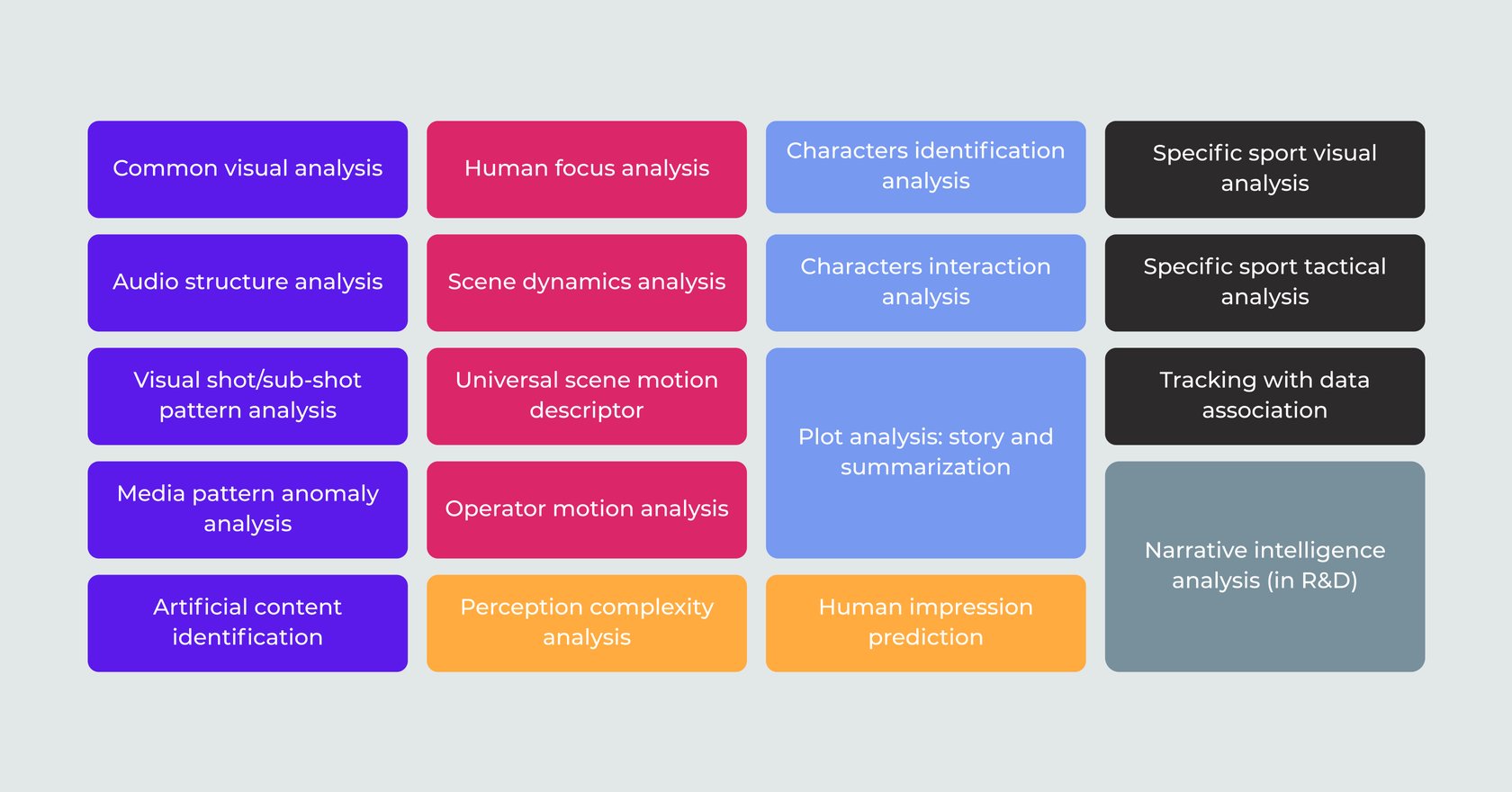
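To illustrate how the two stages can be kept separate, here is a minimal Python sketch. It assumes the representations stage has already reduced every frame to one hypothetical number: the probability that the frame shows core action rather than side content. For the decisions stage, it uses a two-state hidden Markov model decoded with the Viterbi algorithm, one standard probabilistic technique for making a consistent decision over a whole timeline. The state names and the `stay_prob` value are illustrative assumptions, not Cognitive Mill™ internals.

```python
import math
from typing import List, Sequence

# Hypothetical labels: side content (ads, credits) vs. core action.
STATES = ("side", "core")


def decide_timeline(core_probs: Sequence[float], stay_prob: float = 0.95) -> List[str]:
    """Decisions stage: Viterbi decoding of a two-state HMM.

    core_probs[t] is the representations stage's (assumed) estimate that
    frame t shows core action. A high stay_prob makes label changes costly.
    """
    if not core_probs:
        return []
    eps = 1e-9
    log_stay = math.log(stay_prob)
    log_switch = math.log(1.0 - stay_prob)

    def emission(p: float, state: int) -> float:
        # Use the per-frame score as the likelihood of each state.
        q = p if state == 1 else 1.0 - p
        return math.log(max(q, eps))

    # Uniform prior over both states for the first frame.
    scores = [math.log(0.5) + emission(core_probs[0], s) for s in (0, 1)]
    backptrs: List[List[int]] = []
    for p in core_probs[1:]:
        new_scores: List[float] = []
        ptrs: List[int] = []
        for s in (0, 1):
            # Score of reaching state s from each possible previous state.
            cand = [scores[prev] + (log_stay if prev == s else log_switch) for prev in (0, 1)]
            best_prev = 0 if cand[0] >= cand[1] else 1
            ptrs.append(best_prev)
            new_scores.append(cand[best_prev] + emission(p, s))
        scores = new_scores
        backptrs.append(ptrs)

    # Trace the most likely state sequence backwards from the best final state.
    state = 0 if scores[0] >= scores[1] else 1
    path = [state]
    for ptrs in reversed(backptrs):
        state = ptrs[state]
        path.append(state)
    path.reverse()
    return [STATES[s] for s in path]


frames = [0.1, 0.2, 0.1, 0.9, 0.8, 0.3, 0.9, 0.95]
print(decide_timeline(frames))
# → ['side', 'side', 'side', 'core', 'core', 'core', 'core', 'core']
```

Because a state change is expensive under a high `stay_prob`, the single low-scoring frame (0.3) inside a run of core action does not flip the decision, which is the kind of timeline-level consistency a per-frame threshold cannot provide.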

After extensive research, our AI scientists have already developed more than 50 unique algorithms and components to lay a solid foundation for cognitive business automation.


When creating our cognitive components, we keep them reusable by wrapping each of the human-imitating cognitive abilities into an independent module. This way, we can make different combinations to imitate various cognition flows for performing different tasks.
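The module-and-flow idea can be sketched in a few lines of Python. The `Module` and `CognitionFlow` classes, the shared context dictionary, and the toy brightness-based modules are hypothetical illustrations, not the platform's real interfaces. The point is only that one module (here `motion`) can be reused unchanged in different combinations.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]  # data shared between modules within one flow


@dataclass
class Module:
    """One independent, reusable ability (hypothetical interface)."""
    name: str
    requires: List[str]
    provides: str
    run: Callable[[Context], Any]


@dataclass
class CognitionFlow:
    """An ordered combination of modules that solves one task."""
    modules: List[Module] = field(default_factory=list)

    def execute(self, inputs: Context) -> Context:
        ctx = dict(inputs)  # do not mutate the caller's dictionary
        for module in self.modules:
            missing = [key for key in module.requires if key not in ctx]
            if missing:
                raise ValueError(f"{module.name} is missing inputs: {missing}")
            ctx[module.provides] = module.run(ctx)
        return ctx


# Toy modules over a list of per-frame brightness values (assumed input).
motion = Module("motion", ["frames"], "motion",
                lambda c: [abs(b - a) for a, b in zip(c["frames"], c["frames"][1:])])
cuts = Module("cuts", ["motion"], "cuts",
              lambda c: [i + 1 for i, m in enumerate(c["motion"]) if m > 0.5])
activity = Module("activity", ["motion"], "activity",
                  lambda c: sum(c["motion"]) / max(len(c["motion"]), 1))

# The same "motion" module is reused in two different flows.
cut_flow = CognitionFlow([motion, cuts])
activity_flow = CognitionFlow([motion, activity])

frames = [0.1, 0.1, 0.9, 0.9, 0.2]
print(cut_flow.execute({"frames": frames})["cuts"])  # → [2, 4]
```

Checking declared inputs before each step makes an invalid combination fail immediately with a clear message instead of deep inside a module.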

From AI algorithms to scalable business product

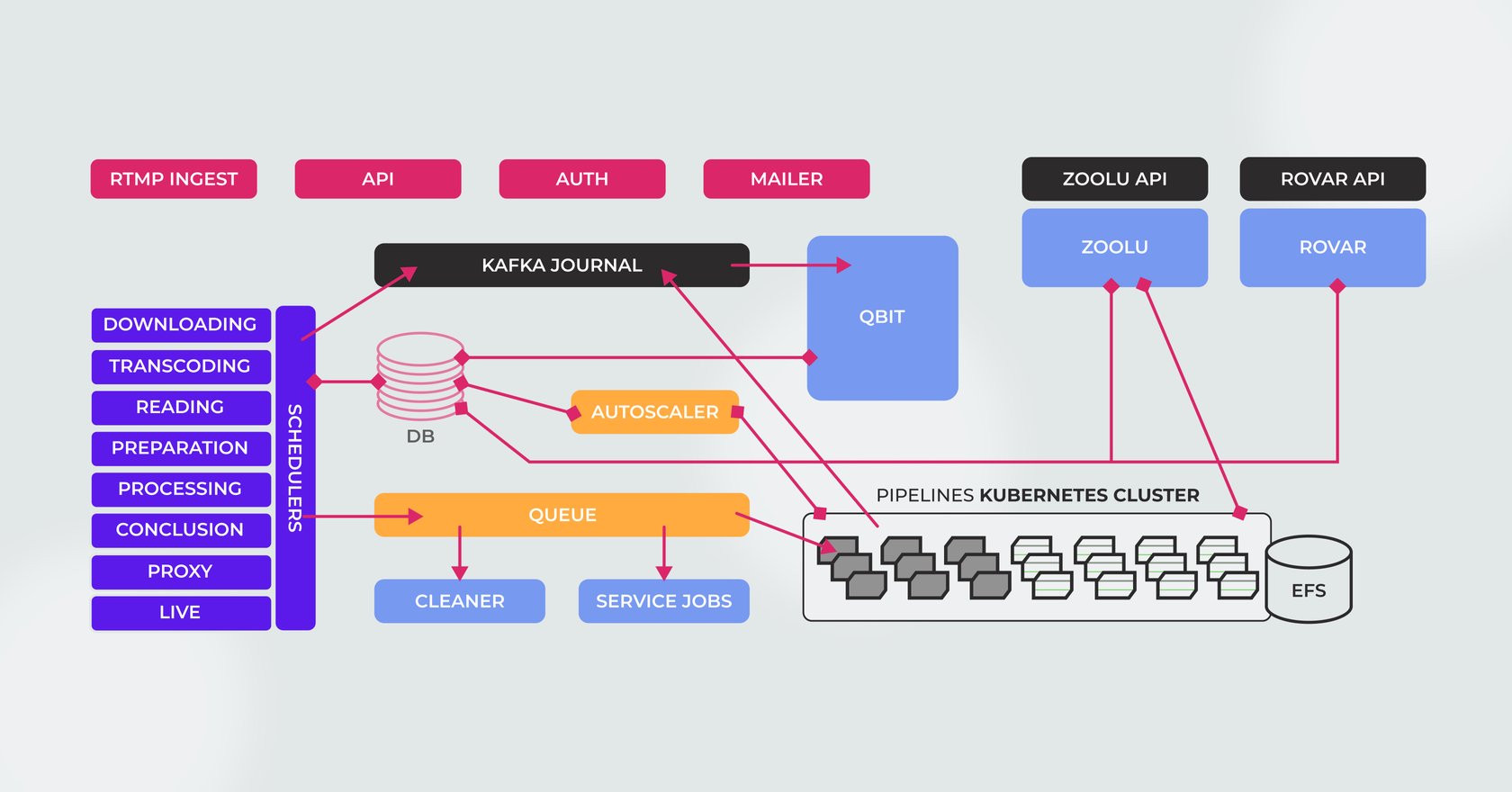

In our productization flow, we've built Docker containers for each AI module and moved all productization functionality into a dedicated SDK that is maintained independently. This way, our AI team can focus on math algorithms without being distracted by frame rate or file system issues.

Each module is configured for a specific pipeline depending on the business case.
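One way to picture the division of labor between the SDK and the AI team is a wrapper that absorbs timing irregularities, so the algorithm only ever sees evenly spaced samples. In this minimal sketch, frames are modeled as hypothetical `(timestamp, value)` pairs instead of decoded images, and `resample`, `run_with_sdk`, and `seconds_above` are illustrative names, not the actual SDK API.

```python
from typing import Callable, List, Sequence, Tuple, TypeVar

Frame = Tuple[float, float]  # (timestamp in seconds, hypothetical per-frame value)
T = TypeVar("T")


def resample(frames: Sequence[Frame], fps: float) -> List[float]:
    """Map irregular, possibly unordered frames onto a fixed-rate clock.

    For each tick, take the latest frame whose timestamp is at or before it.
    """
    if not frames:
        return []
    ordered = sorted(frames)
    start, end = ordered[0][0], ordered[-1][0]
    values: List[float] = []
    i, k = 0, 0
    while True:
        t = start + k / fps
        if t > end + 1e-9:
            break
        while i + 1 < len(ordered) and ordered[i + 1][0] <= t + 1e-9:
            i += 1
        values.append(ordered[i][1])
        k += 1
    return values


def run_with_sdk(algorithm: Callable[[List[float], float], T],
                 frames: Sequence[Frame], fps: float = 10.0) -> T:
    """Productization side: normalize timing, then call the pure algorithm."""
    return algorithm(resample(frames, fps), fps)


def seconds_above(values: List[float], fps: float, threshold: float = 1.5) -> float:
    """Algorithm side: only sees evenly spaced samples and their rate."""
    return sum(1 for v in values if v > threshold) / fps


frames = [(0.15, 2.0), (0.0, 1.0), (0.4, 3.0)]  # out of order, uneven spacing
print(run_with_sdk(seconds_above, frames))  # → 0.3
```

The algorithm function stays free of I/O and timing concerns, so it can be tested on plain lists while the wrapper evolves separately.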

As part of our pipelines, we use the GPU transcoding module for the following purposes:

1. To optimize resizing for the different deep learning and computer vision analyses.

2. To make processing insensitive to media container differences and broken metadata.
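The first purpose amounts to deciding, once per asset, which output sizes each analysis needs, so that a single decode can feed every resize. The sketch below computes only that plan. The target names and sizes in `ANALYSIS_TARGETS` are assumptions, and the actual resizing would run on the GPU rather than in Python.

```python
import math
from fractions import Fraction
from typing import Dict, Tuple

# Hypothetical analysis targets: maximum (width, height) each consumer accepts.
ANALYSIS_TARGETS: Dict[str, Tuple[int, int]] = {
    "detector": (640, 640),
    "classifier": (224, 224),
    "proxy": (1280, 720),
}


def fit_size(src_w: int, src_h: int, max_w: int, max_h: int) -> Tuple[int, int]:
    """Largest aspect-preserving size inside max_w x max_h, never upscaling.

    Dimensions are rounded down to even numbers, which many encoders need
    for 4:2:0 chroma subsampling. Fractions avoid floating-point drift.
    """
    scale = min(Fraction(max_w, src_w), Fraction(max_h, src_h), Fraction(1))
    w = max(2, math.floor(src_w * scale) // 2 * 2)
    h = max(2, math.floor(src_h * scale) // 2 * 2)
    return w, h


def plan_resizes(src_w: int, src_h: int) -> Dict[str, Tuple[int, int]]:
    """Compute every output size up front so one decode can feed all resizes."""
    return {name: fit_size(src_w, src_h, mw, mh)
            for name, (mw, mh) in ANALYSIS_TARGETS.items()}


print(plan_resizes(1920, 1080))
# → {'detector': (640, 360), 'classifier': (224, 126), 'proxy': (1280, 720)}
```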

We add dedicated media-downloading workers and media container parsers to the cloud productization pipeline and orchestrate them with Kubernetes.

The workers use EFS for data storage. They are connected to a queue of module segments and tasks created for them.


The queue is controlled and reprioritized by a set of scheduling microservices connected to the central processing DB.
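A reprioritizable task queue of this kind is commonly built on a binary heap. The sketch below follows the lazy-invalidation pattern from the Python `heapq` documentation: changing a task's priority pushes a new entry and marks the old one as removed instead of searching the heap for it. It is an in-process illustration with hypothetical task IDs; in the platform described here, the queue state lives in the central processing DB and is managed by separate microservices.

```python
import heapq
import itertools
from typing import Dict, List, Optional

_REMOVED = object()  # marks heap entries that were superseded


class TaskQueue:
    """Segment-task queue; a lower number means a higher priority."""

    def __init__(self) -> None:
        self._heap: List[list] = []
        self._entries: Dict[str, list] = {}
        self._counter = itertools.count()  # FIFO order among equal priorities

    def push(self, task_id: str, priority: int) -> None:
        if task_id in self._entries:
            # Invalidate the old entry instead of searching the heap for it.
            self._entries.pop(task_id)[-1] = _REMOVED
        entry = [priority, next(self._counter), task_id]
        self._entries[task_id] = entry
        heapq.heappush(self._heap, entry)

    def reprioritize(self, task_id: str, priority: int) -> None:
        if task_id not in self._entries:
            raise KeyError(task_id)
        self.push(task_id, priority)

    def pop(self) -> Optional[str]:
        while self._heap:
            _, _, task_id = heapq.heappop(self._heap)
            if task_id is not _REMOVED:
                del self._entries[task_id]
                return task_id
        return None

    def __len__(self) -> int:
        return len(self._entries)


q = TaskQueue()
q.push("movie-42/seg-1", 5)  # hypothetical task IDs
q.push("movie-42/seg-2", 5)
q.push("live-7/seg-1", 1)
q.reprioritize("movie-42/seg-2", 0)  # e.g. a scheduler promotes this segment
print([q.pop() for _ in range(4)])
# → ['movie-42/seg-2', 'live-7/seg-1', 'movie-42/seg-1', None]
```

The unique counter in each entry guarantees that the heap never has to compare task IDs or the removal marker.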

Platform events are registered in a Kafka journal.

QBIT (internal name) is the core microservice responsible for all of the platform's business logic, including pipeline configuration and processing flows.

QBIT controls all Kafka messages and makes the corresponding changes in the DB.
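To illustrate what applying journal messages to the DB can mean, here is a minimal Python sketch that replays events into a process-status table. The Kafka consumer is left out (events are plain dictionaries), and the statuses and allowed transitions in `ALLOWED_FROM` are hypothetical, loosely based on the workflow steps in this article.

```python
from typing import Dict, Iterable, List, Optional, Set

# Hypothetical process statuses and the statuses each one may follow.
ALLOWED_FROM: Dict[str, Set[Optional[str]]] = {
    "registered": {None},
    "downloaded": {"registered"},
    "transcoded": {"downloaded"},
    "processing": {"transcoded"},
    "done": {"processing"},
    "failed": {"registered", "downloaded", "transcoded", "processing"},
}


def apply_events(db: Dict[str, str], events: Iterable[dict]) -> List[dict]:
    """Apply journal events to a process-status table in order.

    Returns the events that were rejected as invalid transitions.
    """
    rejected: List[dict] = []
    for event in events:
        pid, new_status = event["process_id"], event["status"]
        if db.get(pid) in ALLOWED_FROM.get(new_status, set()):
            db[pid] = new_status
        else:
            rejected.append(event)
    return rejected


journal = [
    {"process_id": "p1", "status": "registered"},
    {"process_id": "p1", "status": "downloaded"},
    {"process_id": "p2", "status": "registered"},
    {"process_id": "p1", "status": "done"},  # skips stages, so it is rejected
    {"process_id": "p2", "status": "failed"},
]
db: Dict[str, str] = {}
print(apply_events(db, journal), db)
```

Because every accepted change is derived from the journal, replaying the same events into an empty table rebuilds the same state, which is one reason to keep platform events in an append-only log.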

In the external communication layer, we have an independent RTP media server for live stream ingest.

To make the platform easier to use and operate, we’ve created ZOOLU (internal name), a microservice responsible for Kubernetes monitoring, and ROVAR, a microservice that can build dynamic web visualizers for any type of media cognitive automation output.

Our simplified workflow looks like this

1. The system gets a request for a new process.

2. The requested process is registered in the Kafka journal.

3. The pipeline starts downloading a video asset provided by the customer.

4. The downloaded file is transcoded into several files with different resolutions defined by the pipeline configuration.

A dedicated proxy file is also created to adapt the media for our web visualizer UI.

5. The platform creates tasks for the pipeline stages.

Typically, we process media in multiple logical segments. The so-called ‘eyes’ workers handle scaling and performance, while the ‘decision’ workers operate on representations of the whole timeline.

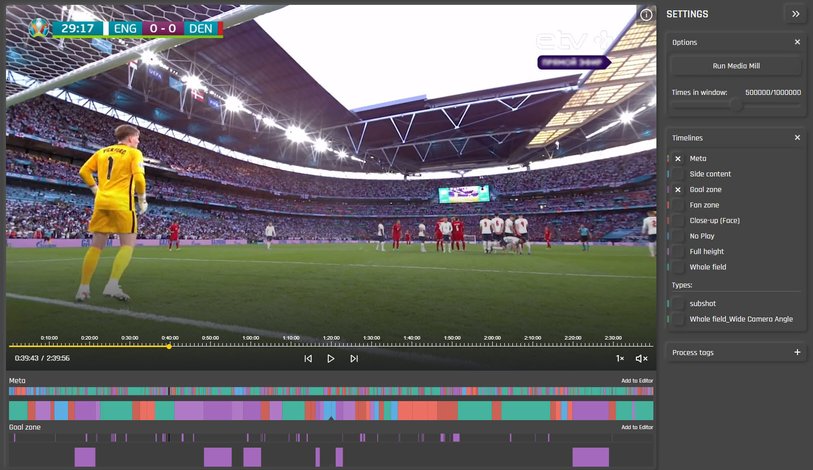
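The segment-based split between ‘eyes’ and ‘decision’ workers can be sketched as two functions: one that cuts the timeline into slightly overlapping windows for parallel per-segment analysis, and one that merges the per-segment detections back into a single timeline. The window length, overlap, and duplicate tolerance are illustrative assumptions, and the detections are hypothetical timestamps rather than real model outputs.

```python
from typing import Iterable, List, Tuple


def split_segments(duration: float, seg_len: float, overlap: float) -> List[Tuple[float, float]]:
    """'Eyes' side: cut [0, duration] into windows of seg_len seconds that
    overlap, so an event on a boundary is fully visible to at least one worker."""
    if seg_len <= overlap:
        raise ValueError("seg_len must be larger than overlap")
    duration = float(duration)
    segments: List[Tuple[float, float]] = []
    start = 0.0
    while start < duration:
        end = min(start + seg_len, duration)
        segments.append((start, end))
        if end >= duration:
            break
        start = end - overlap
    return segments


def merge_detections(per_segment: Iterable[Iterable[float]], tolerance: float = 1.0) -> List[float]:
    """'Decision' side: merge absolute timestamps from all segments into one
    timeline, dropping near-duplicates caused by the overlapping windows."""
    merged: List[float] = []
    for t in sorted(x for segment in per_segment for x in segment):
        if not merged or t - merged[-1] > tolerance:
            merged.append(t)
    return merged


windows = split_segments(100, 40, 5)
# Hypothetical detections returned by three parallel workers, one per window.
detections = [[12.0, 38.5], [38.9, 60.0], [72.0]]
print(windows, merge_detections(detections))
```

The overlap trades a little duplicated computation for robustness at segment boundaries; the merge step then removes the duplicates that overlap produces.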

As a result of the decision stage, we get an instruction JSON file that contains metadata about highlight scenes, specific events, time markers for post-production, and so on, depending on the pipeline.
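The exact schema of the instruction file is not described here, so the sketch below invents a minimal one for illustration: an asset ID, a pipeline name, and a time-ordered list of markers. All field names are assumptions.

```python
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass
class Marker:
    start: float  # seconds from the beginning of the asset
    end: float
    label: str    # e.g. "highlight", "ad", "credits" (illustrative labels)
    score: float  # decision confidence in [0, 1]


def build_instructions(asset_id: str, pipeline: str, markers: List[Marker]) -> str:
    """Serialize decisions into a hypothetical instruction JSON document."""
    for m in markers:
        if m.end <= m.start:
            raise ValueError(f"empty or inverted marker: {m}")
    doc = {
        "asset_id": asset_id,
        "pipeline": pipeline,
        "markers": [asdict(m) for m in sorted(markers, key=lambda m: m.start)],
    }
    return json.dumps(doc, indent=2)


print(build_instructions("match-001", "sports-highlights", [
    Marker(95.0, 112.5, "highlight", 0.93),
    Marker(12.0, 30.5, "highlight", 0.88),
]))
```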

EFS is cleaned automatically once processing is done, and all reusable files are moved to S3.

The generated JSON metadata files can be exported to a customer's infrastructure for further processing with third-party software, or they can be used in other Cognitive Mill™ pipelines.
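As an example of third-party processing, a marker file can be turned into trim commands for FFmpeg. The sketch assumes a hypothetical schema (`markers` with `start`, `end`, and `label` fields) and only builds the command lines; it does not run FFmpeg. The `-i`, `-ss`, `-to`, and `-c copy` options are standard FFmpeg options.

```python
import json
import shlex
from typing import List

# Hypothetical instruction file content.
SAMPLE = """{
  "asset_id": "match-001",
  "markers": [
    {"start": 12.0, "end": 30.5, "label": "highlight", "score": 0.88},
    {"start": 40.0, "end": 70.0, "label": "ad", "score": 0.97},
    {"start": 95.0, "end": 112.5, "label": "highlight", "score": 0.93}
  ]
}"""


def ffmpeg_cut_commands(instructions: str, source: str, label: str = "highlight") -> List[List[str]]:
    """Turn markers with the given label into one ffmpeg trim command each.

    -ss/-to placed after -i select the output time range; -c copy avoids
    re-encoding, so cut points are limited to keyframes.
    """
    doc = json.loads(instructions)
    selected = [m for m in doc["markers"] if m["label"] == label]
    commands: List[List[str]] = []
    for i, m in enumerate(selected):
        commands.append([
            "ffmpeg", "-i", source,
            "-ss", f"{m['start']:.3f}", "-to", f"{m['end']:.3f}",
            "-c", "copy", f"{label}_{i:03d}.mp4",
        ])
    return commands


for cmd in ffmpeg_cut_commands(SAMPLE, "match.mp4"):
    print(shlex.join(cmd))  # or run it with subprocess.run(cmd, check=True)
```

Stream copying keeps the cuts fast but may not be frame-accurate; re-encoding instead of using `-c copy` gives exact boundaries at a higher processing cost.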

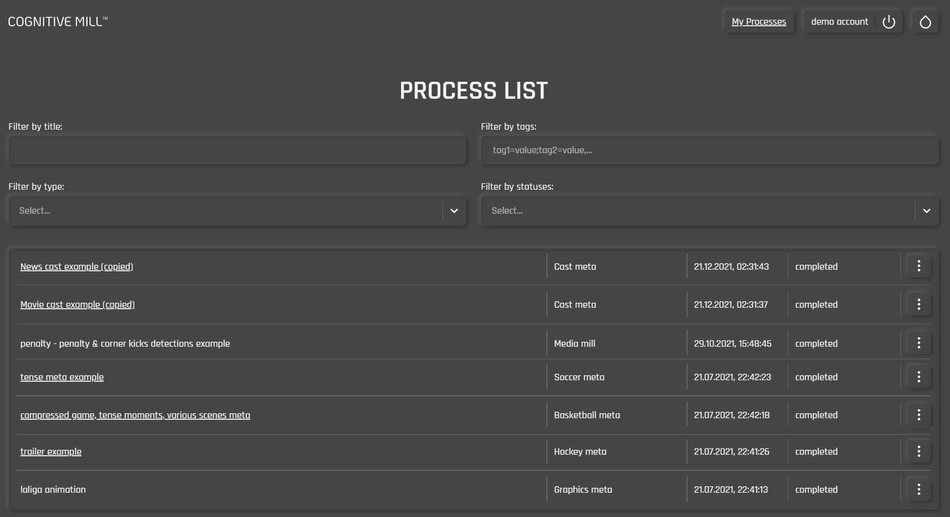

A new process can be created via the API or via the UI.

The Cognitive Mill™ platform has sophisticated pipeline and process management as well as monitoring, administration, and scaling options for each of our customers and our team.

What Cognitive Mill™ offers

Cognitive Mill™ offers unique products and solutions for OTT platforms, broadcasters, TV channels, telecoms, media producers, sports leagues, etc.

The cognitive computing cloud platform brings together six products:

- Cognitive Mill™ offers brilliant solutions for automated generation of sports and movie highlight reels.

- Cognitive Mill™ has a great solution for side content skipping, intelligent ad insertion, and EPG correction.

- Cognitive Mill™ is handy for adapting horizontal videos to the portrait aspect ratio of social networks.

- Cognitive Mill™ summarizes video content and identifies main and secondary characters without extras and accidental people.

- Cognitive Mill™ detects repetitive moving graphics according to your sample in various live and recorded events.

- Cognitive Mill™ identifies nudity in video content and marks the ‘dangerous’ segments in the video timeline.
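For adapting horizontal videos to a portrait aspect ratio, the core geometry is a full-height crop window with a 9:16 aspect ratio that follows the main subject. The sketch below assumes a hypothetical upstream detector already supplies the subject's horizontal position per frame, and it uses an exponential moving average as one simple way to keep the virtual camera from jittering. It is not a description of how Cognitive Mill™ performs reframing.

```python
from typing import List, Tuple


def portrait_crop(src_w: int, src_h: int, subject_x: float,
                  ratio: Tuple[int, int] = (9, 16)) -> Tuple[int, int, int, int]:
    """Full-height crop (x, y, w, h) with the target aspect ratio, centred on
    subject_x (from a hypothetical detector) and clamped to the frame."""
    rw, rh = ratio
    crop_w = min(src_w, (src_h * rw // rh) // 2 * 2)  # even width for encoders
    x = int(round(subject_x - crop_w / 2))
    x = max(0, min(x, src_w - crop_w))
    return x, 0, crop_w, src_h


def smooth(xs: List[float], alpha: float = 0.2) -> List[float]:
    """Exponential moving average of subject positions across frames."""
    out: List[float] = []
    for x in xs:
        out.append(x if not out else alpha * x + (1 - alpha) * out[-1])
    return out


print(portrait_crop(1920, 1080, 960))  # → (657, 0, 606, 1080)
```

In use, the per-frame subject positions would be smoothed first and each smoothed value then passed to `portrait_crop`, so the window glides rather than jumps when the subject moves.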

Wrapping up: the robot’s achievements

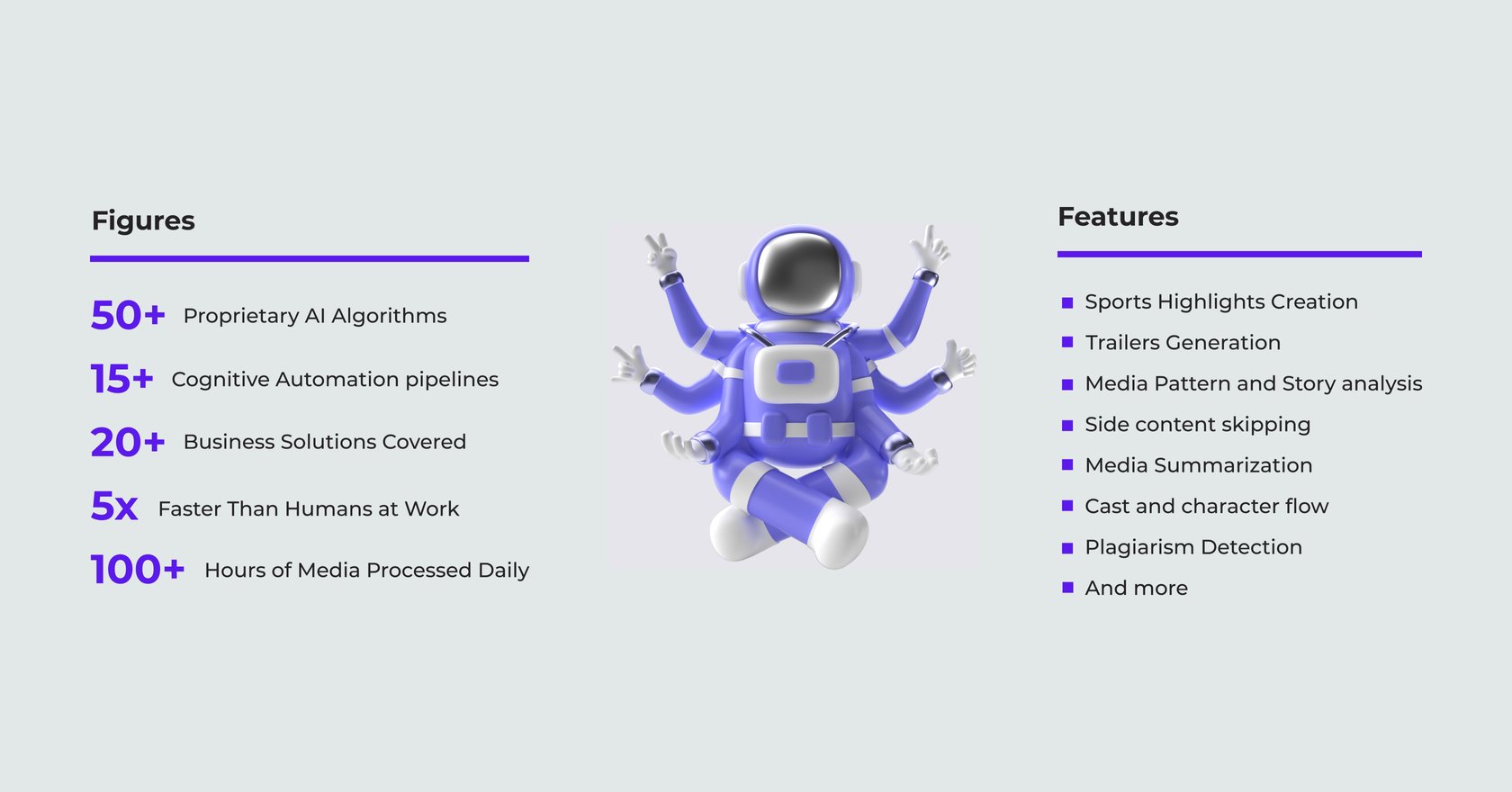

When it comes to technological achievements, numbers speak louder than words. In less than a year, we’ve achieved the following results:

- We’ve created more than 50 proprietary AI algorithms and more than 15 cognitive automation pipelines.

- Our efficient solutions have covered more than 20 business cases.

- The Cognitive Mill™ platform can process media 50 times faster than a human would.

- It already processes more than 100 hours of media for different purposes daily. We have only just begun to analyze market demand, and it is already clear that 100 hours is not enough. Our goal is 2,000 hours of media per day.

Here is a brief summary of the Cognitive Mill™ features we have already delivered:

- Cognitive Mill™ can automatically generate top-notch sports highlight reels, movie teasers, and trailers.

- Cognitive Mill™ can summarize any type of media content, analyze the pattern of the story, and even the work of a camera operator.

- We’ve finalized the product that can differentiate the side content, like ads or closing credits, from the core action and provide skip recommendations.

- The platform can differentiate main characters from the secondary ones in movie scenes, sports events, TV shows, etc.

We are sure that our innovative technology can cover any use case of the Media & Entertainment industry. It is flexible by design, so we can easily customize the existing pipelines for your business cases. Cognitive business automation is real — and you can start using it today.

Visit run.cognitivemill.com to try it out. Don't hesitate to contact us to ask questions, share your ideas, suggestions and business needs, request a demo, or get a free trial.