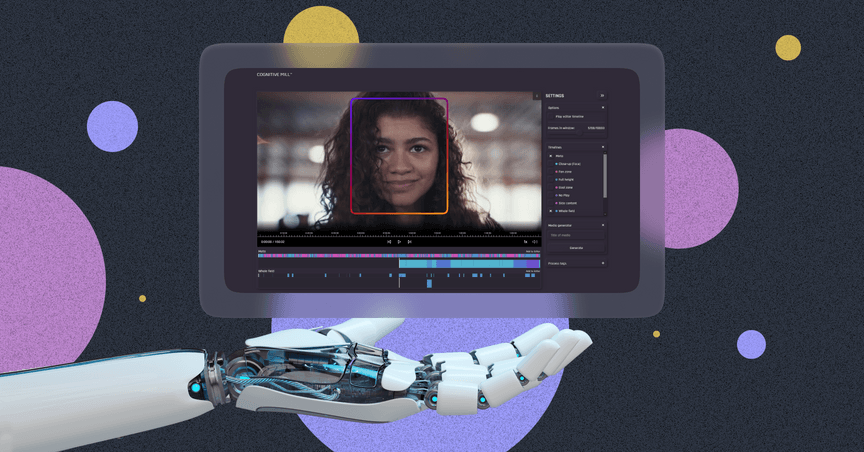

Evolving Cognitive Mill™ into a flexible media generation and editing product

Cognitive Mill™ is a Cognitive Mill product that generates output media files, such as sports highlights, movie trailers, and cropped videos, from an input video and a JSON file containing the required metadata.

We’ve created it to be flexible and highly customizable so that clients can configure the media assembly process according to their business needs as well as use it in the fully automated mode. It’s fast and easy to implement new features into the refactored version of Cognitive Mill™.

But it has not always been like that. Of course, the AIHunters team wasn’t the first to create a media generation tool. But we’ve developed a product that could work equally well as an integrated part of the Cognitive Mill platform and as an independent media generator.

A bit of history

The history of Cognitive Mill™ evolution has three milestones.

1. One service completed operations stage by stage using the file system to read the segments and store temporary files.

In the first iteration, we used one service in which the original video went through several stages consecutively.

In the first stage, the video was split into frames.

In the second stage, the audio track was extracted.

Then the required frames were selected and processed with OpenCV to apply different effects. Finally, they were passed to an external program, FFmpeg, which merged the frames and the audio into the output video.

The stages followed one another, which wasn’t fast enough.
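
As a rough sketch of how such stages might be chained through the file system, the snippet below builds FFmpeg command lines for frame splitting, audio extraction, and final merging. The paths, the frame rate, the PNG and AAC intermediates, and the function name are assumptions made for illustration, not the original service code; the OpenCV effect step would run on the PNG files between stages 2 and 3.

```python
from typing import List


def build_stage_commands(src: str, workdir: str, out: str, fps: int = 25) -> List[List[str]]:
    """Return FFmpeg argument lists for a file-system-based pipeline (hypothetical layout)."""
    frames = f"{workdir}/frames/%06d.png"  # one PNG per decoded frame (assumed naming)
    audio = f"{workdir}/audio.m4a"         # extracted audio track (assumed container)
    return [
        # Stage 1: split the video into individual frames stored on disk.
        ["ffmpeg", "-i", src, frames],
        # Stage 2: extract the audio track, dropping the video stream (-vn).
        ["ffmpeg", "-i", src, "-vn", "-c:a", "aac", audio],
        # Stage 3: merge the (processed) frames and the audio into the output video.
        ["ffmpeg", "-framerate", str(fps), "-i", frames, "-i", audio,
         "-c:v", "libx264", "-pix_fmt", "yuv420p", "-shortest", out],
    ]


for cmd in build_stage_commands("input.mp4", "/tmp/work", "trailer.mp4"):
    print(" ".join(cmd))
```

Every stage reads from and writes to disk, so each one has to wait for the previous stage to finish writing its files.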

2. Multiple services simultaneously completed operations using the file system to read the segments and store temporary files.

The team decided to change the approach and use multiple services that may start and do their job simultaneously instead of running consecutive stages within one service.

The use of multiple services and the parallelization of stage operations accelerated the performance.

But we discovered that the real bottleneck was the way we used the file system as buffer storage for frames.

Decoded raw frames were saved to the file system, which consumed much of its resources.

Optimizing the system meant a complete refactoring. And the AIHunters team accepted the challenge.

3. One service consecutively completed operations stage by stage, using the RAM to store temporary data and the file system for reading and saving the segments for the output video.

We stopped saving temporary files, especially raw frames, in the file system and started using the RAM to store temporary data. Relying on the file system took far more resources and slowed down the processing.

Changing the temporary data storage from the file system to RAM made the system work more efficiently.

Now we read a frame, decode it, and immediately pass it on to be written to the final video.

And though only one service is used now, this change has significantly reduced the processing time.
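
The idea can be illustrated with a small generator pipeline in which each frame is handed from the decoder to the effect step and on to the writer without touching the disk. This is a simplified sketch: the frames are stand-ins (plain lists of pixel values) and all function names are hypothetical, whereas the real service works with decoded video frames.

```python
from typing import Callable, Iterable, Iterator, List

Frame = List[int]  # stand-in for a decoded frame (assumption: real frames are image arrays)


def decode_frames(source: Iterable[Frame]) -> Iterator[Frame]:
    """Yield frames one at a time instead of dumping them all to temporary files."""
    for frame in source:
        yield frame


def apply_effect(frames: Iterable[Frame], effect: Callable[[Frame], Frame]) -> Iterator[Frame]:
    """Apply an effect lazily, one frame at a time."""
    for frame in frames:
        yield effect(frame)


def write_video(frames: Iterable[Frame], sink: List[Frame]) -> int:
    """Push each processed frame straight to the output sink and return the frame count."""
    count = 0
    for frame in frames:
        sink.append(frame)  # a real writer would encode the frame here
        count += 1
    return count


def darken(frame: Frame) -> Frame:
    """Toy effect: halve every pixel value."""
    return [pixel // 2 for pixel in frame]


output: List[Frame] = []
written = write_video(apply_effect(decode_frames([[200, 100], [50, 10]]), darken), output)
print(written, output)
```

Because the generators are lazy, a frame is decoded only when the writer asks for the next one, so no intermediate frame files are needed.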

Evolution in numbers

And here’s the performance evolution in numbers. The trailer generation time was reduced from 47.1 seconds to 6.6 seconds.

In the first implementation, processing took 47.1 seconds.

The usage of multiple services in parallel in the second iteration reduced the time to 19.0 seconds.

In the final iteration, switching from the file system to RAM made processing roughly three times faster again, so it now takes only 6.6 seconds.
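
The speedups implied by these figures can be checked with a few lines of Python. The labels are shorthand introduced here; only the three timings come from the measurements above.

```python
# Trailer generation time per iteration, in seconds (figures from the text).
timings_s = {
    "single service, file system": 47.1,
    "parallel services, file system": 19.0,
    "single service, RAM": 6.6,
}


def speedup(before: float, after: float) -> float:
    """How many times faster the newer version is."""
    return before / after


first, second, third = timings_s.values()
step_1_to_2 = speedup(first, second)
step_2_to_3 = speedup(second, third)
overall = speedup(first, third)

print(f"iteration 1 -> 2: {step_1_to_2:.2f}x")
print(f"iteration 2 -> 3: {step_2_to_3:.2f}x")
print(f"overall:          {overall:.2f}x")
```

This works out to about 2.5x from parallelization, about 2.9x from moving temporary data to RAM, and a little over 7x overall.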

The current version

The refactoring allowed us to make the system more flexible and customizable.

Besides the basic frame splitting and merging functionality, we can easily add effect parameters to the configuration, such as a watermark, a custom intro and outro, and the transition time and color, with or without a logo, as shown in the example below.

The team created an internal mechanism that enables the easier implementation of new features.

The evolution of Cognitive Mill™ has been relatively fast thanks to the use of Python with multiple external libraries. Python is considered slow, yet we didn’t lose speed and definitely gained flexibility and faster implementation of new features.

In the current version of Cognitive Mill™, we’ve leveled it up from a simple media generation tool that could only split and merge the given segments to a product that can be edited and customized according to the client’s business needs.

The default configuration allows clients to customize the watermark’s size, position, and transparency, as well as the transition time, color, and logo shown between the segments if required. It also makes it possible to add an intro and outro, as in the example below.

    "config": {
        "logo": {
            "path": "./data/logo/LogoAIH3.png",
            "position": "right_down",
            "alpha": 0.5,
            "size": [150, 38]
        },
        "fade": {
            "color_rgb": [0, 0, 0],
            "transition_frames_number": 5
        },
        "alpha_transition": {
            "video_meta_list": [
                {
                    "dar": "16/9",
                    "video_path": "/app/data/animation/Bounce between videos frames/new 16_9/"
                }
            ]
        },
        "intro": {
            "video_meta_list": [
                {
                    "dar": "16/9",
                    "video_path": "/app/data/animation/Videos openingin /Video-openingin[16_9].mp4"
                }
            ]
        },
        "outro": {
            "video_meta_list": [
                {
                    "dar": "16/9",
                    "video_path": "/app/data/animation/Videos ending/Video-ending[16_9].mp4"
                }
            ]
        },
        "segment_rounding": {
            "start": "floor",
            "end": "floor"
        }
    }
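
To make these settings more concrete, here is a minimal sketch of how the `logo` and `fade` values could be interpreted. It assumes that `right_down` means the bottom-right corner, that `size` is `[width, height]` in pixels, and that the fade blends linearly toward `color_rgb` over `transition_frames_number` frames. The margin and the function names are hypothetical, and the actual implementation may differ.

```python
from typing import List, Tuple

config = {
    "logo": {"position": "right_down", "alpha": 0.5, "size": [150, 38]},
    "fade": {"color_rgb": [0, 0, 0], "transition_frames_number": 5},
}


def logo_top_left(frame_w: int, frame_h: int, logo: dict, margin: int = 10) -> Tuple[int, int]:
    """Top-left pixel of the logo; only the position used in the example is handled."""
    logo_w, logo_h = logo["size"]
    if logo["position"] == "right_down":  # assumed to mean the bottom-right corner
        return frame_w - logo_w - margin, frame_h - logo_h - margin
    raise ValueError(f"unsupported position: {logo['position']}")


def blend(pixel: List[int], overlay: List[int], alpha: float) -> List[int]:
    """Standard alpha blend per channel: alpha * overlay + (1 - alpha) * pixel."""
    return [round(alpha * o + (1 - alpha) * p) for p, o in zip(pixel, overlay)]


def fade_weights(frames_number: int) -> List[float]:
    """Linear weight of the fade color for each transition frame, ending at 1.0."""
    return [(i + 1) / frames_number for i in range(frames_number)]


fade = config["fade"]
print(logo_top_left(1920, 1080, config["logo"]))
print(blend([200, 100, 50], [100, 100, 100], config["logo"]["alpha"]))
for weight in fade_weights(fade["transition_frames_number"]):
    print(blend([200, 100, 50], fade["color_rgb"], weight))
```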

And the trailer.json file includes the type of trailer and the start and end time for segments as shown in the snippet below.

    {
        "data": [
            {
                "id": "diversity_trailer",
                "segments": [
                    {
                        "end": {
                            "ms": 820892,
                            "time": "0:13:40.892000"
                        },
                        "repr_ms": 818000,
                        "start": {
                            "ms": 816680,
                            "time": "0:13:36.680000"
                        },
                        "story": 4,
                        "type": "shot"
                    },
                    {
                        "end": {
                            "ms": 1126083,
                            "time": "0:18:46.083000"
                        },
                        "repr_ms": 1122340,
                        "start": {
                            "ms": 1121040,
                            "time": "0:18:41.040000"
                        },
                        "story": 6,
                        "type": "shot"
                    },
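
The segment boundaries are given in milliseconds, so they have to be mapped to frame indices before cutting. The sketch below shows one way to do that, assuming that `segment_rounding` controls how each boundary is snapped to a frame and that the video runs at 25 fps. Both are assumptions for illustration; only the `floor` mode appears in the configuration above, and the `ceil` mode and the function names are hypothetical.

```python
import math
from typing import Dict, Tuple

# Rounding modes: "floor" comes from the config example, "ceil" is an assumed alternative.
ROUNDING = {"floor": math.floor, "ceil": math.ceil}

segment = {"start": {"ms": 816680}, "end": {"ms": 820892}}
segment_rounding = {"start": "floor", "end": "floor"}


def ms_to_frame(ms: int, fps: float, mode: str) -> int:
    """Convert a timestamp in milliseconds to a frame index using the given rounding mode."""
    return int(ROUNDING[mode](ms * fps / 1000))


def segment_frames(seg: Dict, rounding: Dict[str, str], fps: float = 25.0) -> Tuple[int, int]:
    """Return the (start_frame, end_frame) pair for one segment."""
    start = ms_to_frame(seg["start"]["ms"], fps, rounding["start"])
    end = ms_to_frame(seg["end"]["ms"], fps, rounding["end"])
    return start, end


print(segment_frames(segment, segment_rounding))
```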

Here’s an example of a trailer automatically generated by Cognitive Mill™ on the basis of the given trailer.json file.

Lessons learned

1. Don’t fear change.

We decided not to stick to the initial version, changed the logic and philosophy of the product, and made the complete refactoring of Cognitive Mill™. This decision resulted in better performance and flexibility for further development and implementation of new features.

2. Combine integration and independence.

At first, Cognitive Mill™ was meant to be a media generation tool integrated into the Cognitive Mill platform. But the team also considered the possibility of evolving it into an independent product that can be used outside the platform.

What is more, we deliberately created a product that can be easily operated by both humans and machines. Clients can customize the configuration to create a video according to their needs. Or the output media can be generated in the fully automated mode.

3. Be flexible.

We chose Python to gain more flexibility and enable fast implementation of new features due to the usage of multiple external libraries.

4. Create and adapt.

We write code to perform custom manipulations with frames, and at the same time, we effectively use already existing programs for common operations such as merging audio and video, applying filters to audio tracks, etc. So we combine existing programs like FFmpeg and libraries such as OpenCV, creating wrappers with customizable configurations to integrate them into our system. This approach has proven to be convenient and effective.
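
A wrapper of this kind might look like the sketch below, which turns a small configuration object into an FFmpeg command for merging a video stream with an audio track and optionally applying audio filters. The class, its fields, and the defaults are hypothetical and only illustrate the pattern; the FFmpeg options used (`-map`, `-c:v`, `-c:a`, `-af`) are standard ones.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class MergeConfig:
    """Hypothetical wrapper settings; the field names are illustrative."""
    video_codec: str = "copy"  # keep the video stream as-is
    audio_codec: str = "aac"   # re-encode audio so that filters can be applied
    audio_filters: List[str] = field(default_factory=list)  # e.g. ["volume=0.8"]


def build_merge_command(video: str, audio: str, out: str, cfg: MergeConfig) -> List[str]:
    """Build an FFmpeg command that merges one video stream with one audio stream."""
    cmd = [
        "ffmpeg", "-y", "-i", video, "-i", audio,
        "-map", "0:v:0", "-map", "1:a:0",  # video from input 0, audio from input 1
        "-c:v", cfg.video_codec, "-c:a", cfg.audio_codec,
    ]
    if cfg.audio_filters:
        # FFmpeg chains audio filters with commas inside a single -af argument.
        cmd += ["-af", ",".join(cfg.audio_filters)]
    return cmd + [out]


cfg = MergeConfig(audio_filters=["volume=0.8", "afade=t=in:d=1"])
print(" ".join(build_merge_command("video.mp4", "audio.m4a", "merged.mp4", cfg)))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` on a machine where FFmpeg is installed.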

Brave plans for the future

The flexibility of the system and the choice of Python as the main language for Cognitive Mill™ allow us to use the libraries and promptly create prototypes, add new features, and develop the product.

And we have a lot of brave plans to develop it as an editing tool.

We are considering adding filters to edit the visual part, as well as uploading external audio tracks and applying them to the video as custom audio.

It might even be possible to allow third parties to plug in their own Python code to perform specific operations.

Cognitive Mill™, as a part of the Cognitive Mill platform, is a sufficient and extendable secondary framework for extractive summarization. It can solve various cognitive automation business cases in the media and entertainment industry.

And now comes a real milestone in AIHunters' history: we are creating Cogniverse for the abstractive generation of 3D visual content with the help of 3D rendering, so that all the AI power of Cognitive Mill can not only handle 2D automated editing but also solve our clients’ tasks in 3D.

We tried, experimented, made mistakes, learned from them, and managed to achieve impressive results. And the best is yet to come. Learn more about how to use Cognitive Mill™, and don’t hesitate to try it out at run.cognitivemill.com.